Method 1 (verified to work):

Find the my.ini configuration file in the MySQL installation directory and add the following lines under the [mysqld] section:

max_allowed_packet=500M

wait_timeout=288000

interactive_timeout=288000

The three parameters mean the following:

max_allowed_packet is the largest packet MySQL accepts, that is, the largest single request (for example, one SQL statement) you can send. It controls the maximum length of the communication buffer.

wait_timeout is the maximum time, in seconds, that the server waits on an idle connection before closing it. You can customize this value. However, if it is too short, the error "MySQL server has gone away" (#2006) appears once the timeout is exceeded.

interactive_timeout is the same limit applied to interactive clients, such as the mysql command-line tool.
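As a quick sanity check before restarting the server, the settings can be verified programmatically. The following is a minimal Python sketch, not part of the original instructions: the function names mysqld_options and missing_options and the sample text are made up for illustration, and it assumes a simple option file without include directives.

```python
import configparser

# The three settings discussed above.
REQUIRED = ("max_allowed_packet", "wait_timeout", "interactive_timeout")


def mysqld_options(ini_text):
    """Return the options found in the [mysqld] section as a dict."""
    parser = configparser.ConfigParser(
        allow_no_value=True,  # MySQL allows bare flags with no value
        strict=False,         # tolerate repeated sections or keys
        interpolation=None,   # treat % characters literally
    )
    parser.read_string(ini_text)
    if not parser.has_section("mysqld"):
        return {}
    # MySQL treats dashes and underscores in option names as equivalent.
    return {key.replace("-", "_"): value for key, value in parser.items("mysqld")}


def missing_options(ini_text):
    """List the required settings that the [mysqld] section does not set."""
    found = mysqld_options(ini_text)
    return [name for name in REQUIRED if name not in found]


sample = """
[mysqld]
max_allowed_packet=500M
wait_timeout=288000
"""
print(missing_options(sample))  # ['interactive_timeout']
```

To check a real file, read its contents with open(...).read() and pass the text to missing_options.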

Method 2 (taken from online sources, not yet verified):

In Navicat, open Tools in the menu and select Server Monitor. Tick the server in the left column, click Variables in the right column, find max_allowed_packet, and increase its value.

In the Chinese version: in the menu, Tools -> Server Monitor -> tick the box in front of the database list on the left -> find max_allowed_packet under Variables on the right and increase the value, for example to 999999999.

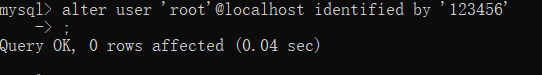

Making MySQL case insensitive:

On Linux, MySQL table names are case sensitive by default. You can make them case insensitive with the following settings:

1. Log in as root and edit /etc/my.cnf

2. Under the [mysqld] section, add a line: lower_case_table_names=1

3. Restart MySQL.

The lower_case_table_names parameter defaults to 1 on Windows and 0 on Unix. The problem therefore never shows up on Windows and only appears once the database is moved to Linux. It is especially puzzling when a table name is written with different letter case at creation time and in a query, because the query then fails with a "table doesn't exist" error. Note that in MySQL 8.0 this setting cannot be changed after the data directory has been initialized.
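The effect of the setting on name lookup can be illustrated with a toy model. This Python sketch only mimics the comparison rule and does not talk to MySQL. The function resolve_table and the table name user_info are hypothetical.

```python
def resolve_table(existing_tables, requested, lower_case_table_names):
    """Return the stored table name a query would hit, or None if not found."""
    if lower_case_table_names == 0:
        # Case-sensitive comparison: the name must match exactly.
        return requested if requested in existing_tables else None
    # Otherwise both sides are folded to lowercase before comparing.
    folded = {name.lower(): name for name in existing_tables}
    return folded.get(requested.lower())


tables = ["user_info"]
print(resolve_table(tables, "USER_INFO", 0))  # None
print(resolve_table(tables, "USER_INFO", 1))  # user_info
```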

View the maximum length of the communication buffer:

show global variables like 'max_allowed_packet';

Here the value is 1M (the default depends on the MySQL version). You can raise the maximum length of the communication buffer to 16M:

set global max_allowed_packet = 1024*1024*16;

Query again with the same statement to confirm the new value.

Next, import again. Import succeeded!
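To judge in advance whether an import file is likely to hit the limit, one rough approach is to measure its longest line. This Python sketch assumes each SQL statement sits on a single line, as is typical for dump files. It ignores protocol overhead, so treat the result as an estimate. The function names and the demo file are made up for illustration.

```python
import os
import tempfile


def longest_line_bytes(path):
    """Return the size in bytes of the longest line in the file."""
    longest = 0
    with open(path, "rb") as handle:
        for line in handle:
            longest = max(longest, len(line))
    return longest


def exceeds_packet_limit(path, max_allowed_packet):
    """True if the longest line is larger than the given limit in bytes."""
    return longest_line_bytes(path) > max_allowed_packet


# Build a small demo dump so the example is self-contained.
with tempfile.NamedTemporaryFile("wb", suffix=".sql", delete=False) as tmp:
    tmp.write(b"CREATE TABLE t (id INT);\n")
    tmp.write(b"INSERT INTO t VALUES " + b"(1)," * 1000 + b"(1);\n")
    demo_path = tmp.name

print(exceeds_packet_limit(demo_path, 1024))            # True: a 1 KB limit is too small
print(exceeds_packet_limit(demo_path, 16 * 1024 ** 2))  # False: 16M is enough
os.remove(demo_path)
```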

Note: this change only applies to the currently running server. If MySQL is restarted, the original value is restored. To make the change permanent, add or modify the following setting in my.ini (on Windows) or my.cnf (on Linux):

max_allowed_packet = 16M

Then restart the MySQL service.
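The configuration file accepts suffixed sizes such as 16M, while the variable is reported as a plain byte count. The short Python sketch below shows how the two forms relate. The helper to_bytes is hypothetical, and MAX_PACKET reflects the documented 1G upper limit for max_allowed_packet.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}
MAX_PACKET = 1024 ** 3  # upper limit for max_allowed_packet (1G)


def to_bytes(value):
    """Convert a size such as '16M' or '1024' to a number of bytes."""
    text = str(value).strip().upper()
    if text and text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


print(to_bytes("16M"))                      # 16777216
print(to_bytes("16M") == 1024 * 1024 * 16)  # True
print(to_bytes("500M") <= MAX_PACKET)       # True
```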

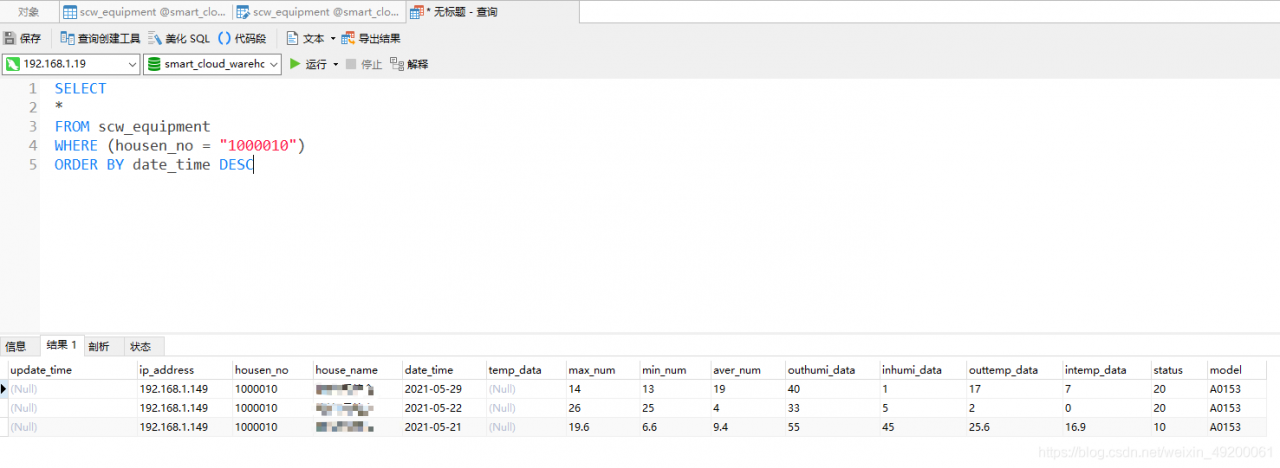

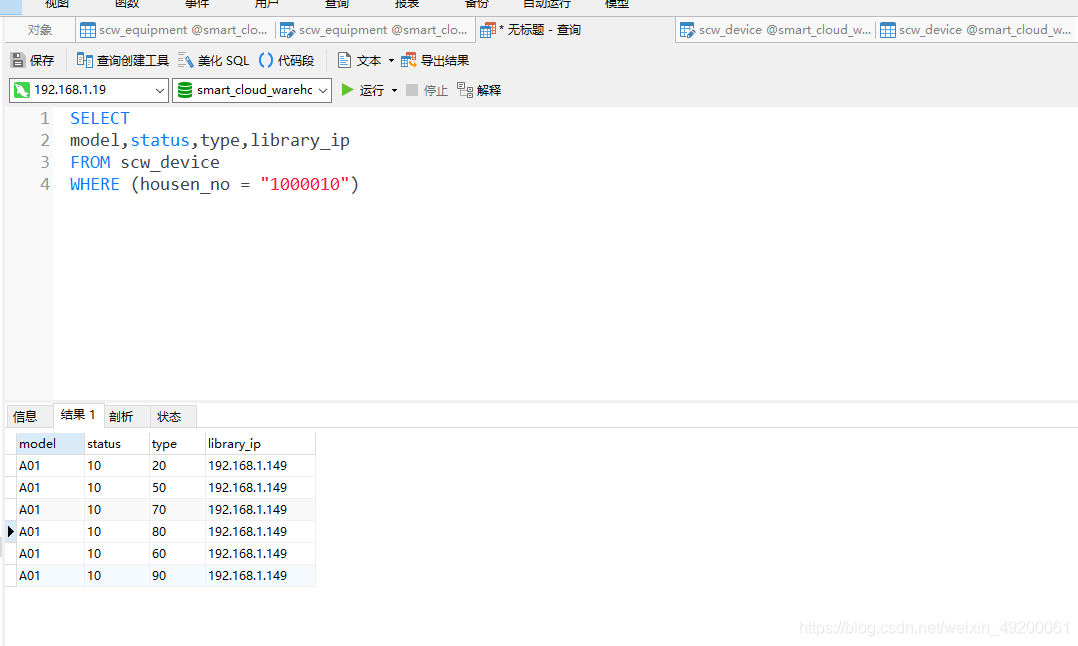

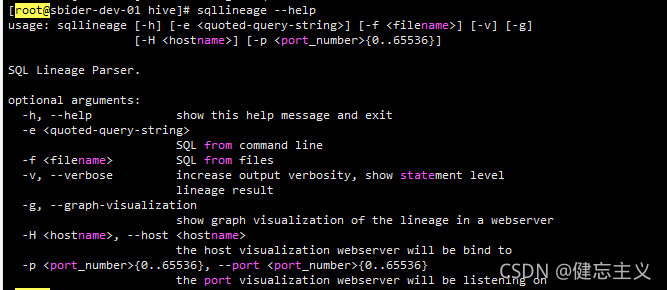

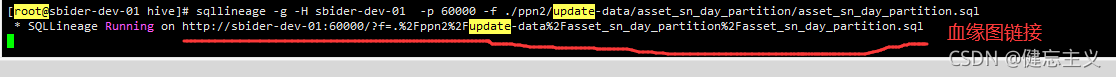

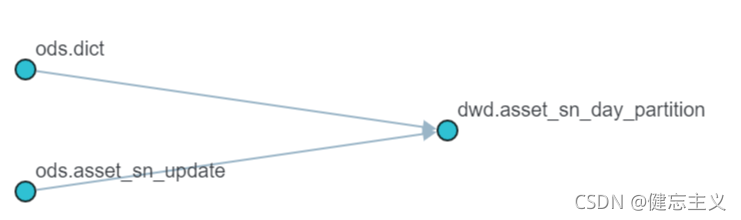

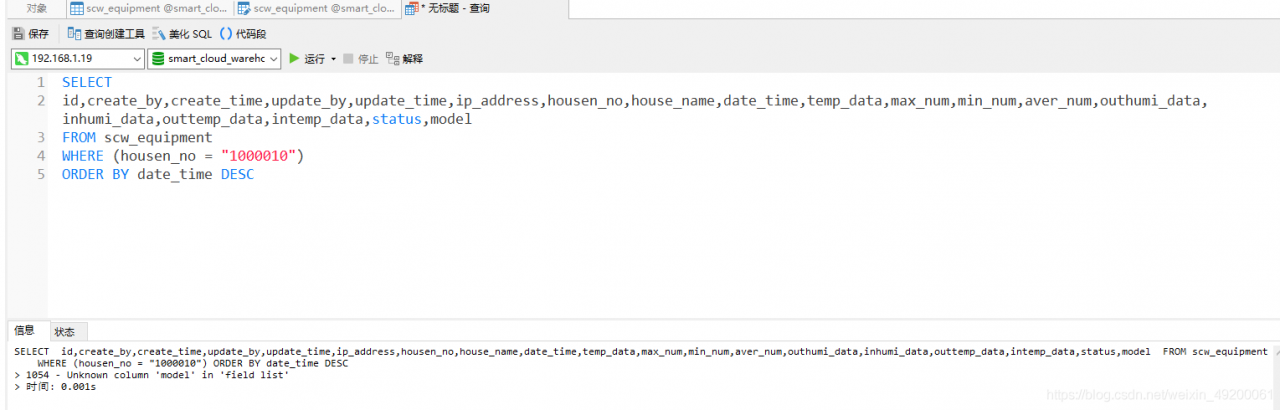

with the SQL statement executed by the system
