Error report:

---------------------------------------------------------------------------

Py4JJavaError Traceback (most recent call last)

<ipython-input-24-bafca16b0526> in <module>

8 return jobitem, ratingsRDD

9 jobitem, jobRDD = preparJobdata(sc)

---> 10 jobRDD.collect()

G:\Projects\python-3.6.4-amd64\lib\site-packages\pyspark\rdd.py in collect(self)

947 """

948 with SCCallSiteSync(self.context) as css:

--> 949 sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())

950 return list(_load_from_socket(sock_info, self._jrdd_deserializer))

951

G:\Projects\python-3.6.4-amd64\lib\site-packages\py4j\java_gateway.py in __call__(self, *args)

1303 answer = self.gateway_client.send_command(command)

1304 return_value = get_return_value(

-> 1305 answer, self.gateway_client, self.target_id, self.name)

1306

1307 for temp_arg in temp_args:

G:\Projects\python-3.6.4-amd64\lib\site-packages\py4j\protocol.py in get_return_value(answer, gateway_client, target_id, name)

326 raise Py4JJavaError(

327 "An error occurred while calling {0}{1}{2}.\n".

--> 328 format(target_id, ".", name), value)

329 else:

330 raise Py4JError(

Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.

: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0) (192.168.101.68 executor driver): org.apache.spark.SparkException: Python worker failed to connect back.

at org.apache.spark.api.python.PythonWorkerFactory.createSimpleWorker(PythonWorkerFactory.scala:182)

at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:107)

at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:119)

at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:145)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:497)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1439)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:500)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.SocketTimeoutException: Accept timed out

at java.net.DualStackPlainSocketImpl.waitForNewConnection(Native Method)

at java.net.DualStackPlainSocketImpl.socketAccept(DualStackPlainSocketImpl.java:135)

at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:409)

at java.net.PlainSocketImpl.accept(PlainSocketImpl.java:199)

at java.net.ServerSocket.implAccept(ServerSocket.java:545)

at java.net.ServerSocket.accept(ServerSocket.java:513)

at org.apache.spark.api.python.PythonWorkerFactory.createSimpleWorker(PythonWorkerFactory.scala:174)

... 14 more

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2253)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:2202)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:2201)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:2201)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:1078)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:1078)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:1078)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2440)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2382)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2371)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:868)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2202)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2223)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2242)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2267)

at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1030)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:414)

at org.apache.spark.rdd.RDD.collect(RDD.scala:1029)

at org.apache.spark.api.python.PythonRDD$.collectAndServe(PythonRDD.scala:180)

at org.apache.spark.api.python.PythonRDD.collectAndServe(PythonRDD.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.spark.SparkException: Python worker failed to connect back.

at org.apache.spark.api.python.PythonWorkerFactory.createSimpleWorker(PythonWorkerFactory.scala:182)

at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:107)

at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:119)

at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:145)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:497)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1439)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:500)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

... 1 more

Caused by: java.net.SocketTimeoutException: Accept timed out

at java.net.DualStackPlainSocketImpl.waitForNewConnection(Native Method)

at java.net.DualStackPlainSocketImpl.socketAccept(DualStackPlainSocketImpl.java:135)

at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:409)

at java.net.PlainSocketImpl.accept(PlainSocketImpl.java:199)

at java.net.ServerSocket.implAccept(ServerSocket.java:545)

at java.net.ServerSocket.accept(ServerSocket.java:513)

at org.apache.spark.api.python.PythonWorkerFactory.createSimpleWorker(PythonWorkerFactory.scala:174)

... 14 more

Solution:

Configure the following environment variables:

# Hadoop environment variable on Windows

HADOOP_HOME = F:\hadoop-common-2.2.0-bin-master\hadoop-common-2.2.0-bin-master

# JDK environment variable on Windows

JAVA_HOME = F:\jdk-8u121-windows-x64_8.0.1210.13

# PySpark environment variables on Windows

PYSPARK_DRIVER_PYTHON = jupyter

PYSPARK_DRIVER_PYTHON_OPTS = notebook

PYSPARK_PYTHON = python

Remember to restart the computer after the configuration is completed!
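
As a quick check from inside the notebook, the settings above can be inspected before creating the SparkContext. The following is only a minimal sketch: the helper names `missing_spark_vars` and `use_current_interpreter` are hypothetical, and pointing `PYSPARK_PYTHON` at `sys.executable` is an assumption about a workable alternative to the value `python` used above, not part of the original fix.

```python
import os
import sys

# Variables from the configuration above that the worker launch depends on.
REQUIRED_VARS = ("HADOOP_HOME", "JAVA_HOME", "PYSPARK_PYTHON")


def missing_spark_vars(environ=os.environ):
    """Return the names from REQUIRED_VARS that are unset or empty."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


def use_current_interpreter(environ=os.environ):
    """Point PySpark workers at the interpreter running this code.

    Assumption: this only affects a SparkContext created afterwards
    in the same process.
    """
    environ["PYSPARK_PYTHON"] = sys.executable
    return environ["PYSPARK_PYTHON"]
```

Calling `missing_spark_vars()` in a notebook cell lists whichever of the three variables the current process cannot see, which can help confirm whether the restart actually picked up the new settings.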

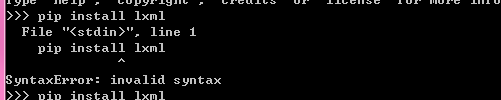

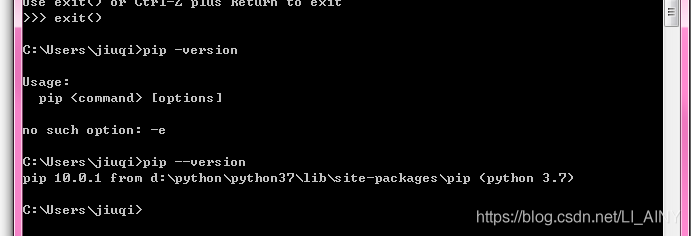

So I installed the package directly with pip in CMD.
