After restarting the server, nvidia-smi can no longer connect to the NVIDIA driver. TensorFlow still runs at this point, but only on the CPU. Trying to install the GPU version of TensorFlow again only reports that it is already installed.

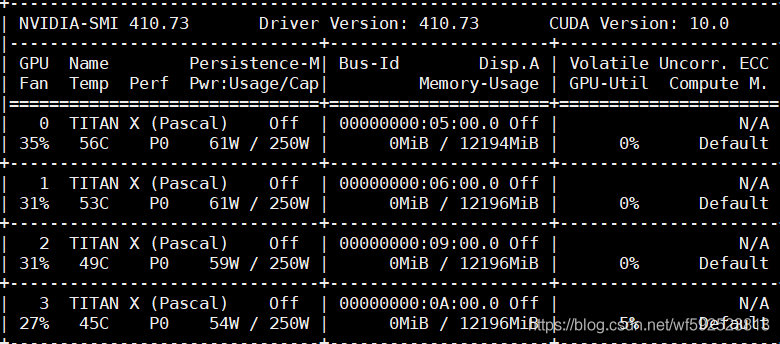

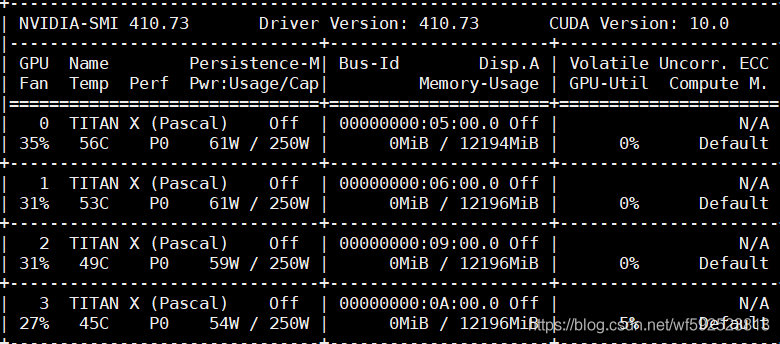

First, enter nvidia-smi at the terminal.

The following message appears: NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Next, enter nvcc -V in the terminal. The CUDA compiler driver is still present:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2016 NVIDIA Corporation

Built on Tue_Jan_10_13:22:03_CST_2017

Cuda compilation tools, release 8.0, V8.0.61

The solution takes only two steps and does not require a restart.

Step 1: sudo apt-get install dkms

Step 2: sudo dkms install -m nvidia -v 410.73

Enter nvidia-smi again and the output is back to normal.
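The before-and-after check with nvidia-smi can also be scripted. The following is a minimal sketch, not part of the original fix. It assumes that nvidia-smi exits with a nonzero status when it cannot reach the driver, and the helper name command_succeeds is made up for illustration.

```python
import subprocess


def command_succeeds(command):
    """Return True if the command runs and exits with status 0.

    `command` is a list such as ["nvidia-smi"]. A missing executable
    counts as a failure rather than raising an exception.
    """
    try:
        result = subprocess.run(
            command, stdout=subprocess.PIPE, stderr=subprocess.PIPE
        )
    except FileNotFoundError:
        return False
    return result.returncode == 0


if __name__ == "__main__":
    # Assumption: a nonzero exit status means the driver is unreachable.
    if command_succeeds(["nvidia-smi"]):
        print("nvidia-smi can reach the NVIDIA driver")
    else:
        print("nvidia-smi failed; the driver module may need to be rebuilt with dkms")
```

Running it before and after step 2 should show the message change if the dkms rebuild fixed the problem.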

Here, 410.73 in step 2 is the version number of the NVIDIA driver. If you do not know the version number, go to the directory /usr/src. It contains a folder for NVIDIA, and the suffix of the folder name is the version number.

cd /usr/src
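Reading the version off the folder name can be automated. This is a rough sketch that assumes the folder is named nvidia-<version> (for example nvidia-410.73). The function name find_nvidia_versions is hypothetical and not part of any NVIDIA tool.

```python
import os
import re


def find_nvidia_versions(src_dir="/usr/src"):
    """Return the version suffixes of nvidia-<version> folders in src_dir."""
    # Assumption: the driver source folder is named like "nvidia-410.73".
    pattern = re.compile(r"^nvidia-(\d+(?:\.\d+)*)$")
    versions = []
    if not os.path.isdir(src_dir):
        return versions
    for name in sorted(os.listdir(src_dir)):
        match = pattern.match(name)
        if match and os.path.isdir(os.path.join(src_dir, name)):
            versions.append(match.group(1))
    return versions


if __name__ == "__main__":
    # Print the command from step 2 for each version that was found.
    for version in find_nvidia_versions():
        print("sudo dkms install -m nvidia -v " + version)
```

The script only prints the command so it can be checked before it is run by hand.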

In addition, here is how to check whether TensorFlow is running as the GPU version or the CPU version:

from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())
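The printed device list can be long, so it may help to keep only the GPU entries. The sketch below relies on each entry returned by list_local_devices() having name and device_type fields. The helper gpu_device_names is an illustrative name, not a TensorFlow function.

```python
def gpu_device_names(devices):
    """Return the names of all devices whose device_type is "GPU"."""
    return [d.name for d in devices if d.device_type == "GPU"]


if __name__ == "__main__":
    try:
        from tensorflow.python.client import device_lib
    except ImportError:
        print("TensorFlow is not installed in this environment")
    else:
        names = gpu_device_names(device_lib.list_local_devices())
        if names:
            print("GPU devices visible to TensorFlow:", names)
        else:
            print("No GPU visible; TensorFlow is running on the CPU only")
```

An empty result matches the situation described at the start: TensorFlow still works, but only on the CPU.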

https://blog.csdn.net/hangzuxi8764/article/details/86572093