1 Error description

1.1 System Environment

Hardware Environment (Ascend/GPU/CPU): GPU

Software Environment:

– MindSpore version (source or binary): 1.5.2

– Python version (e.g., Python 3.7.5): 3.7.6

– OS platform and distribution (e.g., Linux Ubuntu 16.04): Ubuntu 4.15.0-74-generic

– GCC/Compiler version (if compiled from source):

1.2 Basic information

1.2.1 Script

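The script normalizes a Tensor with a single-operator network built around ops.BatchNorm. The listing below omits the import statements (np, mindspore, Tensor, nn and ops), which is why the traceback reports a different line number than the listing: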

01 class Net(nn.Cell):

02     def __init__(self):

03         super(Net, self).__init__()

04         self.batch_norm = ops.BatchNorm()

05     def construct(self, input_x, scale, bias, mean, variance):

06         output = self.batch_norm(input_x, scale, bias, mean, variance)

07         return output

08

09 net = Net()

10 input_x = Tensor(np.ones([2, 2]), mindspore.float16)

11 scale = Tensor(np.ones([2]), mindspore.float16)

12 bias = Tensor(np.ones([2]), mindspore.float16)

13 mean = Tensor(np.ones([2]), mindspore.float16)

14 variance = Tensor(np.ones([2]), mindspore.float16)

15

16 output = net(input_x, scale, bias, mean, variance)

17 print(output)

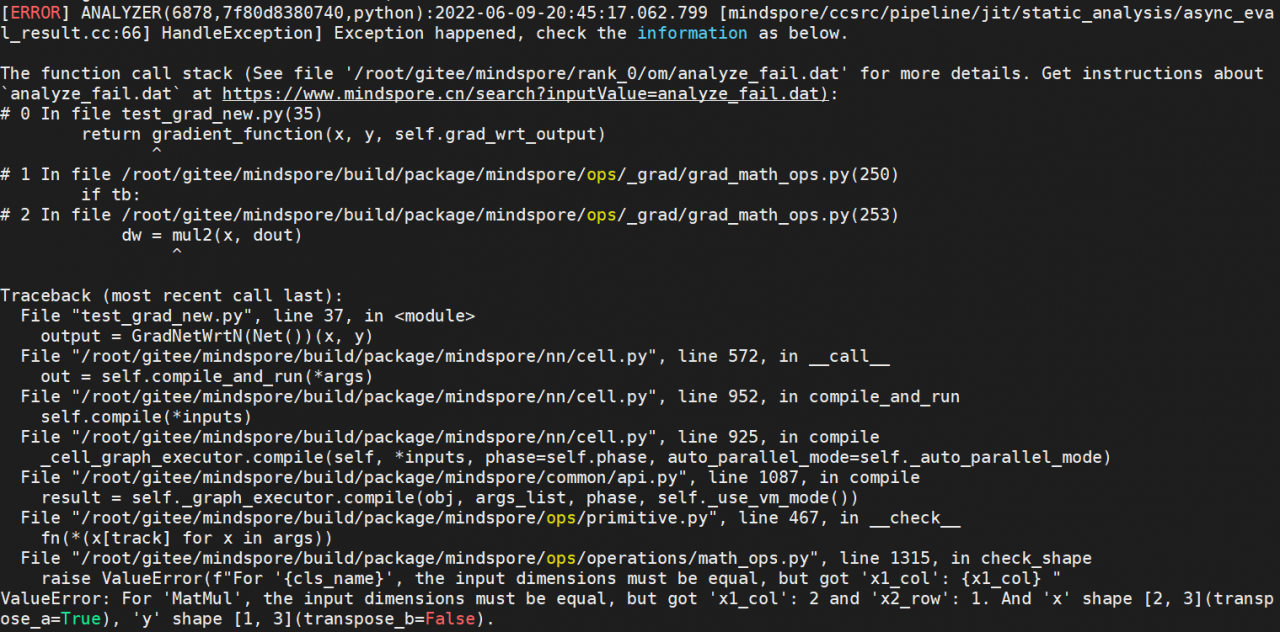

1.2.2 Error reporting

Running the script fails with the following error:

Traceback (most recent call last):

  File "116945.py", line 22, in <module>

    output = net(input_x, scale, bias, mean, variance)

  File "/data2/llj/mindspores/r1.5/build/package/mindspore/nn/cell.py", line 407, in __call__

    out = self.compile_and_run(*inputs)

  File "/data2/llj/mindspores/r1.5/build/package/mindspore/nn/cell.py", line 734, in compile_and_run

    self.compile(*inputs)

  File "/data2/llj/mindspores/r1.5/build/package/mindspore/nn/cell.py", line 721, in compile

    _cell_graph_executor.compile(self, *inputs, phase=self.phase, auto_parallel_mode=self._auto_parallel_mode)

  File "/data2/llj/mindspores/r1.5/build/package/mindspore/common/api.py", line 551, in compile

    result = self._graph_executor.compile(obj, args_list, phase, use_vm, self.queue_name)

TypeError: mindspore/ccsrc/runtime/device/gpu/kernel_info_setter.cc:355 PrintUnsupportedTypeException] Select GPU kernel op[BatchNorm] fail! Incompatible data type!

The supported data types are in[float32 float32 float32 float32 float32], out[float32 float32 float32 float32 float32]; in[float16 float32 float32 float32 float32], out[float16 float32 float32 float32 float32]; , but get in [float16 float16 float16 float16 float16 ] out [float16 float16 float16 float16 float16 ]

1.3 Cause analysis

The TypeError reads: Select GPU kernel op[BatchNorm] fail! Incompatible data type!

The supported data types are in[float32 float32 float32 float32 float32], out[float32 float32 float32 float32 float32]; in[float16 float32 float32 float32 float32], out[float16 float32 float32 float32 float32]; , but get in [float16 float16 float16 float16 float16 ] out [float16 float16 float16 float16 float16 ]

In other words, the BatchNorm kernel on GPU does not support the combination of input data types that the script passes. The message also lists the combinations that are supported: either all five inputs are float32, or input_x is float16 and the other four inputs (scale, bias, mean, variance) are float32. The script creates all five inputs as float16, which matches neither combination, so kernel selection fails.
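The comparison described above can be pictured with a minimal sketch. This is plain Python for illustration, not MindSpore's kernel-selection code: the names SUPPORTED_INPUT_DTYPES and is_supported_combination are hypothetical, and the two tuples are copied from the error message.

```python
# Illustrative sketch only: not MindSpore's actual kernel-selection code.
# The two supported combinations are copied from the error message;
# the order is (input_x, scale, bias, mean, variance).
SUPPORTED_INPUT_DTYPES = [
    ("float32", "float32", "float32", "float32", "float32"),
    ("float16", "float32", "float32", "float32", "float32"),
]


def is_supported_combination(dtypes):
    """Return True if the dtype sequence matches a supported combination."""
    return tuple(dtypes) in SUPPORTED_INPUT_DTYPES


failing = ["float16"] * 5               # what the failing script passes
fixed = ["float16"] + ["float32"] * 4   # only input_x stays float16

print(is_supported_combination(failing))  # False
print(is_supported_combination(fixed))    # True
```

Only the second call matches an entry in the list, which is the combination the fix needs to produce.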

2 Solutions

Based on the cause above, the fix is to keep input_x as float16 and create scale, bias, mean and variance as float32:

01 class Net(nn.Cell):

02     def __init__(self):

03         super(Net, self).__init__()

04         self.batch_norm = ops.BatchNorm()

05     def construct(self, input_x, scale, bias, mean, variance):

06         output = self.batch_norm(input_x, scale, bias, mean, variance)

07         return output

08

09 net = Net()

10 input_x = Tensor(np.ones([2, 2]), mindspore.float16)

11 scale = Tensor(np.ones([2]), mindspore.float32)

12 bias = Tensor(np.ones([2]), mindspore.float32)

13 mean = Tensor(np.ones([2]), mindspore.float32)

14 variance = Tensor(np.ones([2]), mindspore.float32)

15

16 output = net(input_x, scale, bias, mean, variance)

17 print(output)

At this point, the execution is successful, and the output is as follows:

output: (Tensor(shape=[2, 2], dtype=Float16, value=

[[ 1.0000e+00, 1.0000e+00],

[ 1.0000e+00, 1.0000e+00]]), Tensor(shape=[2], dtype=Float32, value= [ 0.00000000e+00, 0.00000000e+00]), Tensor(shape=[2], dtype=Float32, value= [ 0.00000000e+00, 0.00000000e+00]), Tensor(shape=[2], dtype=Float32, value= [ 0.00000000e+00, 0.00000000e+00]), Tensor(shape=[2], dtype=Float32, value= [ 0.00000000e+00, 0.00000000e+00]))
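To see why the first output tensor is all ones, the inference-mode normalization can be worked through by hand. The sketch below is plain Python, not MindSpore code. It assumes the operator runs in inference mode with epsilon = 1e-5 (the script does not set either option, so these are taken to be the defaults) and computes scale * (x - mean) / sqrt(variance + epsilon) + bias per channel. The function name batch_norm_inference is hypothetical.

```python
import math

EPSILON = 1e-5  # assumption: the default epsilon of ops.BatchNorm


def batch_norm_inference(x, scale, bias, mean, variance, epsilon=EPSILON):
    """Normalize a 2-D list of shape [N, C]; each column is one channel."""
    return [
        [
            scale[c] * (value - mean[c]) / math.sqrt(variance[c] + epsilon) + bias[c]
            for c, value in enumerate(row)
        ]
        for row in x
    ]


ones = [1.0, 1.0]
result = batch_norm_inference([[1.0, 1.0], [1.0, 1.0]], ones, ones, ones, ones)
print(result)  # [[1.0, 1.0], [1.0, 1.0]]
```

Because every input equals its channel mean, the normalized term is zero and only the bias of 1 remains, which agrees with the first tensor printed above.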

3 Summary

Steps to locate the error report:

1. Find the line of user code that raises the error: line 16, output = net(input_x, scale, bias, mean, variance).

2. Use the keywords in the error message to narrow down the problem: The supported data types are in[float32 float32 float32 float32 float32], out[float32 float32 float32 float32 float32]; in[float16 float32 float32 float32 float32], out[float16 float32 float32 float32 float32]; , but get in [float16 float16 float16 float16 float16 ] out [float16 float16 float16 float16 float16 ]

3. Check that the variables passed on that line are defined and initialized correctly, in particular the data type of each Tensor.
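Step 2 can also be done mechanically. The following is a minimal sketch that assumes the message keeps the exact "in[...]" / "but get in [...]" layout shown in this log. The helper names parse_message and mismatches are hypothetical, and the input order (input_x, scale, bias, mean, variance) is taken from the script.

```python
import re

# Error text copied from the log (supported list and received types).
MESSAGE = (
    "The supported data types are "
    "in[float32 float32 float32 float32 float32], "
    "out[float32 float32 float32 float32 float32]; "
    "in[float16 float32 float32 float32 float32], "
    "out[float16 float32 float32 float32 float32]; , "
    "but get in [float16 float16 float16 float16 float16 ] "
    "out [float16 float16 float16 float16 float16 ]"
)

INPUT_NAMES = ["input_x", "scale", "bias", "mean", "variance"]
IN_PATTERN = re.compile(r"\bin\s*\[([^\]]*)\]")


def parse_message(message):
    """Split the message into supported input combinations and received types."""
    supported_part, received_part = message.split("but get")
    supported = [group.split() for group in IN_PATTERN.findall(supported_part)]
    received = IN_PATTERN.search(received_part).group(1).split()
    return supported, received


def mismatches(supported_combo, received):
    """List (input name, received dtype, expected dtype) for each differing input."""
    return [
        (name, got, want)
        for name, got, want in zip(INPUT_NAMES, received, supported_combo)
        if got != want
    ]


supported, received = parse_message(MESSAGE)
for combo in supported:
    # Show which inputs would have to change to reach this combination.
    print(combo, "->", mismatches(combo, received))
```

For the second supported combination, the mismatches are scale, bias, mean and variance, which are the four Tensors that the fix changes to float32.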