1. Elasticsearch 7.2.1 startup error: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured.

```
[elsearch@slaver2 elasticsearch-7.2.1]$ ./bin/elasticsearch
future versions of Elasticsearch will require Java 11; your Java version from [/usr/local/soft/jdk1.8.0_281/jre] does not meet this requirement
[2021-03-23T15:13:43,592][INFO ][o.e.e.NodeEnvironment ] [slaver2] using [1] data paths, mounts [[/ (/dev/mapper/centos-root)]], net usable_space [1.1gb], net total_space [9.9gb], types [xfs]
[2021-03-23T15:13:43,599][INFO ][o.e.e.NodeEnvironment ] [slaver2] heap size [990.7mb], compressed ordinary object pointers [true]
[2021-03-23T15:13:43,605][INFO ][o.e.n.Node ] [slaver2] node name [slaver2], node ID [FsI1qieBQ5Kn4MYh001oHQ], cluster name [elasticsearch]
[2021-03-23T15:13:43,607][INFO ][o.e.n.Node ] [slaver2] version[7.2.1], pid[10143], build[default/tar/fe6cb20/2019-07-24T17:58:29.979462Z], OS[Linux/3.10.0-1160.el7.x86_64/amd64], JVM[Oracle Corporation/Java HotSpot(TM) 64-Bit Server VM/1.8.0_281/25.281-b09]
[2021-03-23T15:13:43,610][INFO ][o.e.n.Node ] [slaver2] JVM home [/usr/local/soft/jdk1.8.0_281/jre]
[2021-03-23T15:13:43,612][INFO ][o.e.n.Node ] [slaver2] JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Des.networkaddress.cache.ttl=60, -Des.networkaddress.cache.negative.ttl=10, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch-6519446121284753262, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -XX:+PrintGCDetails, -XX:+PrintGCDateStamps, -XX:+PrintTenuringDistribution, -XX:+PrintGCApplicationStoppedTime, -Xloggc:logs/gc.log, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles=32, -XX:GCLogFileSize=64m, -Dio.netty.allocator.type=unpooled, -XX:MaxDirectMemorySize=536870912, -Des.path.home=/usr/local/soft/elasticsearch-7.2.1, -Des.path.conf=/usr/local/soft/elasticsearch-7.2.1/config, -Des.distribution.flavor=default, -Des.distribution.type=tar, -Des.bundled_jdk=true]
[2021-03-23T15:13:49,428][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [aggs-matrix-stats]
[2021-03-23T15:13:49,429][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [analysis-common]
[2021-03-23T15:13:49,431][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [data-frame]
[2021-03-23T15:13:49,433][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [ingest-common]
[2021-03-23T15:13:49,434][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [ingest-geoip]
[2021-03-23T15:13:49,435][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [ingest-user-agent]
[2021-03-23T15:13:49,435][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [lang-expression]
[2021-03-23T15:13:49,436][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [lang-mustache]
[2021-03-23T15:13:49,438][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [lang-painless]
[2021-03-23T15:13:49,439][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [mapper-extras]
[2021-03-23T15:13:49,441][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [parent-join]
[2021-03-23T15:13:49,443][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [percolator]
[2021-03-23T15:13:49,445][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [rank-eval]
[2021-03-23T15:13:49,446][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [reindex]
[2021-03-23T15:13:49,447][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [repository-url]
[2021-03-23T15:13:49,448][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [transport-netty4]
[2021-03-23T15:13:49,448][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-ccr]
[2021-03-23T15:13:49,448][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-core]
[2021-03-23T15:13:49,449][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-deprecation]
[2021-03-23T15:13:49,449][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-graph]
[2021-03-23T15:13:49,449][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-ilm]
[2021-03-23T15:13:49,450][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-logstash]
[2021-03-23T15:13:49,450][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-ml]
[2021-03-23T15:13:49,450][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-monitoring]
[2021-03-23T15:13:49,451][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-rollup]
[2021-03-23T15:13:49,451][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-security]
[2021-03-23T15:13:49,452][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-sql]
[2021-03-23T15:13:49,456][INFO ][o.e.p.PluginsService ] [slaver2] loaded module [x-pack-watcher]
[2021-03-23T15:13:49,460][INFO ][o.e.p.PluginsService ] [slaver2] no plugins loaded
[2021-03-23T15:13:59,813][INFO ][o.e.x.s.a.s.FileRolesStore] [slaver2] parsed [0] roles from file [/usr/local/soft/elasticsearch-7.2.1/config/roles.yml]
[2021-03-23T15:14:01,757][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [slaver2] [controller/10234] [Main.cc@110] controller (64 bit): Version 7.2.1 (Build 4ad685337be7fd) Copyright (c) 2019 Elasticsearch BV
[2021-03-23T15:14:03,624][DEBUG][o.e.a.ActionModule ] [slaver2] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security
[2021-03-23T15:14:05,122][INFO ][o.e.d.DiscoveryModule ] [slaver2] using discovery type [zen] and seed hosts providers [settings]
[2021-03-23T15:14:09,123][INFO ][o.e.n.Node ] [slaver2] initialized
[2021-03-23T15:14:09,125][INFO ][o.e.n.Node ] [slaver2] starting ...
[2021-03-23T15:14:09,472][INFO ][o.e.t.TransportService ] [slaver2] publish_address {192.168.110.135:9300}, bound_addresses {192.168.110.135:9300}
[2021-03-23T15:14:09,504][INFO ][o.e.b.BootstrapChecks ] [slaver2] bound or publishing to a non-loopback address, enforcing bootstrap checks
ERROR: [1] bootstrap checks failed
[1]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured
[2021-03-23T15:14:09,550][INFO ][o.e.n.Node ] [slaver2] stopping ...
[2021-03-23T15:14:09,627][INFO ][o.e.n.Node ] [slaver2] stopped
[2021-03-23T15:14:09,629][INFO ][o.e.n.Node ] [slaver2] closing ...
[2021-03-23T15:14:09,681][INFO ][o.e.n.Node ] [slaver2] closed
[2021-03-23T15:14:09,690][INFO ][o.e.x.m.p.NativeController] [slaver2] Native controller process has stopped - no new native processes can be started
```
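Two log lines explain the failure together. The node publishes to 192.168.110.135, which is not a loopback address, so bootstrap checks are enforced. The discovery check then fails because none of the three settings is present. The sketch below models that decision in Python. It is a simplification and is not Elasticsearch's own code:

- It only looks at the publish address. The real node also considers bound addresses and the `es.enforce.bootstrap.checks` system property.
- It ignores legacy zen settings.
- The `settings` dict in the example is hypothetical.

```python
import ipaddress

# The three settings named in the bootstrap-check error message.
DISCOVERY_SETTINGS = (
    "discovery.seed_hosts",
    "discovery.seed_providers",
    "cluster.initial_master_nodes",
)


def bootstrap_checks_enforced(publish_address: str) -> bool:
    """True when the node publishes to a non-loopback address.

    Simplification: the real node also looks at its bound addresses and at
    the es.enforce.bootstrap.checks system property.
    """
    return not ipaddress.ip_address(publish_address).is_loopback


def discovery_check_fails(settings: dict) -> bool:
    """Simplified model of the discovery bootstrap check."""
    if settings.get("discovery.type", "zen") != "zen":
        # e.g. discovery.type: single-node -> this check does not apply
        return False
    # Fails only if none of the three settings is present.
    return not any(name in settings for name in DISCOVERY_SETTINGS)


if __name__ == "__main__":
    # Hypothetical settings: nothing discovery-related is configured and the
    # node publishes to 192.168.110.135, as in the log above.
    settings = {"network.host": "192.168.110.135"}
    if bootstrap_checks_enforced("192.168.110.135") and discovery_check_fails(settings):
        print("[1]: at least one of", ", ".join(DISCOVERY_SETTINGS), "must be configured")
```

In this model, adding any one of the three keys makes `discovery_check_fails` return `False`. The fix below relies on exactly that.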

Solution:

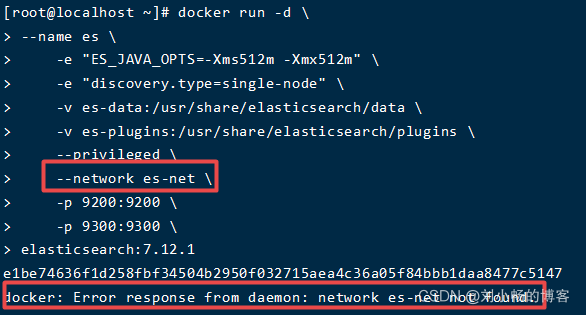

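The node binds to a non-loopback address (192.168.110.135), so Elasticsearch enforces its bootstrap checks. The discovery check then requires at least one of the three settings above. In the Elasticsearch `config` directory, edit `elasticsearch.yml` and add the following: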

```yaml
# Replace host1, host2, etc. with real IP addresses. For a multi-node cluster,
# list one address per node; a single node uses the same format with one entry.
# Configure at least one of the following three settings:
# [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes]
# Entries may be node names (node.name) or IP addresses:
#cluster.initial_master_nodes: ["node-1", "node-2"]
cluster.initial_master_nodes: ["192.168.110.135"]
```
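Before restarting, you can confirm that the file actually sets one of the three keys and does not merely contain a commented-out line like `#cluster.initial_master_nodes`. The helper below is a minimal Python sketch of such a check. It makes these assumptions:

- Keys are written flat and dotted, one per line, as in the stock `elasticsearch.yml`. Nested YAML mappings are not handled.
- When no path is given, the file is taken to be `config/elasticsearch.yml` under the Elasticsearch home directory.
- The function name is hypothetical.

```python
import sys
from pathlib import Path

# The three settings named in the bootstrap-check error message.
DISCOVERY_SETTINGS = {
    "discovery.seed_hosts",
    "discovery.seed_providers",
    "cluster.initial_master_nodes",
}


def configured_discovery_settings(yml_text: str) -> set:
    """Return the discovery settings that are set (not commented out).

    Assumes flat, dotted keys one per line; nested YAML is NOT handled.
    """
    found = set()
    for line in yml_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # blank line or comment, e.g. #cluster.initial_master_nodes: [...]
        key, sep, _value = stripped.partition(":")
        if sep and key.strip() in DISCOVERY_SETTINGS:
            found.add(key.strip())
    return found


if __name__ == "__main__":
    # Assumed default location, relative to the Elasticsearch home directory.
    path = Path(sys.argv[1] if len(sys.argv) > 1 else "config/elasticsearch.yml")
    if not path.is_file():
        print(f"{path} not found")
    else:
        found = configured_discovery_settings(path.read_text(encoding="utf-8"))
        if found:
            print("discovery configured via:", ", ".join(sorted(found)))
        else:
            print("none of", ", ".join(sorted(DISCOVERY_SETTINGS)), "is set")
```

For the installation in the log, you could run the script with the config path as its argument, for example `python3 check_discovery.py /usr/local/soft/elasticsearch-7.2.1/config/elasticsearch.yml`. The script name is illustrative.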