Environment

Server: CentOS 7

Docker: 20.10.12

kubeadm: v1.23.1

Kubernetes: v1.23.1

Problem description

After Docker and the Kubernetes components are installed, running kubeadm init to initialize the master node fails.

The command that was run:

kubeadm init \
  --apiserver-advertise-address=Server_IP \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.23.1 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12

Error message

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

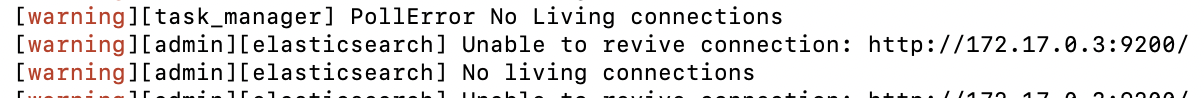

As the hint printed after the error suggests, run journalctl -xeu kubelet (or journalctl -xeu kubelet -l to show full lines) to see the detailed error. If lines are cut off in the pager, use the left and right arrow keys to scroll horizontally.

The output looks like this:

[root@k8s-node01 ~]# journalctl -xeu kubelet

Dec 24 20:24:13 k8s-node01 kubelet[9127]: I1224 20:24:13.456712 9127 cni.go:240] "Unable to update cni config" err="no

Dec 24 20:24:13 k8s-node01 kubelet[9127]: I1224 20:24:13.476156 9127 docker_service.go:264] "Docker Info" dockerInfo=&{

Dec 24 20:24:13 k8s-node01 kubelet[9127]: E1224 20:24:13.476236 9127 server.go:302] "Failed to run kubelet" err="failed

Dec 24 20:24:13 k8s-node01 systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

Dec 24 20:24:13 k8s-node01 systemd[1]: Unit kubelet.service entered failed state.

Dec 24 20:24:13 k8s-node01 systemd[1]: kubelet.service failed.

Press the right arrow key to scroll and read the rest of the truncated lines, in particular the "Docker Info" line and the "Failed to run kubelet" line:

ID:ZYIL:OO24:BWLY:DTTB:TDKT:D3MZ:YGJ4:3ZOU:7DDY:YYPQ:DPWM:ERFV Containers:0 ContainersRunning:0 ContainersPaused:0 Contain

to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \"cgroupfs\"
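Scrolling in the pager works, but the fatal line can also be pulled out directly. The following is a minimal sketch, assuming a systemd host; the helper name kubelet_failures is made up for illustration, and the live call only runs if journalctl is available.

```shell
#!/bin/sh
# Keep only the kubelet's fatal startup lines from journal text on stdin.
# (kubelet_failures is a hypothetical helper name, not part of any tool.)
kubelet_failures() {
    grep -F 'Failed to run kubelet'
}

# Read the kubelet unit's log without the pager, so long lines are not cut off,
# and show the most recent failure. Skipped when journalctl is not installed.
if command -v journalctl >/dev/null 2>&1; then
    journalctl -u kubelet --no-pager 2>/dev/null | kubelet_failures | tail -n 1 || true
fi
```

Because the output is piped rather than shown in the pager, the full "misconfiguration" message should appear on one line.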

Cause of the error

The message above shows the cause: the kubelet and Docker are using different cgroup drivers.

The kubelet is using systemd, while Docker is using cgroupfs.

To confirm, run

docker info

and check the Cgroup Driver field, which shows either systemd or cgroupfs. Docker defaults to cgroupfs here, while kubeadm v1.22 and later configures the kubelet to use systemd unless told otherwise.
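To avoid reading through the whole docker info output, the relevant field can be extracted. This is a small sketch, assuming the field is printed as "Cgroup Driver: <name>" as in Docker 20.10; docker_cgroup_driver is a hypothetical helper name.

```shell
#!/bin/sh
# Print the value of the "Cgroup Driver" field from `docker info` text on stdin.
# (docker_cgroup_driver is a hypothetical helper name.)
docker_cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p' | head -n 1
}

# Run the live check only when the docker CLI is available on this host.
if command -v docker >/dev/null 2>&1; then
    docker info 2>/dev/null | docker_cgroup_driver || true
fi
```

On the host described here this would be expected to print cgroupfs before the fix. The same value is also available via docker info --format '{{.CgroupDriver}}'.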

Solution:

Change Docker's cgroup driver to systemd.

Edit Docker's configuration file, creating it if it does not exist:

vi /etc/docker/daemon.json

Make sure it contains the following entry:

{
  …
  "exec-opts": ["native.cgroupdriver=systemd"]
  …
}
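When the file does not exist yet, it can be created non-interactively instead of with vi. The sketch below is one way to do that, under the assumption that no other Docker settings are needed; write_daemon_json is a hypothetical helper, and it deliberately refuses to overwrite an existing file, since that file may hold other settings that have to be merged by hand.

```shell
#!/bin/sh
# Create a minimal daemon.json that sets the systemd cgroup driver.
# Refuses to touch an existing file, which may contain other settings.
# (write_daemon_json is a hypothetical helper name.)
write_daemon_json() {
    target="$1"
    if [ -e "$target" ]; then
        echo "$target already exists; add the exec-opts entry manually" >&2
        return 1
    fi
    mkdir -p "$(dirname "$target")" || return 1
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Usage (as root): write_daemon_json /etc/docker/daemon.json
```

Nothing is written until the function is called with a path, so the block is safe to source before deciding where the file should go.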

Then restart Docker:

systemctl restart docker

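Then run kubeadm init again. If the earlier failed attempt left files behind and the preflight checks complain, running kubeadm reset first clears them.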
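Before retrying, it can help to confirm that both sides now agree. This is a rough sketch, assuming the earlier kubeadm init attempt already wrote the kubelet configuration to /var/lib/kubelet/config.yaml with a top-level cgroupDriver key; the helper names kubelet_cgroup_driver and drivers_match are invented for illustration.

```shell
#!/bin/sh
# Read the top-level cgroupDriver value from a kubelet config file (YAML).
# (kubelet_cgroup_driver and drivers_match are hypothetical helper names.)
kubelet_cgroup_driver() {
    sed -n 's/^cgroupDriver:[[:space:]]*//p' "$1" | head -n 1
}

# Succeed only when both driver names are non-empty and identical.
drivers_match() {
    [ -n "$1" ] && [ "$1" = "$2" ]
}

# Assumed location of the kubelet config written by kubeadm.
config=/var/lib/kubelet/config.yaml

# Attempt the live comparison only when Docker and the config file are present.
if command -v docker >/dev/null 2>&1 && [ -r "$config" ]; then
    docker_driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null || true)
    kubelet_driver=$(kubelet_cgroup_driver "$config")
    if drivers_match "$docker_driver" "$kubelet_driver"; then
        echo "cgroup drivers match: $docker_driver"
    else
        echo "mismatch: docker=$docker_driver kubelet=$kubelet_driver" >&2
    fi
fi
```

If the drivers match, the kubelet health check on port 10248 should no longer be refused for this reason.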