Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

Problem description

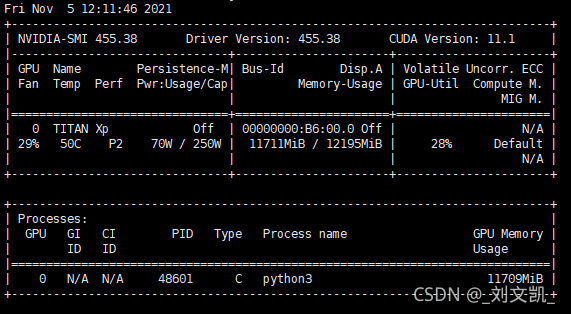

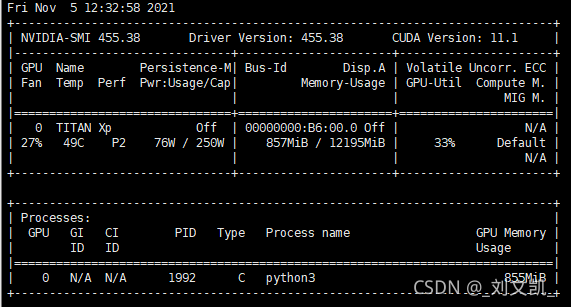

I ran into this error today while running 5-fold cross-validation with grid search. It appears when the amount of data is too large, and also when batch_size is too large, as shown in the figures.

Use the command `watch -n 0.1 nvidia-smi` in Linux to monitor GPU usage (refreshed every 0.1 s).
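To check GPU memory from a script instead of by eye (for example, before choosing a batch size), nvidia-smi can print the same numbers as CSV. The following is a minimal sketch. It assumes the NVIDIA driver's `nvidia-smi` is on the PATH. The helper names `parse_gpu_memory` and `query_gpu_memory` are hypothetical, and the memory values are in MiB:

```python
import subprocess
from typing import List, Tuple

# Real nvidia-smi query flags; "nounits" drops the " MiB" suffix from each value
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text: str) -> List[Tuple[int, int, int]]:
    """Parse nvidia-smi CSV rows into (gpu_index, used_mib, total_mib) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        index, used, total = (field.strip() for field in line.split(","))
        rows.append((int(index), int(used), int(total)))
    return rows


def query_gpu_memory() -> List[Tuple[int, int, int]]:
    """Run nvidia-smi once (requires an NVIDIA driver) and parse its output."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_memory(out)
```

Parsing is kept separate from running the command so the parser can be checked without a GPU.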

Reason

The immediate cause is running out of GPU memory, but often the memory is not genuinely used up. By default, TensorFlow reserves nearly all of the GPU's memory when it first initializes the GPU, even though much of it is never actually used. The fix is therefore to let TensorFlow allocate only the GPU memory it needs. (This also applies to Keras running on top of TensorFlow.)

Solutions:

1. Use a smaller batch_size. This works, but it only treats the symptom, not the root cause.

2. Configure GPU memory usage manually, e.g. in train.py:

(1) In TensorFlow (1.x session API)

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # Specify which GPU to use (set before TensorFlow touches the GPU)

import tensorflow as tf

config = tf.ConfigProto()  # TF 1.x API; in TF 2.x this is tf.compat.v1.ConfigProto
config.gpu_options.allow_growth = True  # Allocate GPU memory on demand
config.gpu_options.per_process_gpu_memory_fraction = 0.4  # Use at most 40% of each GPU's memory
session = tf.Session(config=config)  # Create a TensorFlow session with these settings
...
```

(2) In standalone Keras

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # Specify which GPU to use (set before TensorFlow is imported)

import tensorflow as tf
from keras.models import Sequential
from keras.backend.tensorflow_backend import set_session  # standalone Keras path; tf.keras uses a different one

config = tf.ConfigProto()
config.gpu_options.allow_growth = True  # Allocate GPU memory on demand
set_session(tf.Session(config=config))  # Pass the settings to Keras

model = Sequential()
...
```

(3) In tf.keras

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # Specify which GPU to use (set before TensorFlow is imported)

import tensorflow as tf
from tensorflow.keras.models import Sequential

# tf.compat.v1 keeps the TF1-style session API available under TF 2.x
# (the internal tensorflow_core package is not a stable import path)
config = tf.compat.v1.ConfigProto()
config.gpu_options.allow_growth = True  # Allocate GPU memory on demand
config.gpu_options.per_process_gpu_memory_fraction = 0.4  # Use at most 40% of each GPU's memory
tf.compat.v1.keras.backend.set_session(tf.compat.v1.Session(config=config))  # Pass the settings to tf.keras

model = Sequential()
...
```
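The snippets above use the TensorFlow 1.x session API. In TensorFlow 2.x, the same two settings are usually made through `tf.config` instead. The following is a minimal sketch, assuming a recent TF 2.x. The helpers `memory_limit_mb` and `configure_gpu_memory` are hypothetical names. TF 2.x takes an absolute limit in MB rather than a fraction, so the 40% is converted by hand:

```python
from typing import Optional


def memory_limit_mb(total_mb: int, fraction: float) -> int:
    """Turn a TF1-style per_process_gpu_memory_fraction into an absolute limit in MB."""
    return int(total_mb * fraction)


def configure_gpu_memory(limit_mb: Optional[int] = None) -> None:
    """TF 2.x: enable on-demand growth, or cap the first GPU at limit_mb.

    Must run before TensorFlow initializes the GPUs (before building any model
    or running any op); otherwise TensorFlow raises a RuntimeError.
    """
    import tensorflow as tf  # lazy import: memory_limit_mb works without TensorFlow

    gpus = tf.config.list_physical_devices("GPU")
    if not gpus:
        return  # CPU-only machine: nothing to configure
    if limit_mb is None:
        for gpu in gpus:
            # Counterpart of gpu_options.allow_growth = True
            tf.config.experimental.set_memory_growth(gpu, True)
    else:
        # Counterpart of per_process_gpu_memory_fraction, expressed as a fixed size in MB
        tf.config.set_logical_device_configuration(
            gpus[0], [tf.config.LogicalDeviceConfiguration(memory_limit=limit_mb)]
        )
```

For example, `configure_gpu_memory()` mirrors `allow_growth = True`. By contrast, `configure_gpu_memory(memory_limit_mb(11019, 0.4))` caps a card reporting 11019 MiB in total (an illustrative figure) at roughly 40%. Memory growth and a fixed limit are applied as alternatives here rather than together. Call it at the very top of train.py.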

Supplement:

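tf.keras can also load data in parallel worker processes. The `workers` and `use_multiprocessing` arguments only take effect when the input is a generator or a `keras.utils.Sequence`; with NumPy arrays such as x_train, they are ignored:

```python
# train_sequence: a hypothetical keras.utils.Sequence that yields (x, y) batches
model.fit(train_sequence, use_multiprocessing=True, workers=4)  # load batches in 4 worker processes
```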

Clearing the session:

```python
from tensorflow import keras

keras.backend.clear_session()  # reset all state Keras has created so far
```

After clearing, you can go on to create a new session and new models.
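The original problem came up during cross-validation and grid search, and that is where clearing the session between candidates helps most. Below is a minimal sketch of such a loop. It also skips candidates that run out of memory (for example, a batch_size that is too large) instead of aborting the whole search. `grid_search` and `build_and_score` are hypothetical names. With Keras, you would pass `tf.keras.backend.clear_session` as `clear_session` and `oom_errors=(tf.errors.ResourceExhaustedError,)`:

```python
import itertools
from typing import Any, Callable, Dict, Iterable, Optional, Tuple, Type


def grid_search(
    param_grid: Dict[str, Iterable[Any]],
    build_and_score: Callable[..., float],
    clear_session: Callable[[], None],
    oom_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> Tuple[Optional[Dict[str, Any]], float]:
    """Score every parameter combination (higher is better) and return the best one."""
    names = list(param_grid)
    best_params: Optional[Dict[str, Any]] = None
    best_score = float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        try:
            score = build_and_score(**params)
        except oom_errors:
            continue  # e.g. batch_size too large for the GPU: skip this candidate
        finally:
            clear_session()  # runs even on OOM: reset framework state before the next model
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

Here `build_and_score` would build and train one model with the given parameters and return its validation score, for instance the mean over the five folds. TensorFlow's allocator may keep memory reserved inside the process after an OOM, so skipping a candidate is not guaranteed to free everything.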
