TensorFlow GPU reports the error self._traceback = tf_stack.extract_stack()

Reason 1: the GPU memory (video memory) is full

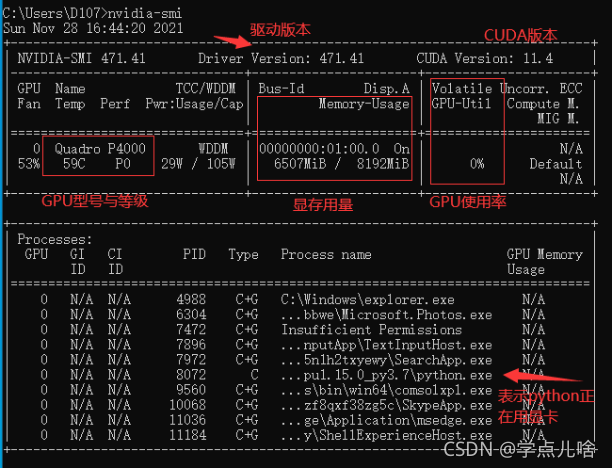

At this point, you can check the GPU status by running the command nvidia-smi in CMD. The most likely cause is that batch_size or the number of hidden layers is too large, so the GPU memory fills up and the data cannot be loaded completely. In that case the GPU never starts working (similar to the relationship between RAM and the CPU), and its utilization stays at 0%.
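The check described above can also be scripted. The following is a minimal sketch, assuming nvidia-smi is on the PATH and supports its CSV query mode; the helper names (parse_gpu_status, query_gpu_status, looks_memory_bound) and the 95% threshold are illustrative assumptions, not part of the original post.

```python
import shutil
import subprocess

QUERY_FIELDS = "memory.used,memory.total,utilization.gpu"


def parse_gpu_status(csv_text):
    """Parse 'used, total, util' CSV rows (MiB, MiB, %) into a list of dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        try:
            used, total, util = (int(field.strip()) for field in line.split(","))
        except ValueError:
            continue  # skip rows with missing or non-numeric fields
        rows.append({"used_mib": used, "total_mib": total, "util_pct": util})
    return rows


def query_gpu_status():
    """Return one dict per GPU, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_status(result.stdout)


def looks_memory_bound(gpu, threshold=0.95):
    """Heuristic (an assumption): memory is nearly full while utilization is 0%."""
    return gpu["used_mib"] / gpu["total_mib"] >= threshold and gpu["util_pct"] == 0


if __name__ == "__main__":
    for index, gpu in enumerate(query_gpu_status()):
        print(index, gpu, "memory-bound?", looks_memory_bound(gpu))
```

A GPU that shows almost all memory in use while utilization stays at 0% matches the symptom described above; any other pattern probably points to a different cause.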

Solution to reason 1:

1. Reduce batch_size and the number of hidden layers, lower the image resolution, close other programs that occupy GPU memory, or use any other method that reduces GPU memory usage, then try again. If the GPU has only 2 GB of memory, it is better to run on the CPU.

2. Use the following code:

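import os

import tensorflow as tf

# CUDA_VISIBLE_DEVICES expects device indices such as '0', not TensorFlow device names such as '/gpu:0'

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

config = tf.compat.v1.ConfigProto(allow_soft_placement=True)

# Let this process use at most 70% of the memory of each visible GPU

config.gpu_options.per_process_gpu_memory_fraction = 0.7

tf.compat.v1.keras.backend.set_session(tf.compat.v1.Session(config=config))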
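The "reduce batch_size and try again" advice in solution 1 can be automated. This is a rough sketch under stated assumptions: run_with_batch_backoff and fake_train_step are hypothetical helpers, and the built-in MemoryError stands in for the framework's out-of-memory exception. With TensorFlow you would probably pass tf.errors.ResourceExhaustedError through the oom_errors parameter, and a real training step may need to rebuild its session or model after a failure.

```python
def run_with_batch_backoff(train_step, batch_size, min_batch_size=1,
                           oom_errors=(MemoryError,)):
    """Call train_step(batch_size), halving batch_size after each OOM error.

    Returns (batch_size_that_worked, result). Re-raises the last error once
    batch_size cannot be halved without dropping below min_batch_size.
    """
    while True:
        try:
            return batch_size, train_step(batch_size)
        except oom_errors:
            if batch_size // 2 < min_batch_size:
                raise
            batch_size //= 2


def fake_train_step(batch_size):
    # Stand-in for a real training step: pretend that more than 16 samples
    # per batch do not fit into GPU memory.
    if batch_size > 16:
        raise MemoryError(f"batch_size={batch_size} does not fit")
    return f"trained with batch_size={batch_size}"


if __name__ == "__main__":
    print(run_with_batch_backoff(fake_train_step, batch_size=128))
```

Halving is only one possible schedule; it finds a workable size in a few attempts, but the result may be smaller than the largest batch that would actually fit.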

Reason 2: the code contains duplicates, so the definitions overlap

I found this when saving and loading a model. The variable assignments and operations were written twice, once for saving and once for loading, and the error self._traceback = tf_stack.extract_stack() was reported during loading.

There are many possible causes of the TensorFlow error self._traceback = tf_stack.extract_stack().

The code that triggers the error is as follows:

import os

import numpy as np

import tensorflow as tf

tf.compat.v1.disable_eager_execution()  # the Session API needs graph mode (matters on TF 2.x)

# Part 1: define the variables and save them

a = tf.Variable(5., dtype=tf.float32)

b = tf.Variable(6., dtype=tf.float32)

num = 10

model_save_path = './model/'

model_name = 'model'

saver = tf.compat.v1.train.Saver()

with tf.compat.v1.Session() as sess:

    init_op = tf.compat.v1.global_variables_initializer()

    sess.run(init_op)

    for step in np.arange(num):

        c = sess.run(tf.add(a, b))

        saver.save(sess, os.path.join(model_save_path, model_name), global_step=step)

    print("Parameters saved successfully!")

# Part 2: load the parameters (the repeated definitions below trigger the error)

a = tf.Variable(5., dtype=tf.float32)

b = tf.Variable(6., dtype=tf.float32) # Note the repetition here

num = 10

model_save_path = './model/'

model_name = 'model'

saver = tf.compat.v1.train.Saver() # Note the repetition here

with tf.compat.v1.Session() as sess:

    init_op = tf.compat.v1.global_variables_initializer()

    sess.run(init_op)

    ckpt = tf.train.get_checkpoint_state(model_save_path)

    if ckpt and ckpt.model_checkpoint_path:

        saver.restore(sess, ckpt.model_checkpoint_path)

        print("load success")

Running the code reports the error: self._traceback = tf_stack.extract_stack()

Solution to reason 2:

Comment out either the second Saver() construction in the parameter-loading part, or the repeated variable definitions:

a = tf.Variable(5., dtype=tf.float32)

b = tf.Variable(6., dtype=tf.float32) # Note the repetition here

After either change, the model no longer reports the error. I do not know the specific reason.
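One plausible explanation, which the original post does not confirm: in TF 1.x graph mode, every unnamed tf.Variable added to the same default graph gets a fresh name (Variable, Variable_1, Variable_2, ...), and a Saver built without arguments covers all variables that exist when it is constructed. The repeated definitions would then create Variable_2 and Variable_3, which the checkpoint does not contain, so the second Saver fails on restore. The sketch below is a pure-Python toy model of that behaviour; FakeGraph, FakeSaver, and simulate are hypothetical stand-ins, not TensorFlow classes.

```python
class FakeGraph:
    """Toy stand-in for a TF 1.x default graph: hands out unique variable names."""

    def __init__(self):
        self.variable_names = []

    def new_variable(self, base="Variable"):
        # Mimics the naming pattern Variable, Variable_1, Variable_2, ...
        count = len(self.variable_names)
        name = base if count == 0 else f"{base}_{count}"
        self.variable_names.append(name)
        return name


class FakeSaver:
    """Toy stand-in for Saver(): covers every variable known at construction."""

    def __init__(self, graph):
        self.names = list(graph.variable_names)

    def save(self):
        # The set of names written to the pretend checkpoint.
        return set(self.names)

    def missing_on_restore(self, checkpoint):
        # Names this saver would look for but the checkpoint does not hold.
        return [name for name in self.names if name not in checkpoint]


def simulate(redefine_variables, rebuild_saver):
    """Replay the save-then-load script and list names missing on restore."""
    graph = FakeGraph()
    graph.new_variable()  # a
    graph.new_variable()  # b
    saver = FakeSaver(graph)
    checkpoint = saver.save()

    if redefine_variables:
        graph.new_variable()  # repeated a
        graph.new_variable()  # repeated b
    if rebuild_saver:
        saver = FakeSaver(graph)
    return saver.missing_on_restore(checkpoint)


if __name__ == "__main__":
    print(simulate(redefine_variables=True, rebuild_saver=True))   # ['Variable_2', 'Variable_3']
    print(simulate(redefine_variables=True, rebuild_saver=False))  # []
    print(simulate(redefine_variables=False, rebuild_saver=True))  # []
```

Under this model, removing either repetition leaves the saver with only names that the checkpoint contains, which is consistent with both fixes described above.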
