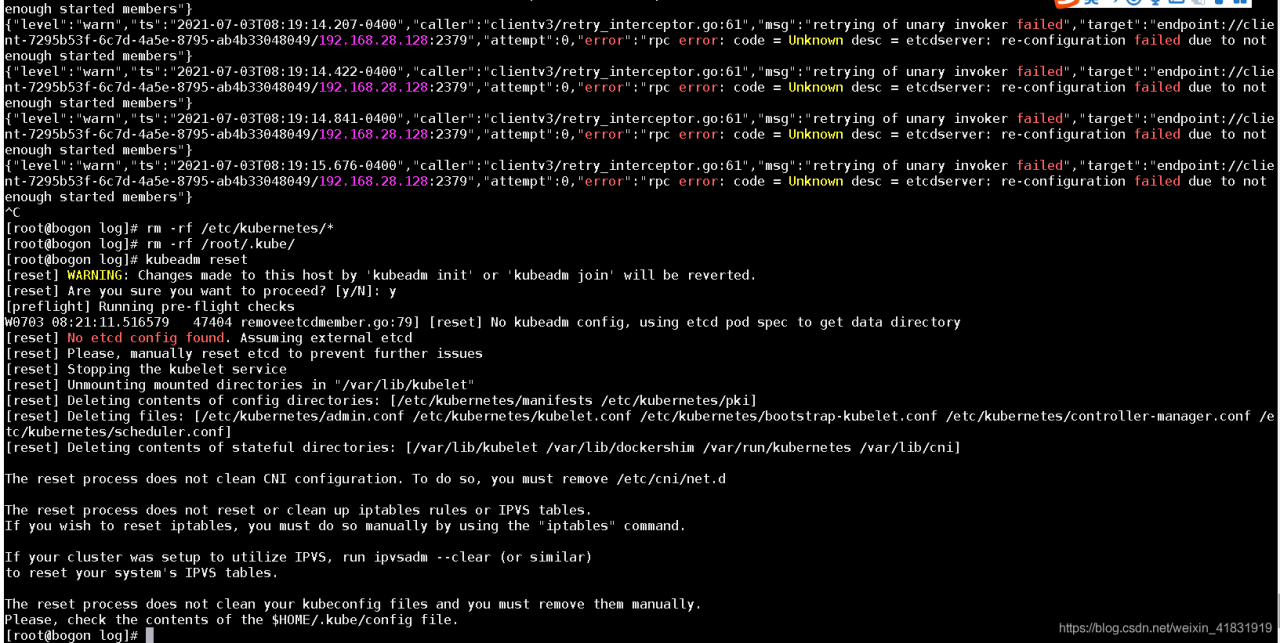

Error information:

[root@bogon log]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed?[y/N]: y

[preflight] Running pre-flight checks

[reset] Removing info for node "bogon" from the ConfigMap "kubeadm-config" in the "kube-system" Namespace

{"level":"warn","ts":"2021-07-03T08:19:14.041-0400","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-7295b53f-6c7d-4a5e-8795-ab4b33048049/192.168.28.128:2379","attempt":0,"error":"rpc error: code = Unknown desc = etcdserver: re-configuration failed due to not enough started members"}

{"level":"warn","ts":"2021-07-03T08:19:14.096-0400","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-7295b53f-6c7d-4a5e-8795-ab4b33048049/192.168.28.128:2379","attempt":0,"error":"rpc error: code = Unknown desc = etcdserver: re-configuration failed due to not enough started members"}

Solutions:

Run the following two commands to remove the leftover configuration:

rm -rf /etc/kubernetes/*

rm -rf /root/.kube/

Then run the reset again:

kubeadm reset
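The steps above can be sketched as a small shell script. This is only an illustration under stated assumptions: the names `DRY_RUN`, `run`, and `cleanup_and_reset` are made up for this example and are not part of kubeadm. By default the script only prints the commands it would run, so the sequence can be reviewed before anything is deleted.

```shell
#!/bin/sh
# Sketch of the cleanup sequence described above.
# DRY_RUN, run, and cleanup_and_reset are illustrative names (assumptions),
# not kubeadm features. DRY_RUN defaults to 1: commands are printed only.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

cleanup_and_reset() {
    # Remove the configuration left behind by 'kubeadm init' or 'kubeadm join'.
    # The glob is passed to 'sh -c' so it is expanded only at execution time.
    run sh -c 'rm -rf /etc/kubernetes/*'
    # Remove root's kubeconfig directory.
    run rm -rf /root/.kube/
    # Retry the reset (it will ask for confirmation, as in the log above).
    run kubeadm reset
}

cleanup_and_reset
```

To actually perform the cleanup, run the script as root with `DRY_RUN=0`. Both `rm -rf` commands are destructive, so it is worth checking the printed commands first.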
