**

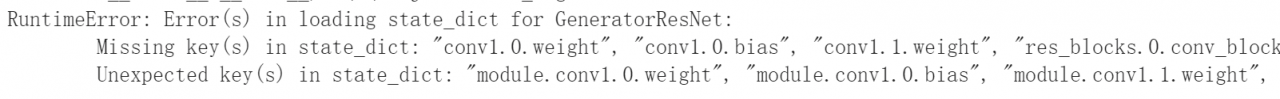

Error (s) in loading state_dict for GeneratorResNet

**

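Cause of the problem: check whether the model was wrapped in `nn.DataParallel` for multi-GPU training. A model saved from such a wrapper has an extra `module.` prefix on every key of its `state_dict`. For example, `module.<layer>.weight` appears instead of `<layer>.weight`.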
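The sketch below confirms the cause. It assumes a CPU-only run and uses a toy `nn.Linear` layer as a stand-in for `GeneratorResNet`. `nn.DataParallel` registers the wrapped network as its attribute `module`, so every key in the wrapper's `state_dict` gains the `module.` prefix. By contrast, `wrapped.module.state_dict()` returns the original, unprefixed keys.

```python
import torch.nn as nn

# Toy layer standing in for GeneratorResNet (assumption for illustration only)
net = nn.Linear(2, 2)
wrapped = nn.DataParallel(net)  # can also be constructed on a CPU-only machine

prefixed_keys = list(wrapped.state_dict().keys())
plain_keys = list(wrapped.module.state_dict().keys())
print(prefixed_keys)  # ['module.weight', 'module.bias']
print(plain_keys)     # ['weight', 'bias']

# Loading the prefixed dict into the bare layer reproduces the error
error_message = ""
try:
    net.load_state_dict(wrapped.state_dict())
except RuntimeError as err:
    error_message = str(err)
print(error_message.splitlines()[0])  # Error(s) in loading state_dict for Linear:
```

This also shows a way to avoid the problem at save time: saving `wrapped.module.state_dict()` instead of `wrapped.state_dict()` produces a checkpoint without the prefix.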

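Observe the error message: the keys stored in the checkpoint carry an extra `module.` prefix that the model's own keys do not have. `load_state_dict` therefore lists the model's keys under "Missing key(s)" and the prefixed checkpoint keys under "Unexpected key(s)".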
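The check can also be done programmatically. The helper below is a hypothetical sketch (`diagnose_keys` is not a PyTorch function). It reproduces the missing/unexpected comparison from plain key lists and reports whether stripping `module.` would reconcile them. In a real script you would pass it `torch.load(path).keys()` and `generator.state_dict().keys()`.

```python
def diagnose_keys(checkpoint_keys, model_keys, prefix="module."):
    """Compare checkpoint keys with model keys (hypothetical helper).

    Returns (missing, unexpected, fixable): the keys the model expects but the
    checkpoint lacks, the keys the checkpoint has but the model does not know,
    and whether removing a leading `prefix` would make the two sets match.
    """
    ckpt, model = set(checkpoint_keys), set(model_keys)
    missing = sorted(model - ckpt)
    unexpected = sorted(ckpt - model)
    stripped = {k[len(prefix):] if k.startswith(prefix) else k for k in ckpt}
    return missing, unexpected, stripped == model


missing, unexpected, fixable = diagnose_keys(
    ["module.conv.weight", "module.conv.bias"],  # keys as saved under DataParallel
    ["conv.weight", "conv.bias"],                # keys of the unwrapped model
)
print(missing)     # ['conv.bias', 'conv.weight']
print(unexpected)  # ['module.conv.bias', 'module.conv.weight']
print(fixable)     # True
```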


Solution:

1. Delete the `module.` prefix from the checkpoint keys before loading:

```python
from collections import OrderedDict

import torch

# `opt`, `generator` and `discriminator` are defined earlier in the original script.
gentmps = torch.load("./saved_models/generator_%d.pth" % opt.epoch)
distmps = torch.load("./saved_models/discriminator_%d.pth" % opt.epoch)

new_gens = OrderedDict()
new_diss = OrderedDict()

for k, v in gentmps.items():
    # Remove only the leading 'module.' added by nn.DataParallel
    name = k[len("module."):] if k.startswith("module.") else k
    new_gens[name] = v  # same tensor, stored under the unprefixed key

for k, v in distmps.items():
    name = k[len("module."):] if k.startswith("module.") else k
    new_diss[name] = v

generator.load_state_dict(new_gens)
discriminator.load_state_dict(new_diss)
```
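The same logic can be wrapped in a small reusable function so that both the generator and the discriminator go through one code path. `strip_prefix` is a hypothetical name used only for this sketch. It removes the prefix only at the start of each key, which matters when a layer name itself contains `module.`; `str.replace` would strip every occurrence. Recent PyTorch releases also appear to ship `torch.nn.modules.utils.consume_prefix_in_state_dict_if_present(state_dict, prefix)`, which strips the prefix in place, but check that your installed version provides it.

```python
from collections import OrderedDict


def strip_prefix(state_dict, prefix="module."):
    """Return a copy of `state_dict` with a leading `prefix` removed from each key.

    Keys without the prefix are kept unchanged, so the helper is safe to call on
    checkpoints saved with or without nn.DataParallel.
    """
    return OrderedDict(
        (k[len(prefix):] if k.startswith(prefix) else k, v)
        for k, v in state_dict.items()
    )


ckpt = OrderedDict([("module.conv.weight", 1), ("module.submodule.bias", 2), ("fc.bias", 3)])
print(list(strip_prefix(ckpt)))  # ['conv.weight', 'submodule.bias', 'fc.bias']

# str.replace would also mangle the inner 'module.' of 'submodule.':
print("module.submodule.bias".replace("module.", ""))  # subbias
```

In the training script this would be used as `generator.load_state_dict(strip_prefix(gentmps))`.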
