**

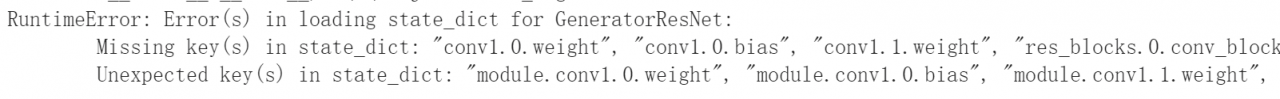

Error (s) in loading state_dict for GeneratorResNet

**

Cause of the problem: check whether `DataParallel` was used for multi-GPU training. A model wrapped this way has the prefix `module.` automatically added to every key in its state_dict.

Observe the error message: the keys in the saved model carry an extra `module.` prefix, so they do not match the keys the model expects.
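To confirm that the prefix is the only difference, the two key sets can be compared directly. The following is a minimal sketch using plain lists of key names. The helper `diff_keys` and the layer names are hypothetical and only for illustration; in a real script the inputs would be something like `generator.state_dict().keys()` and the keys of the loaded checkpoint.

```python
def diff_keys(model_keys, checkpoint_keys):
    """Return (missing, unexpected) key lists, similar in spirit to what
    the load_state_dict error message reports."""
    model_keys, checkpoint_keys = set(model_keys), set(checkpoint_keys)
    missing = sorted(model_keys - checkpoint_keys)      # expected by the model, absent in the file
    unexpected = sorted(checkpoint_keys - model_keys)   # present in the file, unknown to the model
    return missing, unexpected


# Hypothetical key names standing in for a real model and checkpoint.
model_keys = ["conv1.weight", "conv1.bias"]
checkpoint_keys = ["module.conv1.weight", "module.conv1.bias"]

missing, unexpected = diff_keys(model_keys, checkpoint_keys)

# If stripping 'module.' from the unexpected keys yields exactly the
# missing keys, the mismatch is caused by the DataParallel prefix alone.
prefix = "module."
prefix_only = (
    all(k.startswith(prefix) for k in unexpected)
    and {k[len(prefix):] for k in unexpected} == set(missing)
)
print(missing, unexpected, prefix_only)
```

If `prefix_only` is true, renaming the keys is enough; otherwise the checkpoint and the model differ in some other way as well.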

Solution:

1. Delete the `module.` prefix from the keys

```python
from collections import OrderedDict

import torch

# `opt`, `generator` and `discriminator` are defined elsewhere in the script.
# Load the checkpoints that were saved from the DataParallel-wrapped models.
gentmps = torch.load("./saved_models/generator_%d.pth" % opt.epoch)
distmps = torch.load("./saved_models/discriminator_%d.pth" % opt.epoch)

new_gens = OrderedDict()
new_diss = OrderedDict()

for k, v in gentmps.items():
    name = k.replace('module.', '')  # remove 'module.'
    new_gens[name] = v  # keep the same value under the renamed key

for k, v in distmps.items():
    name = k.replace('module.', '')  # remove 'module.'
    new_diss[name] = v  # keep the same value under the renamed key

# The renamed keys now match the unwrapped models.
generator.load_state_dict(new_gens)
discriminator.load_state_dict(new_diss)
```
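Since the same renaming loop is repeated for both models, it can be factored into one small function. This is a sketch rather than part of the original fix: the helper name `strip_prefix` is hypothetical, and the integer values stand in for the tensors a real checkpoint would hold. Unlike `k.replace('module.', '')`, it removes the prefix only at the start of a key, so a layer whose own name happens to contain `module.` is left untouched.

```python
from collections import OrderedDict


def strip_prefix(state_dict, prefix="module."):
    """Return a new OrderedDict with a leading `prefix` removed from each key."""
    cleaned = OrderedDict()
    for key, value in state_dict.items():
        # Only strip when the key actually starts with the prefix.
        new_key = key[len(prefix):] if key.startswith(prefix) else key
        cleaned[new_key] = value
    return cleaned


# Stand-in for a loaded checkpoint; real values would be tensors.
checkpoint = OrderedDict([
    ("module.conv1.weight", 1),
    ("module.conv1.bias", 2),
    ("block.module.weight", 3),  # 'module.' in the middle is kept as-is
])

cleaned = strip_prefix(checkpoint)
print(list(cleaned.keys()))
```

With such a helper, the loading step would reduce to something like `generator.load_state_dict(strip_prefix(gentmps))`, and the same call for the discriminator.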