1. Notes on handling a Kafka startup error

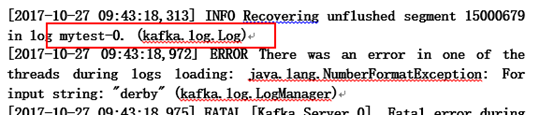

After Kafka was stopped, the following error occurred when it was restarted:

```
[2017-10-27 09:43:18,313] INFO Recovering unflushed segment 15000679 in log mytest-0. (kafka.log.Log)
[2017-10-27 09:43:18,972] ERROR There was an error in one of the threads during logs loading: java.lang.NumberFormatException: For input string: "derby" (kafka.log.LogManager)
[2017-10-27 09:43:18,975] FATAL [Kafka Server 0], Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)
java.lang.NumberFormatException: For input string: "derby"
	at java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)
	at java.lang.Long.parseLong(Long.java:589)
	at java.lang.Long.parseLong(Long.java:631)
	at scala.collection.immutable.StringLike$class.toLong(StringLike.scala:277)
	at scala.collection.immutable.StringOps.toLong(StringOps.scala:29)
	at kafka.log.Log$.offsetFromFilename(Log.scala:1648)
	at kafka.log.Log$$anonfun$loadSegmentFiles$3.apply(Log.scala:284)
	at kafka.log.Log$$anonfun$loadSegmentFiles$3.apply(Log.scala:272)
	at scala.collection.TraversableLike$WithFilter$$anonfun$foreach$1.apply(TraversableLike.scala:733)
```

Looking at the log, one line stands out:

```
ERROR There was an error in one of the threads during logs loading: java.lang.NumberFormatException: For input string: "derby" (kafka.log.LogManager)
```

Taken literally, one of the threads that load the logs failed with a java.lang.NumberFormatException because it tried to parse the string "derby" as a number.

What the hell?

First, let's look at what Kafka does when it restarts. When a Kafka broker starts, it reloads the existing data of every topic partition, and under normal circumstances it logs the recovery of each one, for example:

```
INFO Recovering unflushed segment 8790240 in log userlog-2. (kafka.log.Log)
INFO Loading producer state from snapshot file 00000000000008790240.snapshot for partition userlog-2 (kafka.log.ProducerStateManager)
INFO Loading producer state from offset 10464422 for partition userlog-2 with message format version 2 (kafka.log.Log)
INFO Loading producer state from snapshot file 00000000000010464422.snapshot for partition userlog-2 (kafka.log.ProducerStateManager)
INFO Completed load of log userlog-2 with 2 log segments, log start offset 6223445 and log end offset 10464422 in 4460 ms (kafka.log.Log)
```
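The snapshot names in these logs follow Kafka's file naming convention: a segment's files are named after its base offset, zero-padded to 20 digits, plus a suffix. Going the other way, the stack trace above shows Log$.offsetFromFilename calling toLong while loadSegmentFiles runs; it parses the part of the file name before the first dot. The Python sketch below mimics that round trip to show why a file called derby.log breaks loading. The function names are hypothetical and this is an illustration, not Kafka's code.

```python
def segment_filename(base_offset: int, suffix: str) -> str:
    """Name a file after its base offset, zero-padded to 20 digits."""
    return f"{base_offset:020d}{suffix}"


def offset_from_filename(filename: str) -> int:
    """Parse the base offset from the part of the name before the first '.'."""
    prefix = filename[: filename.index(".")]
    # For a non-numeric prefix such as 'derby', int() raises ValueError,
    # the Python analogue of Java's NumberFormatException.
    return int(prefix)


if __name__ == "__main__":
    print(segment_filename(8790240, ".snapshot"))
    print(offset_from_filename("00000000000010464422.snapshot"))  # 10464422
    try:
        offset_from_filename("derby.log")
    except ValueError as exc:
        print("failed to load segment:", exc)
```

Any file whose name reaches the parsing step must therefore start with digits; "derby" is simply not an offset.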

But if loading the data of any topic fails, the broker shuts down and reports an error:

```
ERROR There was an error in one of the threads during logs loading: java.lang.NumberFormatException: For input string: "derby" (kafka.log.LogManager)
```

So the problem clearly lies in the topic data. But what exactly is wrong?

Go to the directory where Kafka stores its topic data. The path is set by log.dirs in server.properties:

```
log.dirs=/data/kafka/kafka-logs
```

1) The line just before the error in the log ("INFO Recovering unflushed segment 15000679 in log mytest-0.") shows that the problem occurred while loading mytest-0, i.e. partition 0 of topic mytest. Go to that partition's directory and you will find a stray file called derby.log. Delete it and restart the service.

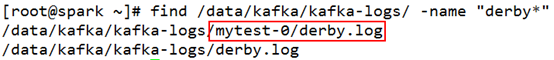
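Step 1 relies on the line just before the error. A minimal sketch of that heuristic, assuming the broker log is available as a list of lines and a single log directory (both assumptions; suspect_partition_dir is a hypothetical name): it remembers the partition named by the last "Recovering unflushed segment ... in log X." line before the first ERROR line and joins it to the log directory. Treat the result as a hint only: logs can be loaded by several threads, so the preceding line may belong to another partition, and the full-directory check in step 2 is more reliable.

```python
import os
import re

# Matches lines such as:
# INFO Recovering unflushed segment 15000679 in log mytest-0. (kafka.log.Log)
RECOVERING = re.compile(r"Recovering unflushed segment \d+ in log (\S+?)\.(?:\s|$)")


def suspect_partition_dir(log_lines, log_dir):
    """Hypothetical helper: return the partition directory named by the last
    'Recovering unflushed segment' line before the first ERROR line, or None."""
    last_partition = None
    for line in log_lines:
        if " ERROR " in line:
            break  # stop at the first error; the line before it is the hint
        match = RECOVERING.search(line)
        if match:
            last_partition = match.group(1)
    if last_partition is None:
        return None
    return os.path.join(log_dir, last_partition)


if __name__ == "__main__":
    sample = [
        "[2017-10-27 09:43:18,313] INFO Recovering unflushed segment 15000679 in log mytest-0. (kafka.log.Log)",
        "[2017-10-27 09:43:18,972] ERROR There was an error in one of the threads during logs loading: "
        "java.lang.NumberFormatException: For input string: \"derby\" (kafka.log.LogManager)",
    ]
    print(suspect_partition_dir(sample, "/data/kafka/kafka-logs"))
```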

2) Check the whole log directory to make sure there are no other similar files:

```shell
cd /data/kafka/kafka-logs
find /data/kafka/kafka-logs/ -name "derby*"
```

The output shows a derby.log file under mytest-0, which does not belong there. Because its name ends in .log, the broker treats it as a log segment and tries to parse the part before the first dot, "derby", as a Long base offset, which throws the NumberFormatException above. Delete this file and restart Kafka.
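The find command above only looks for derby*. A more general check is sketched below: it walks every partition directory under the given log directories and flags files that end in .log, .index or .timeindex but whose prefix is not a number, i.e. files that would hit the same NumberFormatException. The suffix list is an assumption on my part (the exact set of offset-named files differs between Kafka versions), and the script name in the usage comment is hypothetical. Run it while the broker is stopped and look at what it prints before deleting anything.

```python
import os
import re
import sys

# Assumption: files ending in these suffixes are parsed as a base offset when a
# partition is loaded (.log segments plus their index files). The exact set
# varies between Kafka versions.
OFFSET_NAMED_SUFFIXES = (".log", ".index", ".timeindex")
ASCII_DIGITS = re.compile(r"[0-9]+")


def find_stray_segment_files(log_dirs):
    """Yield files inside partition directories whose name ends in an
    offset-named suffix but whose prefix (before the first '.') is not numeric."""
    for log_dir in log_dirs:
        for partition in sorted(os.listdir(log_dir)):
            partition_path = os.path.join(log_dir, partition)
            if not os.path.isdir(partition_path):
                continue  # top-level files (checkpoints, meta.properties) are not partitions
            for name in sorted(os.listdir(partition_path)):
                path = os.path.join(partition_path, name)
                if not os.path.isfile(path) or not name.endswith(OFFSET_NAMED_SUFFIXES):
                    continue
                prefix = name.split(".", 1)[0]
                if not ASCII_DIGITS.fullmatch(prefix):
                    yield path


if __name__ == "__main__":
    # Usage: python find_stray.py /data/kafka/kafka-logs [more log.dirs entries...]
    for stray in find_stray_segment_files(sys.argv[1:]):
        print(stray)
```

Files such as leader-epoch-checkpoint, or renamed segments ending in .deleted, are skipped because they do not end in one of the listed suffixes.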

2. Notes on a Kafka and ZooKeeper error

Both Kafka and ZooKeeper started normally, but the log showed the connection being dropped soon after it was established. The error message was:

```
[2017-10-27 15:06:08,981] INFO Established session 0x15f5c88c014000a with negotiated timeout 240000 for client /127.0.0.1:33494 (org.apache.zookeeper.server.ZooKeeperServer)
[2017-10-27 15:06:08,982] INFO Processed session termination for sessionid: 0x15f5c88c014000a (org.apache.zookeeper.server.PrepRequestProcessor)
[2017-10-27 15:06:08,984] WARN caught end of stream exception (org.apache.zookeeper.server.NIOServerCnxn)
EndOfStreamException: Unable to read additional data from client sessionid 0x15f5c88c014000a, likely client has closed socket
	at org.apache.zookeeper.server.NIOServerCnxn.doIO(NIOServerCnxn.java:239)
	at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:203)
	at java.lang.Thread.run(Thread.java:745)
```

Reading the log literally: the first line says that session 0x15f5c88c014000a was established with a negotiated timeout of 240000 ms (240 seconds); the second says that this session was terminated; the third says that no more data could be read from session 0x15f5c88c014000a, most likely because the client had closed the socket (once the session is gone, there is nothing left to read). Taken together, it looks as if the session is being disconnected because of a timeout, so the fix is to increase the session timeout.

The configured timeout is too short: the Consumer closes the connection before ZooKeeper has finished reading its data.

Solution:

Modify Kafka's server.properties file:

```
# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=600000
zookeeper.session.timeout.ms=400000
```

This is usually enough. To be safe, you can also change the ZooKeeper configuration file:

```
# per-IP limit on the number of client connections (0 disables the limit)
maxClientCnxns=1000
tickTime=120000
```
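Why tickTime matters here: a ZooKeeper server does not simply accept the session timeout a client requests. It clamps the request to the range [minSessionTimeout, maxSessionTimeout], which default to 2 × tickTime and 20 × tickTime. With tickTime=2000 (the value in ZooKeeper's zoo_sample.cfg), for instance, the zookeeper.session.timeout.ms=400000 set in server.properties would be cut down to 40000 ms, and raising tickTime is what lets the larger value through. Incidentally, 240000, the negotiated timeout in the log above, is exactly 2 × 120000, which is what this rule produces for a small requested timeout when tickTime is 120000. The Python function below is a sketch of that clamping rule for illustration (the name is hypothetical), not ZooKeeper's code.

```python
from typing import Optional


def negotiated_session_timeout(requested_ms: int, tick_time_ms: int,
                               min_session_timeout_ms: Optional[int] = None,
                               max_session_timeout_ms: Optional[int] = None) -> int:
    """Clamp a client's requested session timeout the way a ZooKeeper server does.

    Unless minSessionTimeout / maxSessionTimeout are set explicitly, they
    default to 2 * tickTime and 20 * tickTime.
    """
    lower = 2 * tick_time_ms if min_session_timeout_ms is None else min_session_timeout_ms
    upper = 20 * tick_time_ms if max_session_timeout_ms is None else max_session_timeout_ms
    timeout = requested_ms
    if timeout < lower:
        timeout = lower  # raised to the minimum first...
    if timeout > upper:
        timeout = upper  # ...then capped at the maximum
    return timeout


if __name__ == "__main__":
    # zookeeper.session.timeout.ms=400000 against two tickTime values
    print(negotiated_session_timeout(400000, 2000))    # 40000
    print(negotiated_session_timeout(400000, 120000))  # 400000
```

If minSessionTimeout or maxSessionTimeout are set explicitly in the ZooKeeper config, those values replace the tickTime-based defaults, which is what the optional parameters model.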
