Error:

CommandNotFoundError: Your shell has not been properly configured to use ‘conda activate’.

Solution:

source activate

source deactivate

After that, running conda activate XXX again works without problems.

When debugging DenseNet code for a classification task, the following error occurred during image preprocessing:

RuntimeError: stack expects each tensor to be equal size, but got [640, 640] at entry 0 and [560, 560] at entry 2

It means that the loaded images do not all have the same size.

After some searching, it turned out that the problem was in the preprocessing applied when loading the images.

The following is the instantiation of the training data preprocessing:

train_transform = Compose(
    [
        LoadImaged(keys=keys),
        AddChanneld(keys=keys),
        CropForegroundd(keys=keys[:-1], source_key="tumor"),
        ScaleIntensityd(keys=keys[:-1]),
        # Orientationd(keys=keys[:-1], axcodes="RAI"),
        Resized(keys=keys[:-1], spatial_size=(64, 64), mode='bilinear'),
        ConcatItemsd(keys=keys[:-1], name="image"),
        RandGaussianNoised(keys=["image"], std=0.01, prob=0.15),
        RandFlipd(keys=["image"], prob=0.5),  # , spatial_axis=[0, 1]
        RandAffined(keys=["image"], mode='bilinear', prob=1.0, spatial_size=[64, 64],  # The 3 here is because we don't know what the size of the three modal images will be after stitching, so we first use
                    rotate_range=(0, 0, np.pi/15), scale_range=(0.1, 0.1)),
        ToTensord(keys=keys),
    ]
)

My keys are ["t2_img", "dwi_img", "adc_img", "tumor"].

The error shows that the loaded tensors have dimensions [640, 640] and [560, 560], which are the sizes of my original images, so the problem had to be in either the cropping step or the resize step. After checking both, the problem turned out to be in the resize step: I had selected keys=keys[:-1], which does not include "tumor". As a result, the tumor image keeps its original size after the resize step. Because each sample is loaded as a whole dictionary, the tumor entries are batched together with the other keys, and stacking them fails when their original sizes differ. Make the following correction:

train_transform = Compose(
    [
        LoadImaged(keys=keys),
        AddChanneld(keys=keys),
        CropForegroundd(keys=keys[:-1], source_key="tumor"),
        ScaleIntensityd(keys=keys[:-1]),
        # Orientationd(keys=keys[:-1], axcodes="RAI"),
        Resized(keys=keys, spatial_size=(64, 64), mode='bilinear'),  # remove [:-1]
        ConcatItemsd(keys=keys[:-1], name="image"),
        RandGaussianNoised(keys=["image"], std=0.01, prob=0.15),
        RandFlipd(keys=["image"], prob=0.5),  # , spatial_axis=[0, 1]
        RandAffined(keys=["image"], mode='bilinear', prob=1.0, spatial_size=[64, 64],  # The 3 here is because we don't know what the size of the three modal images will be after stitching, so we first use
                    rotate_range=(0, 0, np.pi/15), scale_range=(0.1, 0.1)),
        ToTensord(keys=keys),
    ]
)

It now runs successfully!
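The screening step described above (finding which key still has inconsistent sizes) can be sketched without any deep learning library. This is only an illustration: the function name find_inconsistent_keys and the sample shapes are assumptions, and in practice the shapes would be collected from the transformed dataset items.

```python
from collections import defaultdict


def find_inconsistent_keys(samples):
    """Return {key: set of shapes} for keys whose shape differs across samples.

    `samples` is a list of dicts that map a key to a shape tuple.
    """
    shapes = defaultdict(set)
    for sample in samples:
        for key, shape in sample.items():
            shapes[key].add(tuple(shape))
    # Only keys with more than one distinct shape would break batching.
    return {key: seen for key, seen in shapes.items() if len(seen) > 1}


# Hypothetical shapes after the faulty pipeline: "tumor" was never resized.
samples = [
    {"t2_img": (64, 64), "tumor": (640, 640)},
    {"t2_img": (64, 64), "tumor": (560, 560)},
]
print(find_inconsistent_keys(samples))
```

Any key reported here is a candidate for a transform whose keys argument left it out.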

AttributeError: module ‘time’ has no attribute ‘clock’

Error Messages:

# `flask_sqlalchemy` Error:

File "D:\python38-flasky\lib\site-packages\sqlalchemy\util\compat.py", line 172, in <module>

time_func = time.clock

AttributeError: module 'time' has no attribute 'clock'

Reason:

time.clock was removed in Python 3.8, but the installed SQLAlchemy version still calls it, so this is a version incompatibility.
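The incompatibility can be worked around with a small fallback. The following is a minimal sketch, not SQLAlchemy's actual code; the name time_func is borrowed from the traceback, and the timed workload is only a placeholder.

```python
import time

# Prefer time.clock where it still exists (Python < 3.8) and fall back to
# time.perf_counter otherwise. The function object is assigned here,
# it is not called.
time_func = getattr(time, "clock", time.perf_counter)

start = time_func()
sum(range(100_000))  # placeholder work to time
elapsed = time_func() - start
print(f"elapsed: {elapsed:.6f} s")
```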

Solution:

Replace time.clock with time.perf_counter in compat.py, for example:

import time

if win32 or jython:
    # time_func = time.clock
    time_func = time.perf_counter
else:
    time_func = time.time

Pdfplumber reports an error when reading PDF tables: AttributeError: function/symbol 'ARC4_stream_init' not found in library

Error description:

When using pdfplumber to extract tables from a PDF, an error reports that ARC4_stream_init cannot be found.

Traceback (most recent call last):

File "C:\Users\Stan\Python\ALIRT\pdf extracter\test.py", line 50, in <module>

text = convert_pdf_to_txt('test_pdf.pdf')

File "C:\Users\Stan\Python\ALIRT\pdf extracter\test.py", line 40, in convert_pdf_to_txt

for page in PDFPage.get_pages(fp, pagenos, maxpages=maxpages, password=password,caching=caching, check_extractable=True):

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfpage.py", line 127, in get_pages

doc = PDFDocument(parser, password=password, caching=caching)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 564, in __init__

self._initialize_password(password)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 590, in _initialize_password

handler = factory(docid, param, password)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 283, in __init__

self.init()

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 291, in init

self.init_key()

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 304, in init_key

self.key = self.authenticate(self.password)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 354, in authenticate

key = self.authenticate_user_password(password)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 361, in authenticate_user_password

if self.verify_encryption_key(key):

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 368, in verify_encryption_key

u = self.compute_u(key)

File "C:\Users\Stan\anaconda3\lib\site-packages\pdfminer\pdfdocument.py", line 326, in compute_u

result = ARC4.new(key).encrypt(hash.digest()) # 4

File "C:\Users\Stan\anaconda3\lib\site-packages\Crypto\Cipher\ARC4.py", line 132, in new

return ARC4Cipher(key, *args, **kwargs)

File "C:\Users\Stan\anaconda3\lib\site-packages\Crypto\Cipher\ARC4.py", line 60, in __init__

result = _raw_arc4_lib.ARC4_stream_init(c_uint8_ptr(key),

File "C:\Users\Stan\anaconda3\lib\site-packages\cffi\api.py", line 912, in __getattr__

make_accessor(name)

File "C:\Users\Stan\anaconda3\lib\site-packages\cffi\api.py", line 908, in make_accessor

accessors[name](name)

File "C:\Users\Stan\anaconda3\lib\site-packages\cffi\api.py", line 838, in accessor_function

value = backendlib.load_function(BType, name)

AttributeError: function/symbol 'ARC4_stream_init' not found in library 'C:\Users\Stan\anaconda3\lib\site-packages\Crypto\Util\..\Cipher\_ARC4.cp37-win_amd64.pyd': error 0x7f

Solution:

Downgrade:

pip install pycryptodome==3.0.0

Two other methods:

Method 1:

# Installation
$ pip install arc4

# Import ARC4 package
from arc4 import ARC4

Method 2:

# Installation (the Crypto package is provided by pycryptodome)
$ pip install pycryptodome

# Import ARC4 package
from Crypto.Cipher import ARC4

Problem code:

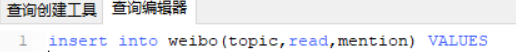

MySQLdb._exceptions.ProgrammingError: (1064, "You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'read,mention) values ('家长偏心对孩子的影响有多大',15424499,5655)' at line 1")

First analyze the error message: MySQLdb._exceptions.ProgrammingError with code 1064 means that the MySQL server could not parse the SQL statement, so the statement itself is at fault.

So I first checked the SQL statement, and running it in Navicat reproduced the error.

Obviously, read is a reserved keyword in MySQL, so using it as an unquoted column name raises the error.

Solution: change the key (column name) read in items to something else.
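If renaming the column is not an option, MySQL also accepts reserved words as identifiers when they are quoted with backticks. A minimal sketch of building such a statement follows; the helper names and the table name articles and column title are hypothetical, while read and mention come from the error message.

```python
def quote_identifier(name):
    """Quote a MySQL identifier with backticks, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def build_insert(table, columns):
    """Build a parameterized INSERT statement with quoted identifiers."""
    cols = ", ".join(quote_identifier(c) for c in columns)
    placeholders = ", ".join(["%s"] * len(columns))
    return f"INSERT INTO {quote_identifier(table)} ({cols}) VALUES ({placeholders})"


# "articles" and "title" are hypothetical names.
sql = build_insert("articles", ["title", "read", "mention"])
print(sql)
# With a MySQLdb cursor the values are passed separately:
# cursor.execute(sql, (title, read_count, mention_count))
```

Passing the values as parameters instead of formatting them into the string also avoids quoting problems in the values themselves.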

forrtl: error (200): program aborting due to control-C event

PyCharm error:

forrtl: error (200): program aborting due to control-C event

Image PC Routine Line Source

libifcoremd.dll 00007FFD5FCA3B58 Unknown Unknown Unknown

KERNELBASE.dll 00007FFDC015B933 Unknown Unknown Unknown

KERNEL32.DLL 00007FFDC15D7034 Unknown Unknown Unknown

ntdll.dll 00007FFDC2762651 Unknown Unknown Unknown

Solution:

pip install --upgrade scipy

Just run this in the terminal. Why it works is not clear to me.

Error Messages:

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.FloatTensor [544, 768]], which is output 0 of ViewBackward, is at version 1; expected version 0 instead.

Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

Solution:

The error occurred because, when building the network model, I wrote the residual sum as an in-place operation: x += y

In PyTorch, this modifies x in place even though autograd still needs its original value for the backward pass. Changing it to the out-of-place form fixes it: x = x + y
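The difference between the two spellings is ordinary Python semantics and can be seen without PyTorch. In the sketch below a plain list stands in for the tensor, and saved plays the role of the reference that autograd keeps for the backward pass; both are illustrative assumptions, not PyTorch internals.

```python
def residual_inplace(x, y):
    saved = x  # reference kept for later use
    x += y     # calls __iadd__: mutates the object that `saved` points to
    return x, saved


def residual_out_of_place(x, y):
    saved = x
    x = x + y  # calls __add__: builds a new object, `saved` is untouched
    return x, saved


out, saved = residual_inplace([1, 2], [3])
print(out is saved, saved)  # True [1, 2, 3]

out, saved = residual_out_of_place([1, 2], [3])
print(out is saved, saved)  # False [1, 2]
```

In the in-place case the saved value has silently changed, which is the situation the version check in the error message detects.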

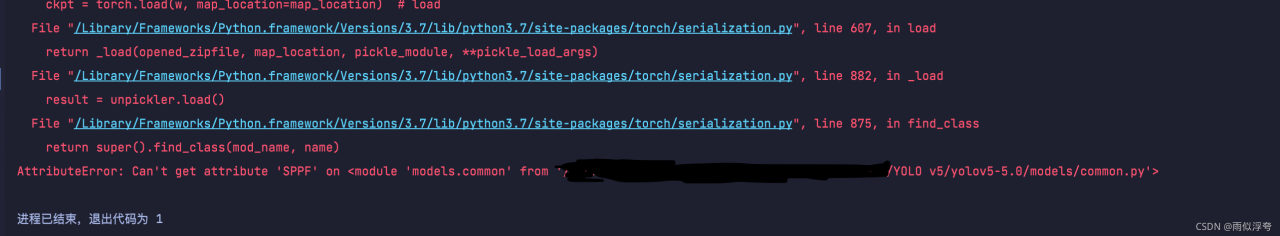

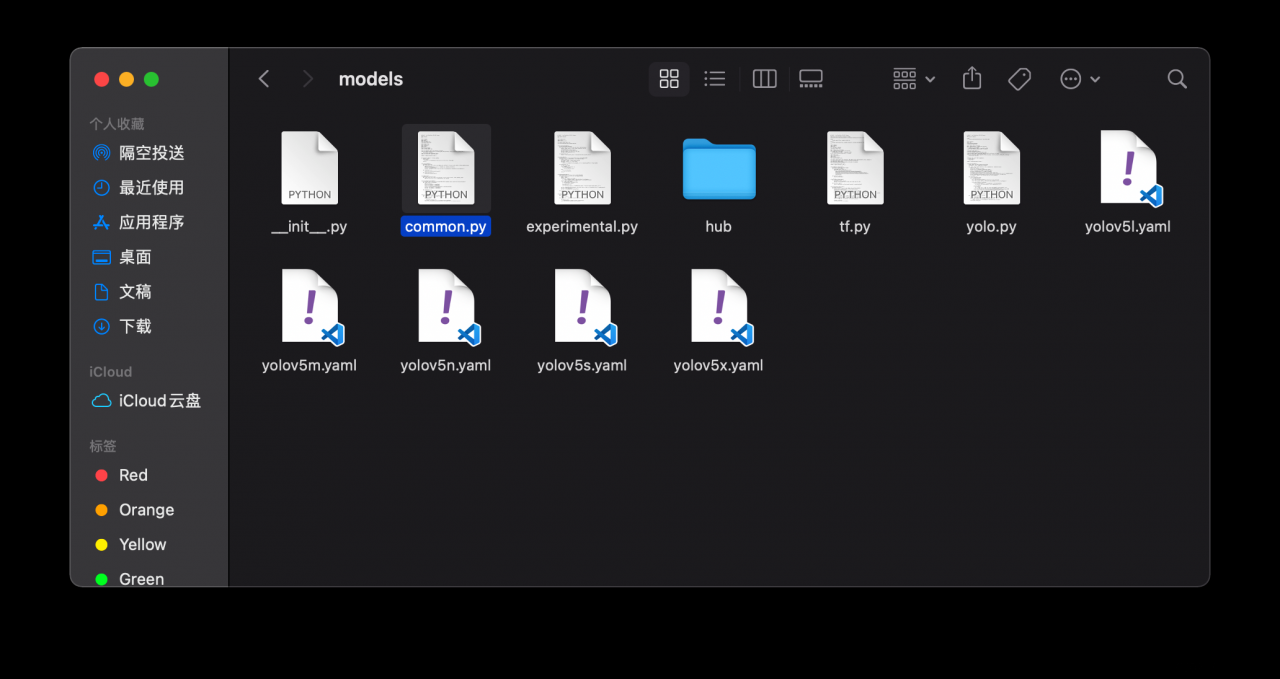

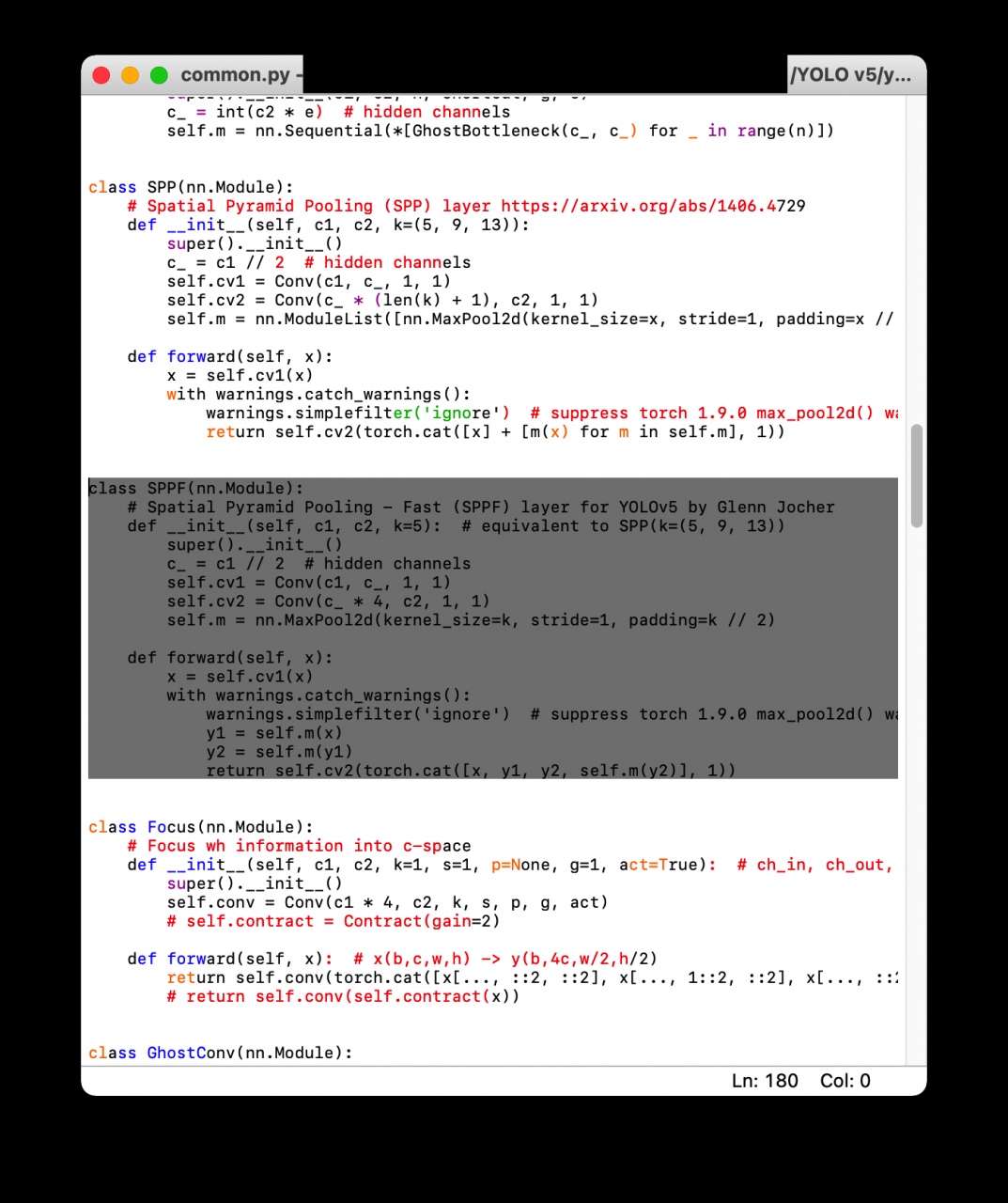

When running detect.py from yolov5, the following error appears: AttributeError: Can't get attribute 'SPPF' on <module 'models.common' from 'D:\yolov\yolov5-5.0\models\common.py'>

Solution:

Add the SPPF class to models/common.py:

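class SPPF(nn.Module):  # Spatial Pyramid Pooling - Fast, as in newer yolov5 releases
    def __init__(self, c1, c2, k=5):  # equivalent to SPP(k=(5, 9, 13))
        super().__init__()
        c_ = c1 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)  # Conv is already defined in common.py
        self.cv2 = Conv(c_ * 4, c2, 1, 1)
        self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

    def forward(self, x):
        x = self.cv1(x)
        y1 = self.m(x)
        y2 = self.m(y1)
        return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))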

Problem-solving:

Bitbake failed on the simplest recipe

1. Compilation error: ERROR: Execution of event handler 'sstate_eventhandler2' failed

After downloading the Yocto code, the following errors are reported when compiling:

$ bitbake core-image-minimal

Loading cache: 100% |##########################################################################################################| Time: 0:00:00

Loaded 1320 entries from dependency cache.

ERROR: Execution of event handler 'sstate_eventhandler2' failed

Traceback (most recent call last):

File "/home/some-user/projects/melp/poky/meta/classes/sstate.bbclass", line 1015, in sstate_eventhandler2(e=<bb.event.ReachableStamps object at 0x7fbc17f2e0f0>):

for l in lines:

> (stamp, manifest, workdir) = l.split()

if stamp not in stamps:

ValueError: not enough values to unpack (expected 3, got 1)

ERROR: Command execution failed: Traceback (most recent call last):

File "/home/some-user/projects/melp/poky/bitbake/lib/bb/command.py", line 101, in runAsyncCommand

self.cooker.updateCache()

File "/home/some-user/projects/melp/poky/bitbake/lib/bb/cooker.py", line 1658, in updateCache

bb.event.fire(event, self.databuilder.mcdata[mc])

File "/home/some-user/projects/melp/poky/bitbake/lib/bb/event.py", line 201, in fire

fire_class_handlers(event, d)

File "/home/some-user/projects/melp/poky/bitbake/lib/bb/event.py", line 124, in fire_class_handlers

execute_handler(name, handler, event, d)

File "/home/some-user/projects/melp/poky/bitbake/lib/bb/event.py", line 96, in execute_handler

ret = handler(event)

File "/home/some-user/projects/melp/poky/meta/classes/sstate.bbclass", line 1015, in sstate_eventhandler2

(stamp, manifest, workdir) = l.split()

ValueError: not enough values to unpack (expected 3, got 1)

It looks like a Python error. Does anyone know what the problem is? Am I using the wrong version?

The following is the output of python --version:

$ python --version

Python 2.7.12

2. How to solve it?

Delete the tmp and sstate-cache directories and try again; the build then succeeds.
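The traceback shows that one line read by sstate_eventhandler2 does not split into the three expected fields, which suggests a damaged file in the build state. As a rough sketch, lines of that kind can be located as follows; the function name and the sample content are hypothetical, and the file that is actually read at sstate.bbclass line 1015 is not named here.

```python
def find_malformed_lines(lines, expected_fields=3):
    """Return (line_number, text) for lines that do not split into expected_fields."""
    bad = []
    for number, line in enumerate(lines, start=1):
        if len(line.split()) != expected_fields:
            bad.append((number, line.rstrip("\n")))
    return bad


# Hypothetical file content: the second line is truncated.
sample = [
    "stamp-a manifest-a workdir-a\n",
    "stamp-b\n",
]
print(find_malformed_lines(sample))
```

An open file object can be passed in place of the list, since the function only iterates over lines.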

[SSL: CERTIFICATE_VERIFY_FAILED] problem when downloading video using you-get and ffmpeg

Since you-get --debug shows that this is a certificate verification problem, code that skips SSL certificate verification is added and run in PyCharm (not from the command line).

Modify url='website' and output_dir=r'save path' as needed.

import ssl
from you_get import common

# Ignore certificate validation issues
ssl._create_default_https_context = ssl._create_unverified_context

# Call any_download_playlist in you_get.common to download a collection
common.any_download_playlist(url='https://www.bilibili.com/video/BVXXX', stream_id='', info_only=False,
                             output_dir=r'F:\StudyLesson\YouGet', merge=True)

# Call any_download in you_get.common to download a single episode
common.any_download(url='https://www.bilibili.com/video/BVXXX?p=8', stream_id='',
                    info_only=False, output_dir=r'F:\StudyLesson\YouGet', merge=True)

So far, the video has been downloaded successfully.
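Because the assignment above disables certificate verification for the whole process, it may be preferable to limit it to the download call. The following sketch does this with a context manager; the name unverified_https is an assumption, and the you-get call is only indicated in a comment so that the block runs without the package installed.

```python
import contextlib
import ssl


@contextlib.contextmanager
def unverified_https():
    """Temporarily skip HTTPS certificate verification, then restore the default."""
    original = ssl._create_default_https_context
    ssl._create_default_https_context = ssl._create_unverified_context
    try:
        yield
    finally:
        ssl._create_default_https_context = original


with unverified_https():
    # The common.any_download(...) call from the code above would go here.
    context = ssl._create_default_https_context()
    print(context.verify_mode == ssl.CERT_NONE)
```

Once the with block ends, HTTPS requests made elsewhere in the program verify certificates again.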

record.

This error occurred in the last step of installing mujoco.

All the previous steps had been completed, including downloading mjkey, installing the VC++ build tools, and downloading mjpro150 and the corresponding version of mujoco-py. Many tutorials say to run pip install mujoco-py==1.50.1.68 as the last step, which is where the error in the title appears.

Run:

pip install -e <path>

<path> is the local mujoco-py directory. With this, the installation was successful.

Attached:

During the first import, mujoco-py is compiled first, and errors may be reported at this point; see, for example, the article "mujoco and mujoco_py installation pitfalls" on Code Farm Home.

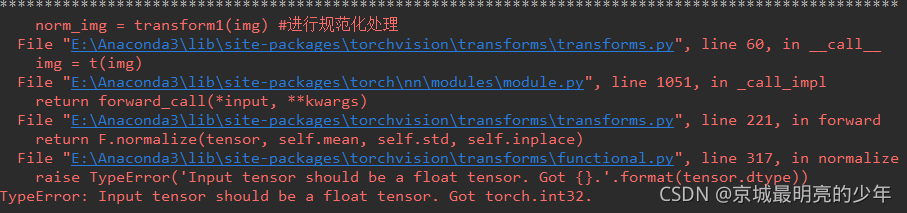

The following code reports an error when normalizing a tensor with torchvision:

from torchvision import transforms
import numpy as np
import torchvision
import torch

data = np.random.randint(0, 255, size=12)
img = data.reshape(2, 2, 3)
print(img)
print("*" * 100)

transform1 = transforms.Compose([
    transforms.ToTensor(),  # range [0, 255] -> [0.0, 1.0]
    transforms.Normalize(mean=(10, 10, 10), std=(1, 1, 1)),
])

# img = img.astype('float')
norm_img = transform1(img)
print(norm_img)

The fix is to uncomment the line img = img.astype('float'). It sets the element type to floating point, which Normalize requires, as the error message indicates.