Question:

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- Failed to upload code location: xxx/bom

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- Reason: Request failed authorization [HTTP Error]: There was a problem trying to POST https://xxx.com/api/scan/data/, response was 403 Forbidden.

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- An error occurred uploading a bdio file.

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- There was a problem: An error occurred uploading a bdio file.

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- Detect run failed: There was a problem: An error occurred uploading a bdio file.

15:03:23 2022-02-14 15:03:23 CST ERROR [main] --- There was a problem: An error occurred uploading a bdio file.

ROOT CAUSE:

Project-owner is not a role in Black Duck; it is an external reference to a project.

SOLUTION:

The 403 response is caused by the user-level and project-level roles assigned to the user.

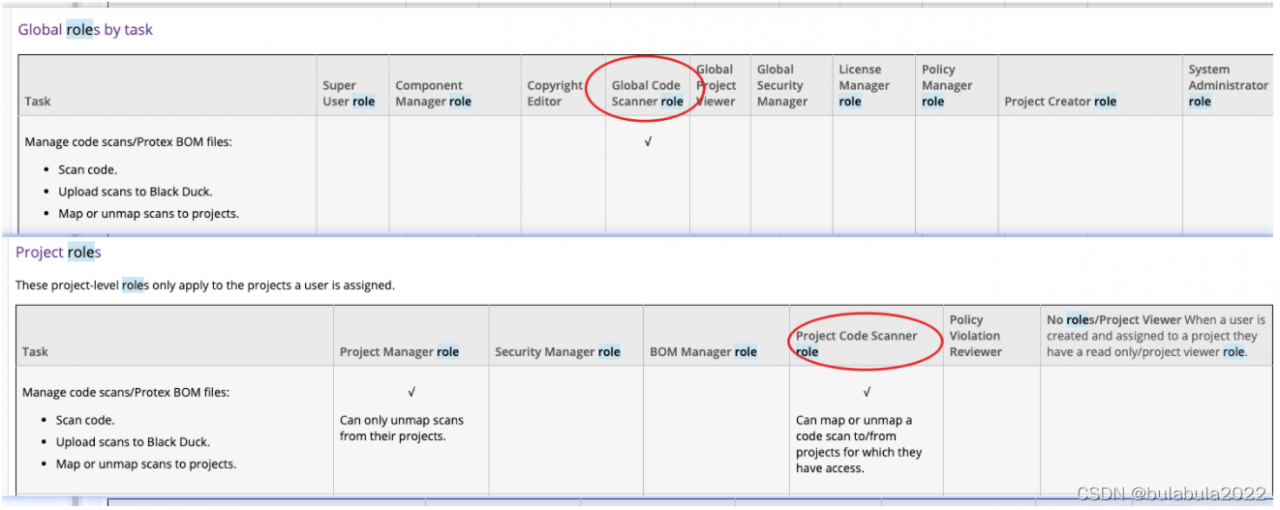

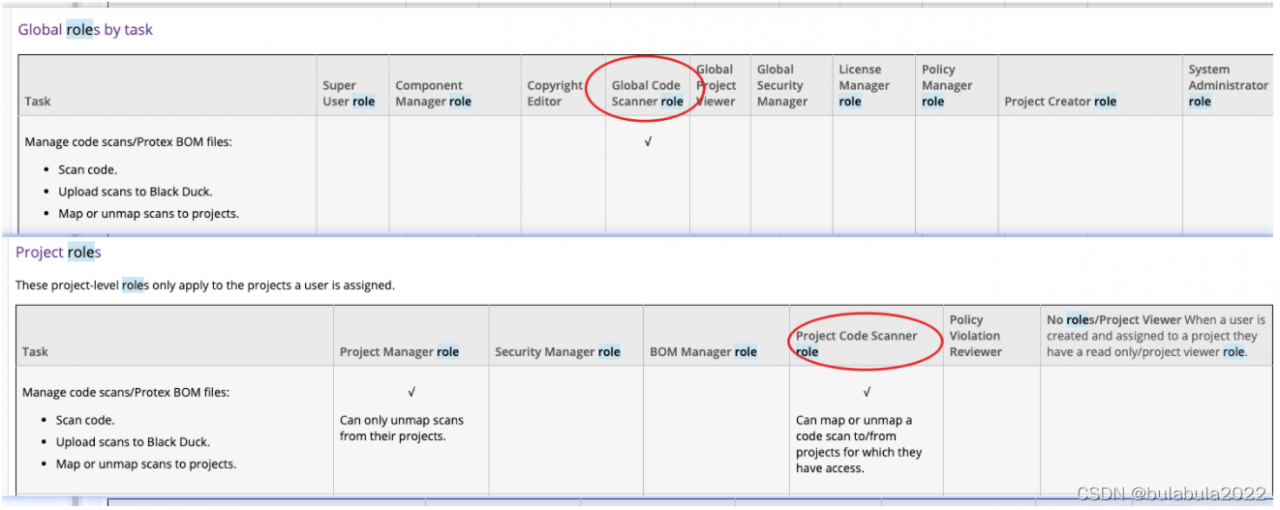

The user had the Project Code Scanner role assigned on the specific project, but this role does not allow creating new projects (only new project versions).

The user must have the Global Code Scanner role assigned in order to create new projects in Black Duck while scanning.

OR

The user can be given the Project Creator role, but must also be assigned to the specific project in order to run scans against the new project.

OR

If the user already belongs to a group that has the permissions above, make sure the scan does not set the property `detect.project.user.groups=blackduck.xxxx`. Because the group already exists, setting this property also results in a 403. Removing the property allows the scan to run.

OR

To upload scans with Detect, the user whose token is used in the Detect CLI must have either the Global Code Scanner role (global scope) or the Project Code Scanner role (project scope).

Refer to the attached screenshots and the role matrix section of the Black Duck user guide (/doc/Welcome.htm#users_and_groups/rolematrix.htm).
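For the `detect.project.user.groups` case described above, the following is a minimal sketch of how a build script could drop that property from the Detect arguments before launching the scan. It assumes the arguments are passed in the `--property=value` form; the function name `strip_user_groups` and the sample project name are hypothetical and not part of Detect. It only covers command-line arguments, so if the property is set somewhere else it has to be removed there instead.

```python
from typing import List

# Property named in this article; the helper around it is illustrative only.
GROUPS_PROPERTY = "detect.project.user.groups"


def strip_user_groups(args: List[str]) -> List[str]:
    """Return a copy of args without any --detect.project.user.groups=... entry.

    Assumes each Detect property is passed as a single "--name=value" argument.
    """
    prefix = "--" + GROUPS_PROPERTY + "="
    return [arg for arg in args if not arg.startswith(prefix)]


if __name__ == "__main__":
    # Sample arguments; the URL and group name mirror the placeholders in this
    # article, and the project name is a made-up example.
    detect_args = [
        "--blackduck.url=https://xxx.com",
        "--detect.project.name=demo-project",
        "--detect.project.user.groups=blackduck.xxxx",
    ]
    for arg in strip_user_groups(detect_args):
        print(arg)
```

The filtered list can then be handed to whatever wrapper launches Detect in the build. This only avoids the 403 described for the group property; the role requirements listed above still apply.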

NOTE:

Also ensure the user is the BOM manager of the project; this will also prevent the failure.

Product

Black Duck/Black Duck Hub