When installing mars3d, you also need to install the copy-webpack-plugin package and configure it in vue.config.js. With the configuration below, the build failed with: Error: [copy-webpack-plugin] patterns must be an array

The Node version in use was 12.2.0.

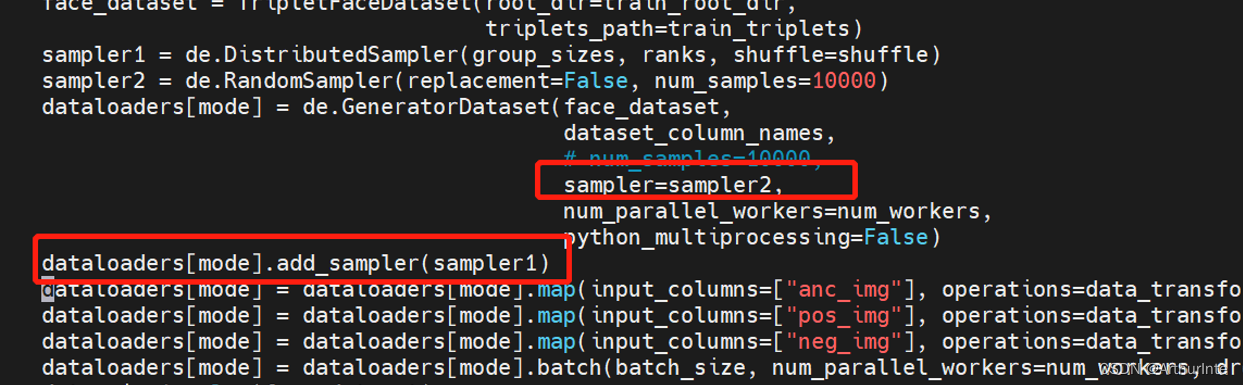

    const plugins = [
      // Base URL of the Cesium resource directory; Cesium needs it for resource loading, multithreading, etc.
      new webpack.DefinePlugin({
        CESIUM_BASE_URL: JSON.stringify(path.join(config.output.publicPath, cesiumRunPath))
      }),
      // Copy the Cesium resource directories into the build output
      // (some CopyWebpackPlugin versions do not support the `patterns` option)
      new CopyWebpackPlugin({
        patterns: [
          { from: path.join(cesiumSourcePath, 'Workers'), to: path.join(config.output.path, cesiumRunPath, 'Workers') },
          { from: path.join(cesiumSourcePath, 'Assets'), to: path.join(config.output.path, cesiumRunPath, 'Assets') },
          { from: path.join(cesiumSourcePath, 'ThirdParty'), to: path.join(config.output.path, cesiumRunPath, 'ThirdParty') },
          { from: path.join(cesiumSourcePath, 'Widgets'), to: path.join(config.output.path, cesiumRunPath, 'Widgets') }
        ]
      })
    ];

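The fix is to change the `new CopyWebpackPlugin` call so that it receives the pattern array directly instead of an options object with a `patterns` key: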

    const plugins = [
      // Base URL of the Cesium resource directory; Cesium needs it for resource loading, multithreading, etc.
      new webpack.DefinePlugin({
        CESIUM_BASE_URL: JSON.stringify(path.join(config.output.publicPath, cesiumRunPath))
      }),
      // Pass the pattern array directly, as the installed CopyWebpackPlugin version expects
      new CopyWebpackPlugin([
        { from: path.join(cesiumSourcePath, 'Workers'), to: path.join(config.output.path, cesiumRunPath, 'Workers') },
        { from: path.join(cesiumSourcePath, 'Assets'), to: path.join(config.output.path, cesiumRunPath, 'Assets') },
        { from: path.join(cesiumSourcePath, 'ThirdParty'), to: path.join(config.output.path, cesiumRunPath, 'ThirdParty') },
        { from: path.join(cesiumSourcePath, 'Widgets'), to: path.join(config.output.path, cesiumRunPath, 'Widgets') }
      ])
    ];

After this change, the project runs successfully.
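
The two call styles above correspond to different copy-webpack-plugin releases: as far as I know, version 6.0.0 switched the constructor from a bare array to an options object with a `patterns` key. If a project may be built with either, a small helper can pick the argument shape. This is only a sketch: `copyPluginArgs` is a hypothetical name, not part of the plugin, and the version string is assumed to be supplied by the caller.

```javascript
// Hypothetical helper (not part of copy-webpack-plugin): returns the
// constructor argument in the shape the given plugin version expects.
// Assumption: versions >= 6 take { patterns: [...] }, older ones take [...].
function copyPluginArgs(version, patterns) {
  const major = parseInt(String(version).split('.')[0], 10);
  if (Number.isNaN(major)) {
    throw new Error('Unrecognized version string: ' + version);
  }
  return major >= 6 ? { patterns: patterns } : patterns;
}
```

In vue.config.js this could be used as `new CopyWebpackPlugin(copyPluginArgs(version, patterns))`, where `version` might be read from the plugin's own package.json (an assumption about how the package is laid out in node_modules).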