TensorRT reports an error when running inference with an engine

Question

After building the yolov5s model, an error occurred when trying to run inference with the TensorRT (TRT) engine:

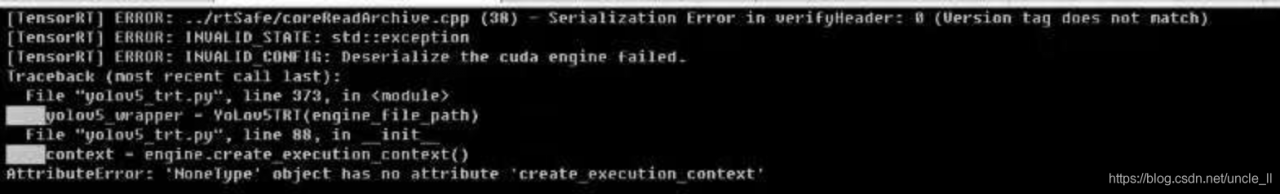

[TensorRT] ERROR: ../rtSafe/coreReadArchive.cpp (38) - Serialization Error in verifyHeader: 0 (Version tag does not match)

[TensorRT] ERROR: INVALID_STATE: std::exception

[TensorRT] ERROR: INVALID_CONFIG: Deserialize the cuda engine failed.


Cause of the error

The TensorRT version used to build (serialize) the engine differs from the TensorRT version used to deserialize it for inference. A serialized engine can only be loaded by the same TensorRT version that built it, so the two must match.
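One way to catch this mismatch early is to record the TensorRT version next to the engine file at build time and compare it before deserializing. The following is only a minimal sketch under stated assumptions: the `.version.json` sidecar file and both function names are hypothetical conventions, not part of TensorRT. With the Python bindings installed, the version string passed in would typically come from `tensorrt.__version__`.

```python
import json
from pathlib import Path


def _tag_path(engine_path):
    # Hypothetical convention: store the tag as "<engine file>.version.json".
    return Path(str(engine_path) + ".version.json")


def write_version_tag(engine_path, trt_version):
    """Record the TensorRT version that built the engine (call after building)."""
    tag = _tag_path(engine_path)
    tag.write_text(json.dumps({"tensorrt": trt_version}))
    return tag


def check_version_tag(engine_path, runtime_version):
    """Raise RuntimeError if the engine was built with a different TensorRT version."""
    built_with = json.loads(_tag_path(engine_path).read_text())["tensorrt"]
    if built_with != runtime_version:
        raise RuntimeError(
            f"Engine was built with TensorRT {built_with}, "
            f"but the runtime is TensorRT {runtime_version}"
        )
    return built_with
```

Calling `check_version_tag("yolov5s.engine", tensorrt.__version__)` before deserializing would then fail with a readable message instead of the `verifyHeader` error above. The engine file name here is only an example.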

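Solution

Check the TensorRT version used at each stage (building the engine and running inference) and make sure they are the same. To see which TensorRT libraries the compiled yolo binary is linked against, list its dynamic library dependencies: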

ldd yolo

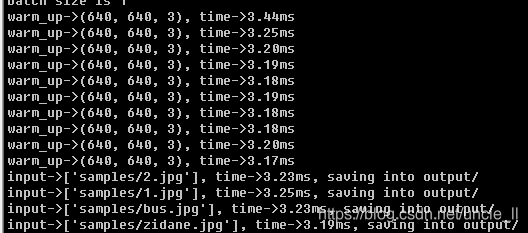
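If the `ldd` output is long, the TensorRT entries can be filtered out with a small script. This is a rough sketch that assumes the TensorRT shared libraries follow the usual `libnvinfer*.so.<version>` naming. The helper names are illustrative only.

```python
import re
import subprocess

# Matches ldd lines such as:
#   libnvinfer.so.7 => /usr/lib/x86_64-linux-gnu/libnvinfer.so.7 (0x00007f...)
#   libnvinfer_plugin.so.7 => not found
_NVINFER_LINE = re.compile(
    r"^\s*(libnvinfer[\w-]*\.so(?:\.[\d.]+)?)\s*=>\s*(.*?)(?:\s+\(0x[0-9a-fA-F]+\))?\s*$"
)


def find_tensorrt_libs(ldd_output):
    """Return (soname, resolved path) pairs for TensorRT libraries in ldd output."""
    libs = []
    for line in ldd_output.splitlines():
        match = _NVINFER_LINE.match(line)
        if match:
            libs.append((match.group(1), match.group(2)))
    return libs


def tensorrt_libs_of(binary_path):
    """Run ldd on a binary and return the TensorRT libraries it links against."""
    result = subprocess.run(
        ["ldd", binary_path], capture_output=True, text=True, check=True
    )
    return find_tensorrt_libs(result.stdout)
```

For example, `tensorrt_libs_of("./yolo")` lists the `libnvinfer` libraries the binary resolves to. The version suffix in the soname (the major version) and the resolved path show which TensorRT installation the binary actually loads, which can then be compared with the version used to build the engine.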

After fixing the version mismatch, the engine loads and runs normally, and inference is very fast.

References

https://forums.developer.nvidia.com/t/tensorrt-error-rtsafe-corereadarchive-cpp-31-serialization-error-in-verifyheader-0-magic-tag-does-not-match/81872/3

https://github.com/wang-xinyu/tensorrtx.git