
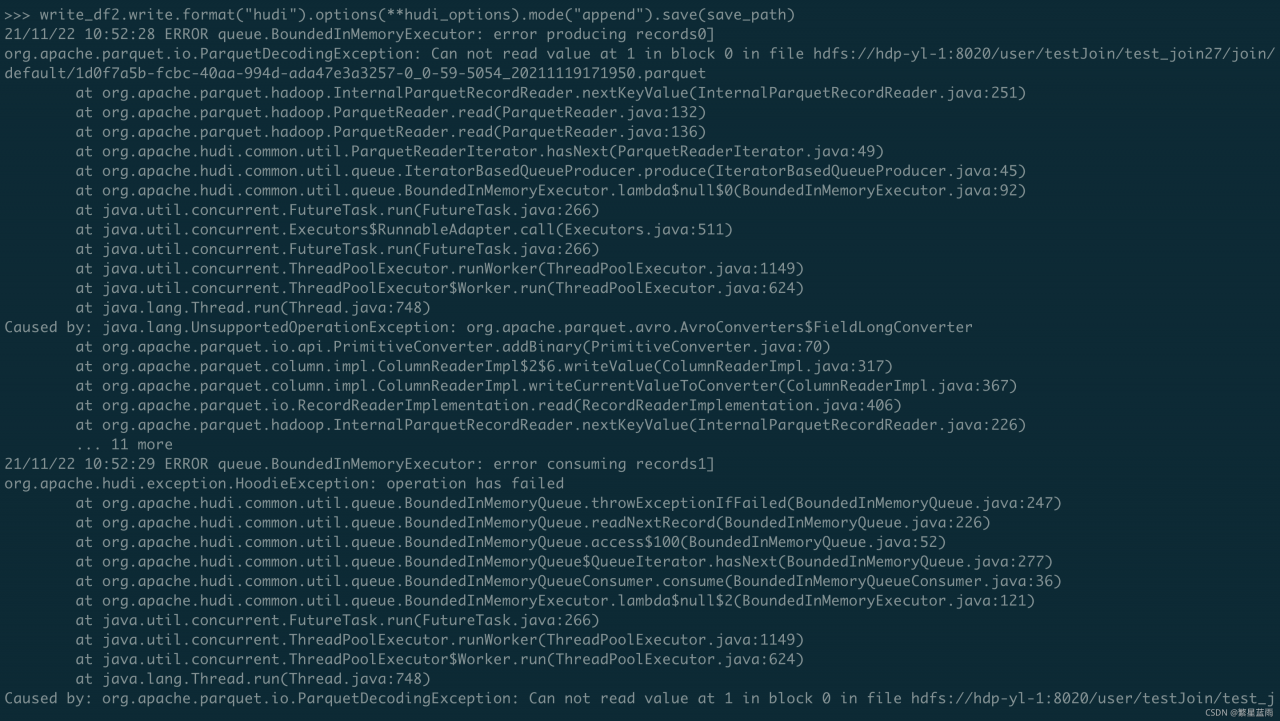

1 Reproducing the error

```text
ERROR queue.BoundedInMemoryExecutor: error producing records

org.apache.parquet.io.ParquetDecodingException: Can not read value at 1 in block 0 in file hdfs://hdp-yl-1:8020/user/testJoin/test_join27/join/default/1d0f7a5b-fcbc-40aa-994d-ada47e3a3257-0_0-59-5054_20211119171950.parquet
```

2 Cause and solution

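The error occurs because at least one field in the data being written has a different data type from the same field in the destination table. During the write, the existing Parquet file of the destination table is read back using the schema of the incoming data, and the column whose type does not match cannot be decoded, which produces the `ParquetDecodingException` above.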
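To see which columns conflict before writing, the two schemas can be compared. The following is a minimal sketch, not part of the original fix: `find_type_mismatches` is a hypothetical helper, and it assumes both schemas are given as lists of `(name, type)` pairs, which is the shape PySpark's `DataFrame.dtypes` returns. The example columns and types are made up for illustration (here `superior_emp_id` is assumed to be `int` in the new data and `string` in the table).

```python
from typing import Dict, List, Tuple


def find_type_mismatches(
    source_dtypes: List[Tuple[str, str]],
    target_dtypes: List[Tuple[str, str]],
) -> Dict[str, Tuple[str, str]]:
    """Hypothetical helper: return {column: (source_type, target_type)} for
    columns present in both schemas (exact name match) whose types differ."""
    target = dict(target_dtypes)
    mismatches = {}
    for name, src_type in source_dtypes:
        tgt_type = target.get(name)
        # Columns missing from the destination table are ignored;
        # only type conflicts on shared columns are reported.
        if tgt_type is not None and tgt_type != src_type:
            mismatches[name] = (src_type, tgt_type)
    return mismatches


if __name__ == "__main__":
    # Illustrative values; in practice these could be write_df2.dtypes and
    # the dtypes of a DataFrame read from the destination table.
    source = [("emp_id", "int"), ("superior_emp_id", "int"), ("name", "string")]
    target = [("emp_id", "int"), ("superior_emp_id", "string"), ("name", "string")]
    print(find_type_mismatches(source, target))
    # {'superior_emp_id': ('int', 'string')}
```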

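The fix is to cast the affected columns of the data being written to the types used by the destination table. In the following example, `superior_emp_id` is cast to `string` to match the destination table: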

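```python
from pyspark.sql.functions import col

# Cast superior_emp_id to string so it matches the type in the destination table
write_df2 = write_df2.withColumn("superior_emp_id", col("superior_emp_id").cast("string"))
```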
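When several columns differ, casting them one at a time with `withColumn` becomes repetitive. One possible generalization, sketched here as an assumption rather than the author's method, builds a Spark SQL `CAST` expression for every column whose type differs from the destination table. `build_cast_exprs` is a hypothetical helper; its inputs again have the shape of PySpark's `DataFrame.dtypes`.

```python
from typing import List, Tuple


def build_cast_exprs(
    source_dtypes: List[Tuple[str, str]],
    target_dtypes: List[Tuple[str, str]],
) -> List[str]:
    """Hypothetical helper: return one Spark SQL select expression per source
    column, casting it to the destination type when the types differ."""
    target = dict(target_dtypes)
    exprs = []
    for name, src_type in source_dtypes:
        # Backtick-quote the name (doubling any embedded backticks)
        quoted = "`" + name.replace("`", "``") + "`"
        tgt_type = target.get(name)
        if tgt_type is None or tgt_type == src_type:
            # Keep the column unchanged if the type already matches
            # or the column does not exist in the destination table.
            exprs.append(quoted)
        else:
            exprs.append(f"CAST({quoted} AS {tgt_type}) AS {quoted}")
    return exprs


if __name__ == "__main__":
    source = [("emp_id", "int"), ("superior_emp_id", "int")]
    target = [("emp_id", "int"), ("superior_emp_id", "string")]
    print(build_cast_exprs(source, target))
    # ['`emp_id`', 'CAST(`superior_emp_id` AS string) AS `superior_emp_id`']
```

With a Spark session, the result could be applied through `DataFrame.selectExpr`, for example `write_df2 = write_df2.selectExpr(*build_cast_exprs(write_df2.dtypes, target_df.dtypes))`, where `target_df` is a hypothetical DataFrame read from the destination table. Columns keep the order of the data being written. A cast that cannot convert a value (for example, a non-numeric string cast to `int`) yields `null` when Spark's ANSI mode is disabled, so it is worth checking the converted data before writing.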