Broker Load statement

```sql
LOAD LABEL gaofeng_broker_load_HDD
(
    DATA INFILE("hdfs://eoop/user/coue_data/hive_db/couta_test/ader_lal_offline_0813_1/*")
    INTO TABLE ads_user
)
WITH BROKER "hdfs_broker"
(
    -- HDFS HA and Kerberos settings passed to the broker
    "dfs.nameservices" = "eadhadoop",
    "dfs.ha.namenodes.eadhadoop" = "nn1,nn2",
    "dfs.namenode.rpc-address.eadhadoop.nn1" = "h4:8000",
    "dfs.namenode.rpc-address.eadhadoop.nn2" = "z7:8000",
    "dfs.client.failover.proxy.provider.eadhadoop" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    "hadoop.security.authentication" = "kerberos",
    "kerberos_principal" = "ou3.CN",
    "kerberos_keytab_content" = "BQ8uMTYzLkNPTQALY291cnNlXgAAAAFfVyLbAQABAAgCtp0qmxxP8QAAAAE="
);
```

The job is cancelled with the following error:

`type:ETL_QUALITY_UNSATISFIED; msg:quality not good enough to cancel`

Solution:

This message is only a summary. The actual cause has to be looked up in the job's error details.

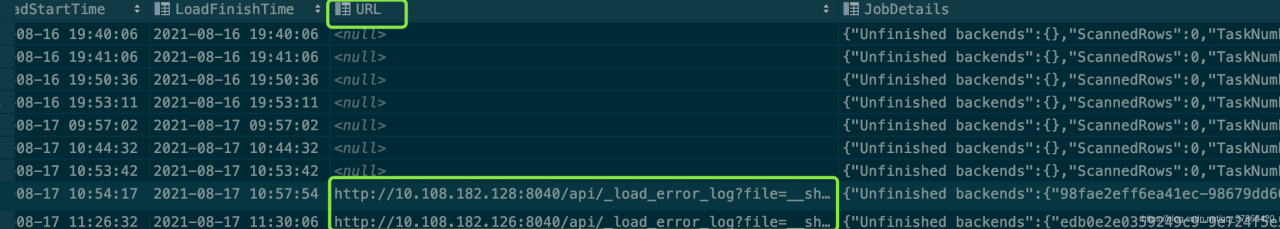

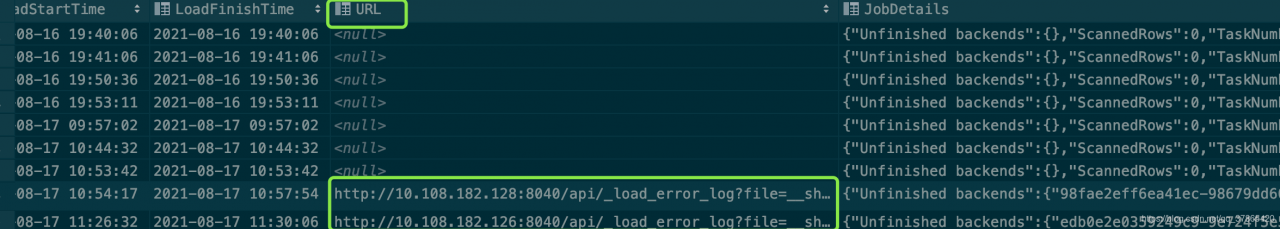

Run `SHOW LOAD` to find the `URL` field of the Broker Load job, then view the error details with:

`SHOW LOAD WARNINGS ON '{URL}';`

Alternatively, open that URL directly in a browser:

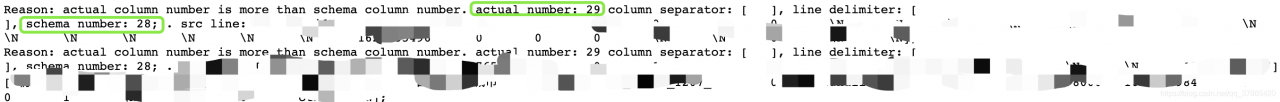

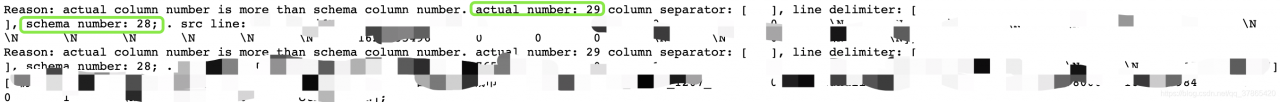
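For this job, the two lookup steps can be run back to back. This is a minimal sketch, assuming the statements are executed in the database that owns the job; `{URL}` is a placeholder for the value copied from the `URL` column and is deliberately not filled in here.

```sql
-- Step 1: find the job by its label and note the State, ErrorMsg and URL columns.
SHOW LOAD WHERE LABEL = "gaofeng_broker_load_HDD";

-- Step 2: list the rejected rows together with the reason for each one.
-- Replace {URL} with the value from the URL column returned by step 1.
SHOW LOAD WARNINGS ON '{URL}';
```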

The error details point to problems such as an inconsistent number of fields. The root cause is that some rows in the files being imported have a different number of fields than the target table has columns, or that a value in some rows exceeds the maximum length of the corresponding table column. These rows fail the data quality check, so either the data or the table definition has to be adjusted accordingly.
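To compare a rejected row against the table definition, it helps to check the target table's column count, types and length limits first. A sketch, assuming it runs in the database that contains `ads_user`:

```sql
-- Lists every column of ads_user with its type, e.g. VARCHAR(n) length limits.
DESC ads_user;

-- Shows the full table definition, including key and column declarations.
SHOW CREATE TABLE ads_user;
```

Counting the columns returned by `DESC` and comparing that count with the number of separated fields in a rejected row from the warnings output usually shows which of the two causes applies.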

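If you want to ignore these erroneous rows instead, set the job property `"max_filter_ratio" = "1"` in a `PROPERTIES` clause. A value of 1 tolerates any proportion of filtered rows, so the job succeeds and the bad rows are skipped rather than loaded: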

```sql
LOAD LABEL gaofeng_broker_load_HDD
(
    DATA INFILE("hdfs://eoop/user/coue_data/hive_db/couta_test/ader_lal_offline_0813_1/*")
    INTO TABLE ads_user
)
WITH BROKER "hdfs_broker"
(
    "dfs.nameservices" = "eadhadoop",
    "dfs.ha.namenodes.eadhadoop" = "nn1,nn2",
    "dfs.namenode.rpc-address.eadhadoop.nn1" = "h4:8000",
    "dfs.namenode.rpc-address.eadhadoop.nn2" = "z7:8000",
    "dfs.client.failover.proxy.provider.eadhadoop" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    "hadoop.security.authentication" = "kerberos",
    "kerberos_principal" = "ou3.CN",
    "kerberos_keytab_content" = "BQ8uMTYzLkNPTQALY291cnNlXgAAAAFfVyLbAQABAAgCtp0qmxxP8QAAAAE="
)
-- Tolerate any proportion of rows filtered out by the quality check
PROPERTIES
(
    "max_filter_ratio" = "1"
);
```
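The pass/fail decision behind `max_filter_ratio` can be written as a simple ratio check. This is a sketch of the rule as documented for Apache Doris, where the default is 0 (no filtered rows tolerated) and rows dropped by a `WHERE` condition are not counted; in Doris, `SHOW LOAD` reports the two counts in its `EtlInfo` column as `dpp.abnorm.ALL` (filtered) and `dpp.norm.ALL` (loaded). The row counts in the worked example are hypothetical.

```latex
% Job fails with ETL_QUALITY_UNSATISFIED when the filtered share exceeds the threshold
\text{fail} \iff \frac{N_{\text{filtered}}}{N_{\text{filtered}} + N_{\text{loaded}}} > \texttt{max\_filter\_ratio}

% Hypothetical example: N_filtered = 2000, N_loaded = 998000
\frac{2000}{2000 + 998000} = 0.002
% max_filter_ratio = 0    (default): 0.002 > 0     -> job fails
% max_filter_ratio = 0.01         : 0.002 \le 0.01 -> job succeeds
% max_filter_ratio = 1            : the ratio never exceeds 1 -> job always succeeds
```

Since a value of 1 can never be exceeded, a smaller threshold such as 0.01 still lets a handful of bad rows through while failing the job if a large part of the file is malformed.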