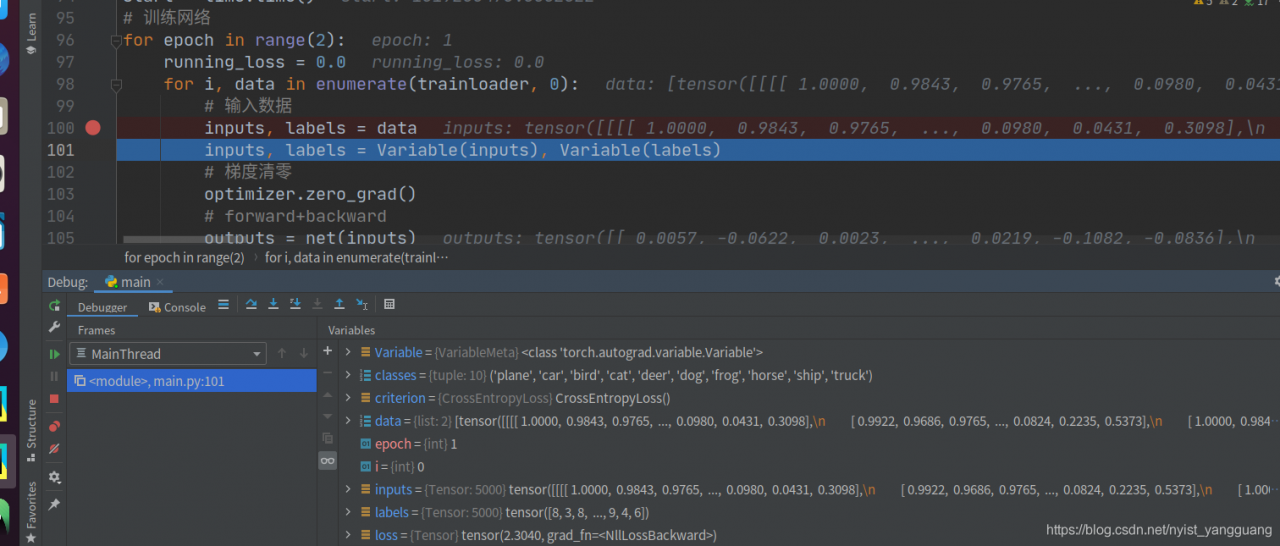

The `num_workers` parameter sets how many subprocesses the DataLoader uses for data loading; 0 means the data is loaded in the main process.
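The minimal sketch below shows where `num_workers` goes. The toy dataset and the `load_batches` function are illustrative assumptions, not code from this post. With `num_workers=0`, everything runs in the main process, which is the setting this post ends up recommending.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def load_batches(num_workers: int) -> list:
    # Toy dataset: the integers 0..9 (an illustrative assumption).
    dataset = TensorDataset(torch.arange(10))
    # num_workers=0: batches are loaded in the main process.
    # num_workers=N>0: N worker subprocesses load batches in the background.
    loader = DataLoader(dataset, batch_size=4, shuffle=False, num_workers=num_workers)
    batches = []
    for (batch,) in loader:  # each item is a 1-element list from TensorDataset
        batches.append(batch)
    return batches


if __name__ == "__main__":
    # The __main__ guard matters when num_workers > 0 on platforms that
    # start workers with "spawn" (e.g. Windows and macOS).
    for batch in load_batches(num_workers=2):
        print(batch.tolist())
```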

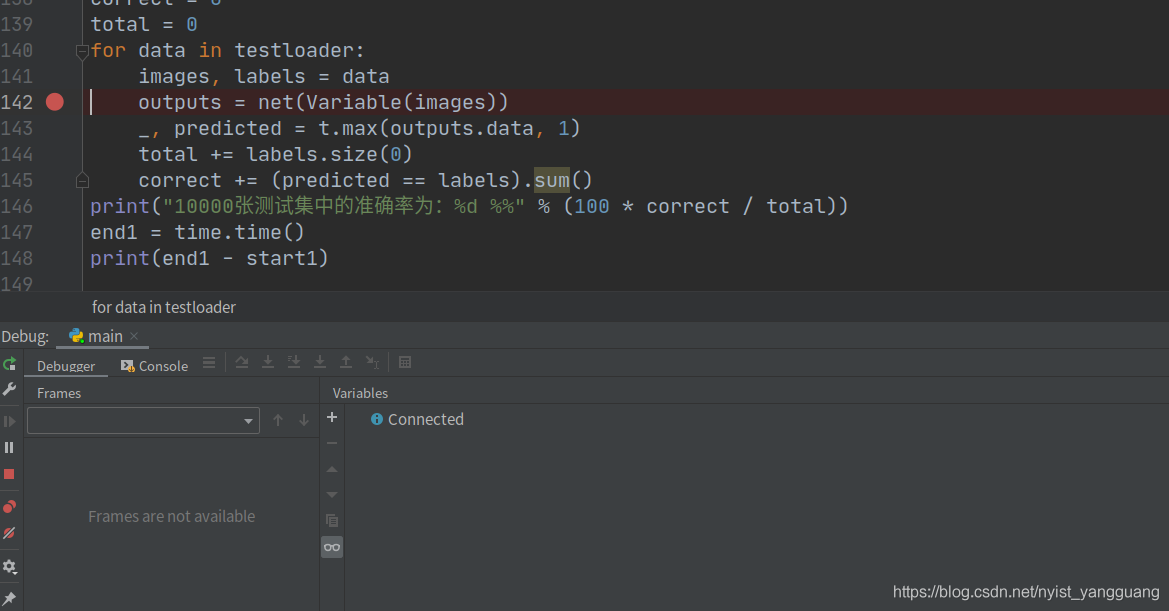

When `num_workers` is nonzero, the for loop hangs at this point.

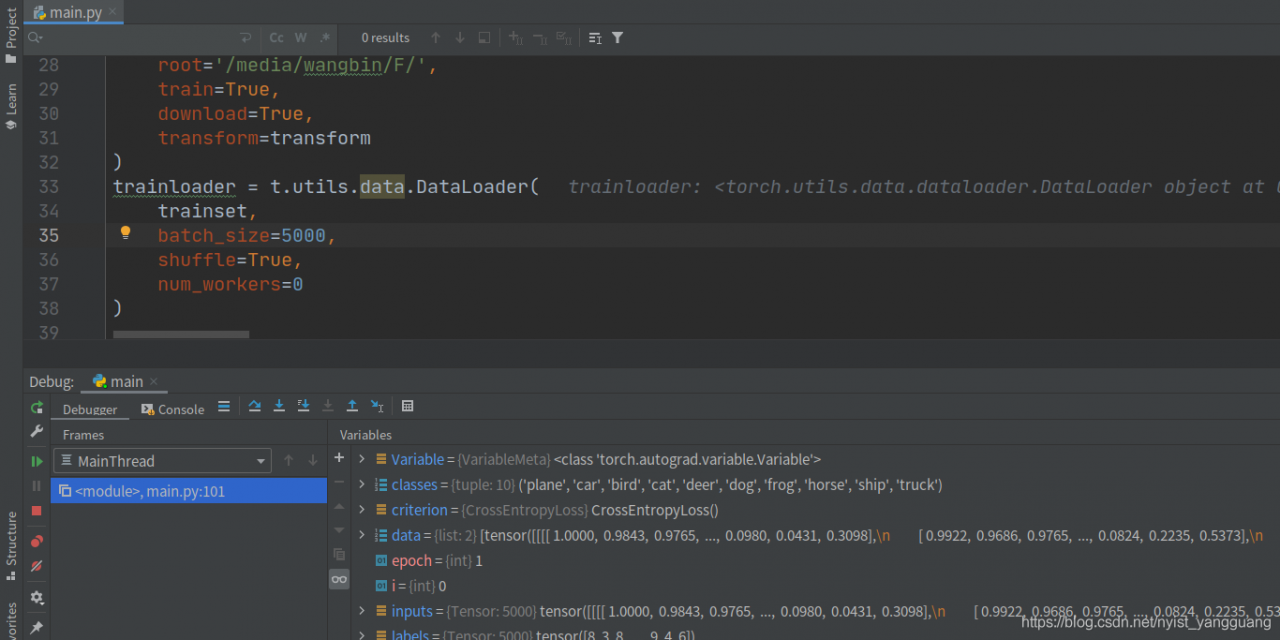

After changing it to 0, the loop runs normally.

In short: if the loop over the DataLoader hangs, set its `num_workers` parameter to 0.
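If you would rather not edit the value by hand each time you switch between debugging and normal runs, one option is to choose it at runtime. This sketch assumes the hang only happens while a debugger is attached. `safe_num_workers` is a hypothetical helper, not part of PyTorch. It uses `sys.gettrace()` as a heuristic: that catches trace-based debuggers such as pdb, but it may miss debuggers that do not install a Python trace function.

```python
import sys


def safe_num_workers(requested: int) -> int:
    """Hypothetical helper: return 0 when a tracer appears active, else `requested`."""
    if requested < 0:
        raise ValueError("num_workers must be >= 0")
    # Heuristic (assumption): sys.gettrace() is non-None when a Python-level
    # trace function is installed, as pdb and many IDE debuggers do.
    debugging = sys.gettrace() is not None
    return 0 if debugging else requested
```

Usage would then be, for example, `DataLoader(dataset, batch_size=4, num_workers=safe_num_workers(4))`. The result is multi-process loading in normal runs and single-process loading under a debugger that the heuristic detects.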

Resources

Debugger freezes stepping forward when using torch with workers (multiprocessing)

Zhihu – What is the principle behind the batch and num_workers parameters of the torch DataLoader? Principle analysis

CSDN – Hangs, stalls, and other problems encountered during PyTorch training (recommended)

Solution to the DataLoader hanging when using Python

DataLoader: a bug when num_workers > 0

Solving problems with the PyTorch DataLoader num_workers parameter