Fresco: Facebook's image loading library for Android

Fresco is a powerful image loading component.

Fresco includes a module called the image pipeline. It loads images from the network, the local file system, and local resources. To save as much storage and CPU time as possible, it uses a three-level cache (two levels in memory, one on disk).

Fresco also includes a module called Drawees, which makes it easy to show a placeholder while an image loads. When the image is no longer displayed on screen, its memory is released promptly.

Fresco supports Android 2.3 (API level 9) and above.

Memory management

A decompressed image, that is, a Bitmap in Android, takes up a lot of memory. Heavy memory use inevitably leads to more frequent garbage collection (GC). On Android versions below 5.0, GC pauses make the UI stutter.

On systems below 5.0, Fresco places images in a special memory region. When an image is no longer displayed, the memory it occupies is released automatically. This makes the app smoother and reduces out-of-memory (OOM) errors caused by image memory use.

Fresco does just as well on low-end machines, so you don’t have to think twice about how much image memory you’re using.

Progressive image presentation

The progressive JPEG format has been popular for several years. A progressive image first shows a rough outline and then becomes gradually sharper as the download continues. This is a great benefit on mobile devices, especially on slow networks, and gives a better user experience.

Android's own image library does not support this format, but Fresco does. As usual, you only need to provide the URI of the image, and Fresco handles the rest.

Displaying images quickly and efficiently on Android devices is very important. Over the past few years we have run into many problems storing images efficiently. Images are large, but phone memory is small. The R, G, B, and alpha channels of each pixel take 4 bytes in total. On a 480 × 800 screen, a single full-screen image occupies about 1.5 MB of memory. Phones usually have little memory, and Android devices in particular must divide it among many apps. On some devices the Facebook app is allocated only 16 MB, so a single image takes up almost one tenth of it.
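The arithmetic above can be checked with a few lines of Java. This is only a sketch: the class and method names (`BitmapMemory`, `decodedBytes`) are made up for illustration, and it assumes an uncompressed image at 4 bytes per pixel, as described above.

```java
// Rough memory estimate for an uncompressed image at 4 bytes per pixel.
final class BitmapMemory {
    static final int BYTES_PER_PIXEL = 4; // one byte each for R, G, B and alpha

    // Returns the decoded size in bytes of a width x height image.
    static long decodedBytes(int width, int height) {
        return (long) width * height * BYTES_PER_PIXEL;
    }

    public static void main(String[] args) {
        long bytes = decodedBytes(480, 800);        // 480 * 800 * 4 = 1,536,000 bytes
        long heapLimit = 16L * 1024 * 1024;         // the 16 MB per-app limit from the text
        System.out.println(bytes + " bytes for one full-screen image");
        System.out.println("fraction of a 16 MB heap: " + (double) bytes / heapLimit);
    }
}
```

1,536,000 bytes is roughly 1.5 MB, a little over 9% of a 16 MB heap, which matches the "one tenth" figure.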

What happens when your app runs out of memory? It crashes, of course. We developed a library to solve this problem and called it Fresco. It manages images and the memory they use, so the app no longer crashes for this reason.

Memory area

To understand what Facebook did, we need to understand the different memory heaps available on Android. The Java heap of every Android app is strictly limited in size. Every object created with Java's new operator lives on this heap, which is a relatively safe area of memory. The heap is garbage collected, so when the app stops using a piece of memory, the system reclaims it automatically.

Unfortunately, garbage collection itself is the problem. For anything beyond a basic collection, Android must pause the application completely while the collector runs. This is one of the most common reasons an app appears to freeze or briefly stop responding. It frustrates users, who may anxiously swipe the screen or tap buttons, yet the app can only make them wait until it returns to normal.

In contrast, the native heap is the one used by the new operator in C++. Much more memory is available there: an app is limited only by the physical memory of the device, and there is no garbage collector. However, the C++ programmer must free every allocation explicitly, otherwise memory leaks and the program eventually crashes.

Android has another memory region called ashmem. It behaves much like the native heap, but with additional system calls. Android can "unpin" a region of ashmem instead of freeing it. This is a lazy form of release: unpinned memory is actually freed only when the system really needs more memory. When Android later "pins" the region again, the old data is still there as long as the memory was not freed in the meantime.
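The pin and unpin behaviour can be pictured with a toy model in plain Java. This is not the ashmem API and makes no real system calls; `PinnableRegions` and its methods are hypothetical names, and the model only assumes the semantics described above: unpinned regions survive until memory pressure purges them.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of lazy freeing: an unpinned region keeps its data
// until the (simulated) system needs memory and purges it.
final class PinnableRegions {
    private static final class Region {
        final byte[] bytes;
        boolean pinned = true; // regions start out pinned
        Region(byte[] bytes) { this.bytes = bytes; }
    }

    private final Map<String, Region> regions = new HashMap<>();

    void allocate(String name, byte[] bytes) {
        regions.put(name, new Region(bytes));
    }

    // Marks the region as reclaimable without freeing it.
    void unpin(String name) {
        Region r = regions.get(name);
        if (r != null) r.pinned = false;
    }

    // Pins the region again; returns true if the old data is still there.
    boolean pin(String name) {
        Region r = regions.get(name);
        if (r == null) return false; // the region was purged while unpinned
        r.pinned = true;
        return true;
    }

    // Simulates the system running low on memory: only unpinned regions are freed.
    void onMemoryPressure() {
        regions.values().removeIf(r -> !r.pinned);
    }

    public static void main(String[] args) {
        PinnableRegions ashmem = new PinnableRegions();
        ashmem.allocate("image", new byte[1024]);
        ashmem.unpin("image");
        System.out.println(ashmem.pin("image")); // true: no pressure occurred, data survived
        ashmem.unpin("image");
        ashmem.onMemoryPressure();
        System.out.println(ashmem.pin("image")); // false: the region was purged
    }
}
```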

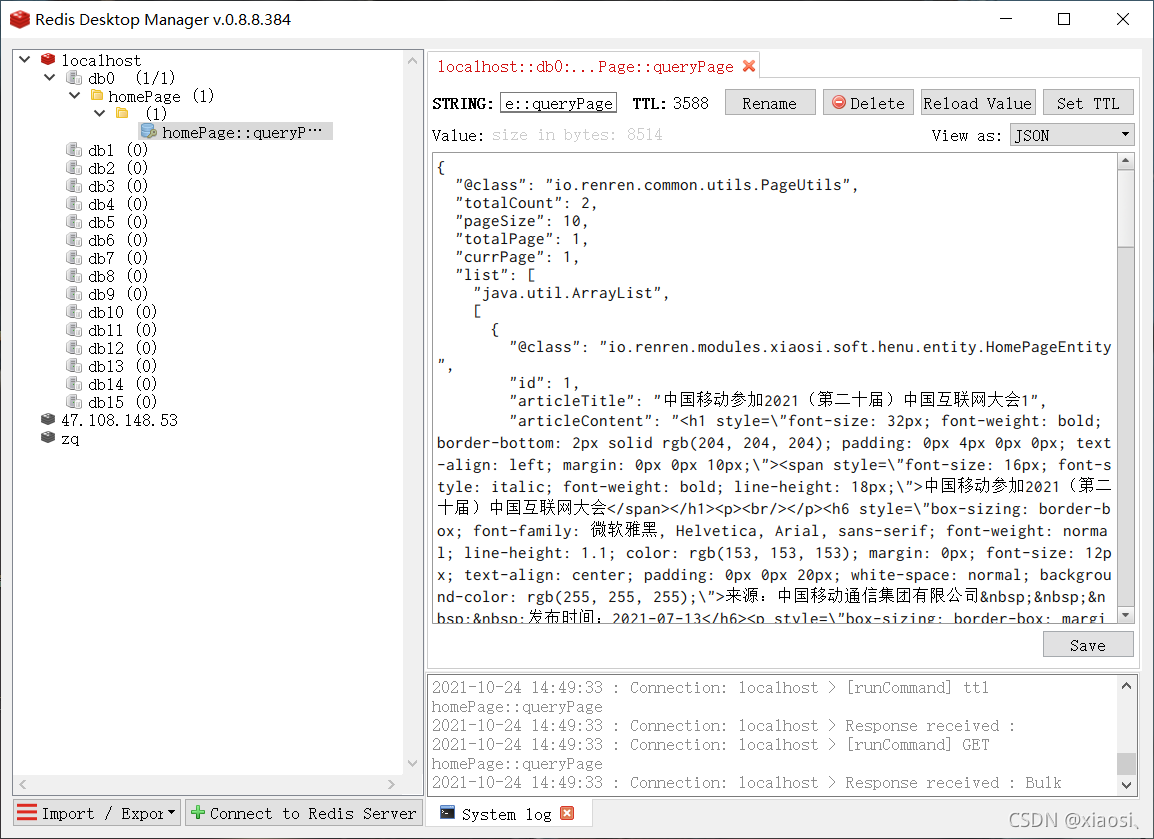

Three-level cache

1. Bitmap cache

The bitmap cache stores Bitmap objects, which can be used immediately for display or post-processing.

On systems below 5.0, the bitmap cache lives in ashmem, so creating and releasing Bitmap objects does not trigger GC, and fewer collections make the app run more smoothly.

On Android 5.0 and above, by contrast, memory management is greatly improved, so the bitmap cache lives directly on the Java heap.

When the app goes into the background, this cache is cleared.

2. Memory cache of undecoded images

This cache stores images in their original compressed format. Images retrieved from it must be decoded before use.

If any resizing, rotation, or WebP transcoding is required, it is done before decoding.

This cache is also cleared when the app goes into the background.

3. File cache

Like the undecoded memory cache, the file cache stores undecoded images in their original compressed format, which must also be decoded before use.

Unlike the memory caches, its contents are not cleared when the app goes into the background, or even when the device is turned off. Users can clear the cache at any time from the system settings menu.
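The lookup order across the three levels can be sketched as follows. This is a simplified model, not Fresco's image pipeline: `ThreeLevelLookup`, `decode`, and `fetch` are hypothetical names, plain maps stand in for the caches, and a string stands in for a decoded bitmap.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Simplified model of a three-level image cache lookup.
final class ThreeLevelLookup {
    final Map<String, String> bitmapCache = new HashMap<>();  // decoded, ready to display
    final Map<String, byte[]> encodedCache = new HashMap<>(); // compressed bytes in memory
    final Map<String, byte[]> diskCache = new HashMap<>();    // compressed bytes on disk (simulated)

    // Hypothetical stand-in for decoding compressed bytes into a bitmap.
    static String decode(byte[] encoded) {
        return "bitmap(" + encoded.length + " bytes)";
    }

    // Hypothetical stand-in for a network download.
    static byte[] fetch(String uri) {
        return uri.getBytes(StandardCharsets.UTF_8);
    }

    // Loads an image and returns the name of the level that served it.
    String load(String uri) {
        if (bitmapCache.containsKey(uri)) {
            return "bitmap cache";
        }
        String source = "encoded memory cache";
        byte[] encoded = encodedCache.get(uri);
        if (encoded == null) {
            source = "disk cache";
            encoded = diskCache.get(uri);
        }
        if (encoded == null) {
            source = "network";
            encoded = fetch(uri);
            diskCache.put(uri, encoded);
        }
        // Fill the faster levels so the next lookup stops earlier.
        encodedCache.put(uri, encoded);
        bitmapCache.put(uri, decode(encoded));
        return source;
    }

    // Both memory caches are cleared in the background; the disk cache survives.
    void onBackgrounded() {
        bitmapCache.clear();
        encodedCache.clear();
    }

    public static void main(String[] args) {
        ThreeLevelLookup lookup = new ThreeLevelLookup();
        System.out.println(lookup.load("http://example.com/a.jpg")); // network
        System.out.println(lookup.load("http://example.com/a.jpg")); // bitmap cache
        lookup.onBackgrounded();
        System.out.println(lookup.load("http://example.com/a.jpg")); // disk cache
    }
}
```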

Bitmap and cache

Bitmaps need special care on Android because the system limits the memory of each app, for example to 16 MB (vendor-customized systems often allow more). Android has two common caching strategies, LruCache and DiskLruCache. The former serves as an in-memory cache and the latter as a storage (disk) cache.
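The least-recently-used idea behind both strategies can be shown in plain Java with an access-ordered `LinkedHashMap`. This is a minimal sketch, not the Android `LruCache` class: `SimpleLru` is a made-up name and it limits the number of entries only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Minimal count-based LRU cache built on an access-ordered LinkedHashMap.
final class SimpleLru<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    SimpleLru(int maxEntries) {
        super(16, 0.75f, true); // true = iterate from least to most recently accessed
        this.maxEntries = maxEntries;
    }

    // Called by LinkedHashMap after each insertion; returning true drops the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        SimpleLru<String, Integer> cache = new SimpleLru<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" becomes the most recently used entry
        cache.put("c", 3); // exceeds two entries, so the eldest ("b") is evicted
        System.out.println(cache.keySet()); // prints [a, c]
    }
}
```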

A brief reading of the LruCache source code (the listing declares package android.util; the android.support.v4 package provides an equivalent class)

package android.util;

import java.util.LinkedHashMap;
import java.util.Map;

/**
 * A cache that holds strong references to a limited number of values. Each time
 * a value is accessed, it is moved to the head of a queue. When a value is
 * added to a full cache, the value at the end of that queue is evicted and may
 * become eligible for garbage collection.
 * Note: the cache keeps strong references to a bounded number of items; each access
 * moves the item to the head of the queue, and adding to a full cache evicts the tail item.
 *
 * <p>If your cached values hold resources that need to be explicitly released,
 * override {@link #entryRemoved}.
 * Note: override entryRemoved() if a cached value must be freed explicitly.
 *
 * <p>If a cache miss should be computed on demand for the corresponding keys,
 * override {@link #create}. This simplifies the calling code, allowing it to
 * assume a value will always be returned, even when there's a cache miss.
 * Note: override create() to compute a value on a miss, so callers always get a value back.
 *
 * <p>By default, the cache size is measured in the number of entries. Override
 * {@link #sizeOf} to size the cache in different units. For example, this cache
 * is limited to 4MiB of bitmaps:
 * Note: by default the size counts entries; override sizeOf() to measure entries in other units.
 * <pre>   {@code
 *   int cacheSize = 4 * 1024 * 1024; // 4MiB
 *   LruCache<String, Bitmap> bitmapCache = new LruCache<String, Bitmap>(cacheSize) {
 *       protected int sizeOf(String key, Bitmap value) {
 *           return value.getByteCount();
 *       }
 *   }}</pre>
 *
 * <p>This class is thread-safe. Perform multiple cache operations atomically by
 * synchronizing on the cache: <pre>   {@code
 *   synchronized (cache) {
 *     if (cache.get(key) == null) {
 *         cache.put(key, value);
 *     }
 *   }}</pre>
 *
 * <p>This class does not allow null to be used as a key or value. A return
 * value of null from {@link #get}, {@link #put} or {@link #remove} is
 * unambiguous: the key was not in the cache.
 * Note: null keys and values are not allowed, so a null result from get(), put()
 * or remove() always means the key was not in the cache.
 */

public class LruCache<K, V> {
    private final LinkedHashMap<K, V> map;

    /** Size of this cache in units. Not necessarily the number of elements. */
    private int size; // current total size of the stored entries
    private int maxSize; // maximum total size allowed

    private int putCount; // number of put() calls
    private int createCount; // number of values created by create()
    private int evictionCount; // number of evicted entries
    private int hitCount; // number of cache hits
    private int missCount; // number of cache misses

    /**
     * @param maxSize for caches that do not override {@link #sizeOf}, this is
     *     the maximum number of entries in the cache. For all other caches,
     *     this is the maximum sum of the sizes of the entries in this cache.
     */
    public LruCache(int maxSize) {
        if (maxSize <= 0) {
            throw new IllegalArgumentException("maxSize <= 0");
        }
        this.maxSize = maxSize;
        this.map = new LinkedHashMap<K, V>(0, 0.75f, true);
    }

    /**
     * Returns the value for {@code key} if it exists in the cache or can be
     * created by {@code #create}. If a value was returned, it is moved to the
     * head of the queue. This returns null if a value is not cached and cannot
     * be created.
     * Note: looks the item up by key, or creates it; the item then moves to the head
     * of the queue. Returns null if the value is neither cached nor creatable.
     */
    public final V get(K key) {
        if (key == null) {
            throw new NullPointerException("key == null");
        }

        V mapValue;
        synchronized (this) {
            mapValue = map.get(key);
            if (mapValue != null) {
                hitCount++;
                return mapValue;
            }
            missCount++;
        }

        /*
         * Attempt to create a value. This may take a long time, and the map
         * may be different when create() returns. If a conflicting value was
         * added to the map while create() was working, we leave that value in
         * the map and release the created value.
         * Note: on a miss, try to create the item.
         */

        V createdValue = create(key);
        if (createdValue == null) {
            return null;
        }

        synchronized (this) {
            createCount++;
            mapValue = map.put(key, createdValue);

            if (mapValue != null) {
                // There was a conflict so undo that last put
                // (a value was already mapped to this key, so restore it)
                map.put(key, mapValue);
            } else {
                size += safeSizeOf(key, createdValue);
            }
        }

        if (mapValue != null) {
            entryRemoved(false, key, createdValue, mapValue);
            return mapValue;
        } else {
            trimToSize(maxSize);
            return createdValue;
        }
    }

    /**
     * Caches {@code value} for {@code key}. The value is moved to the head of
     * the queue.
     *
     * @return the previous value mapped by {@code key}.
     */
    public final V put(K key, V value) {
        if (key == null || value == null) {
            throw new NullPointerException("key == null || value == null");
        }

        V previous;
        synchronized (this) {
            putCount++;
            size += safeSizeOf(key, value);
            previous = map.put(key, value);
            if (previous != null) { // a value was previously mapped to this key
                size -= safeSizeOf(key, previous);
            }
        }

        if (previous != null) {
            entryRemoved(false, key, previous, value);
        }

        trimToSize(maxSize);
        return previous;
    }

    /**
     * Frees up cache space by evicting the eldest entries.
     *
     * @param maxSize the maximum size of the cache before returning. May be -1
     *     to evict even 0-sized elements.
     */
    private void trimToSize(int maxSize) {
        while (true) {
            K key;
            V value;
            synchronized (this) {
                if (size < 0 || (map.isEmpty() && size != 0)) {
                    throw new IllegalStateException(getClass().getName()
                            + ".sizeOf() is reporting inconsistent results!");
                }

                if (size <= maxSize) {
                    break;
                }

                Map.Entry<K, V> toEvict = map.eldest();
                if (toEvict == null) {
                    break;
                }

                key = toEvict.getKey();
                value = toEvict.getValue();
                map.remove(key);
                size -= safeSizeOf(key, value);
                evictionCount++;
            }

            entryRemoved(true, key, value, null);
        }
    }

    /**
     * Removes the entry for {@code key} if it exists.
     * Note: deletes the cached item for the key and returns its value.
     *
     * @return the previous value mapped by {@code key}.
     */
    public final V remove(K key) {
        if (key == null) {
            throw new NullPointerException("key == null");
        }

        V previous;
        synchronized (this) {
            previous = map.remove(key);
            if (previous != null) {
                size -= safeSizeOf(key, previous);
            }
        }

        if (previous != null) {
            entryRemoved(false, key, previous, null);
        }

        return previous;
    }

    /**
     * Called for entries that have been evicted or removed. This method is
     * invoked when a value is evicted to make space, removed by a call to
     * {@link #remove}, or replaced by a call to {@link #put}. The default
     * implementation does nothing.
     * Note: called when an item is evicted to free space, removed by remove(),
     * or replaced by put(); the default implementation does nothing.
     *
     * <p>The method is called without synchronization: other threads may
     * access the cache while this method is executing.
     *
     * @param evicted true if the entry is being removed to make space, false
     *     if the removal was caused by a {@link #put} or {@link #remove}.
     *     Note: true means evicted to free space; false means caused by put or remove.
     * @param newValue the new value for {@code key}, if it exists. If non-null,
     *     this removal was caused by a {@link #put}. Otherwise it was caused by
     *     an eviction or a {@link #remove}.
     */
    protected void entryRemoved(boolean evicted, K key, V oldValue, V newValue) {}

    /**
     * Called after a cache miss to compute a value for the corresponding key.
     * Returns the computed value or null if no value can be computed. The
     * default implementation returns null.
     * Note: called on a miss; returns the computed value, or null.
     *
     * <p>The method is called without synchronization: other threads may
     * access the cache while this method is executing.
     *
     * <p>If a value for {@code key} exists in the cache when this method
     * returns, the created value will be released with {@link #entryRemoved}
     * and discarded. This can occur when multiple threads request the same key
     * at the same time (causing multiple values to be created), or when one
     * thread calls {@link #put} while another is creating a value for the same
     * key.
     */
    protected V create(K key) {
        return null;
    }

    private int safeSizeOf(K key, V value) {
        int result = sizeOf(key, value);
        if (result < 0) {
            throw new IllegalStateException("Negative size: " + key + "=" + value);
        }
        return result;
    }

    /**
     * Returns the size of the entry for {@code key} and {@code value} in
     * user-defined units.  The default implementation returns 1 so that size
     * is the number of entries and max size is the maximum number of entries.
     * Note: returns the entry size in user-defined units; the default of 1 means
     * size counts entries and max size is the maximum number of entries.
     *
     * <p>An entry's size must not change while it is in the cache.
     */
    protected int sizeOf(K key, V value) {
        return 1;
    }

    /**
     * Clear the cache, calling {@link #entryRemoved} on each removed entry.
     * Note: empties the cache.
     */
    public final void evictAll() {
        trimToSize(-1); // -1 will evict 0-sized elements
    }

    /**
     * For caches that do not override {@link #sizeOf}, this returns the number
     * of entries in the cache. For all other caches, this returns the sum of
     * the sizes of the entries in this cache.
     */
    public synchronized final int size() {
        return size;
    }

    /**
     * For caches that do not override {@link #sizeOf}, this returns the maximum
     * number of entries in the cache. For all other caches, this returns the
     * maximum sum of the sizes of the entries in this cache.
     */
    public synchronized final int maxSize() {
        return maxSize;
    }

    /**
     * Returns the number of times {@link #get} returned a value that was
     * already present in the cache.
     */
    public synchronized final int hitCount() {
        return hitCount;
    }

    /**
     * Returns the number of times {@link #get} returned null or required a new
     * value to be created.
     */
    public synchronized final int missCount() {
        return missCount;
    }

    /**
     * Returns the number of times {@link #create(Object)} returned a value.
     */
    public synchronized final int createCount() {
        return createCount;
    }

    /**
     * Returns the number of times {@link #put} was called.
     */
    public synchronized final int putCount() {
        return putCount;
    }

    /**
     * Returns the number of values that have been evicted.
     * Note: this is the number of reclaimed entries.
     */
    public synchronized final int evictionCount() {
        return evictionCount;
    }

    /**
     * Returns a copy of the current contents of the cache, ordered from least
     * recently accessed to most recently accessed.
     * Note: the copy runs from the least recently accessed entry to the most recent one.
     */
    public synchronized final Map<K, V> snapshot() {
        return new LinkedHashMap<K, V>(map);
    }

    @Override public synchronized final String toString() {
        int accesses = hitCount + missCount;
        int hitPercent = accesses != 0 ? (100 * hitCount / accesses) : 0;
        return String.format("LruCache[maxSize=%d,hits=%d,misses=%d,hitRate=%d%%]",
                maxSize, hitCount, missCount, hitPercent);
    }
}
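To see how sizeOf and trimToSize work together, here is a reduced sketch that runs on a plain JVM. It is not the Android class: `WeightedLru` is a made-up name, the size function is passed in as a lambda instead of being overridden, and because `map.eldest()` is not part of the public `java.util.LinkedHashMap` API, the sketch reads the eldest entry from the front of the entry-set iterator.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.ToIntFunction;

// Reduced, size-weighted LRU cache modelled on the put/trimToSize logic above.
final class WeightedLru<K, V> {
    private final LinkedHashMap<K, V> map = new LinkedHashMap<>(0, 0.75f, true);
    private final ToIntFunction<V> sizeOf; // plays the role of the sizeOf() override
    private final int maxSize;
    private int size;                      // sum of sizeOf(value) over all entries

    WeightedLru(int maxSize, ToIntFunction<V> sizeOf) {
        this.maxSize = maxSize;
        this.sizeOf = sizeOf;
    }

    synchronized V get(K key) {
        return map.get(key); // access order: a hit makes this entry the most recent
    }

    synchronized void put(K key, V value) {
        size += sizeOf.applyAsInt(value);
        V previous = map.put(key, value);
        if (previous != null) {
            size -= sizeOf.applyAsInt(previous); // replaced value no longer counts
        }
        trimToSize(maxSize);
    }

    // Evicts the eldest entries until the total size fits within the limit.
    private void trimToSize(int limit) {
        Iterator<Map.Entry<K, V>> it = map.entrySet().iterator();
        while (size > limit && it.hasNext()) {
            Map.Entry<K, V> eldest = it.next(); // least recently accessed entry
            size -= sizeOf.applyAsInt(eldest.getValue());
            it.remove();
        }
    }

    synchronized int size() { return size; }

    synchronized boolean contains(K key) { return map.containsKey(key); }

    public static void main(String[] args) {
        // Limit of 10 bytes; each entry weighs as many bytes as its array holds.
        WeightedLru<String, byte[]> cache = new WeightedLru<String, byte[]>(10, v -> v.length);
        cache.put("a", new byte[4]);
        cache.put("b", new byte[4]);
        cache.get("a");              // "b" is now the least recently used entry
        cache.put("c", new byte[4]); // total would be 12, so "b" is evicted
        System.out.println(cache.contains("b") + " " + cache.size()); // prints false 8
    }
}
```

Measuring in bytes instead of entry counts is what makes a fixed memory budget for bitmaps possible, since bitmaps vary widely in size.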