After setting up the Hadoop cluster, running the hadoop fs -put command on one node failed with the following port error:

    location:org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1495)
    throwable:java.io.IOException: Got error, status message , ack with firstBadLink as ip:port
        at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:142)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1482)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1385)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)
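
The address after firstBadLink names the DataNode in the write pipeline that could not be connected to, so it tells you which node and port to investigate. A minimal sketch for pulling it out of a client log, assuming the log is available as text; the function name bad_link_from_log is hypothetical:

```bash
#!/usr/bin/env bash
# Extract the HOST:PORT that follows "firstBadLink as" from log text on stdin.
# Prints each distinct address once; prints nothing if the pattern is absent.
bad_link_from_log() {
  sed -n 's/.*firstBadLink as \([^[:space:]]*\).*/\1/p' | sort -u
}
```

For example, bad_link_from_log < client.log, where client.log is a placeholder for wherever your client output was saved.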

First, check whether the firewall is stopped on all three nodes:

    firewall-cmd --state

If it reports "running", stop it:

    systemctl stop firewalld.service

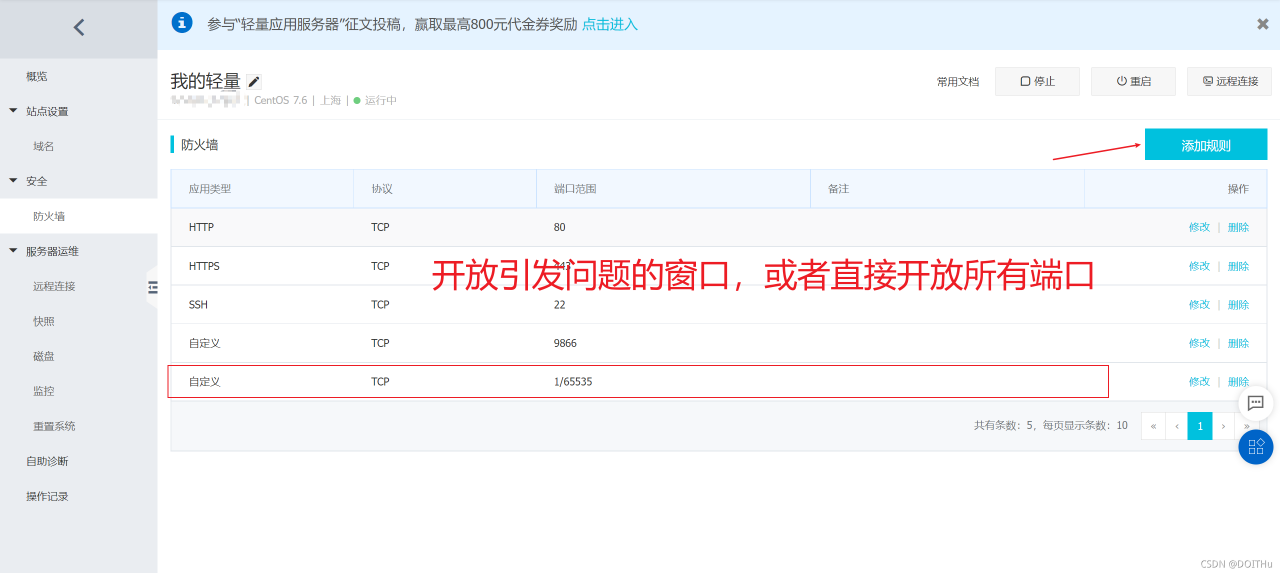
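
Checking the three nodes one by one works, but the same check can be run from a single machine. A sketch, assuming passwordless SSH between the nodes (the usual Hadoop setup) and that firewalld is the firewall in use; check_firewalls and any hostnames you pass to it are hypothetical:

```bash
#!/usr/bin/env bash
# Report the firewalld state of every node given as an argument, over SSH.
# Assumes passwordless SSH to each node.
check_firewalls() {
  local node state
  for node in "$@"; do
    # -n: keep ssh from consuming this script's stdin.
    # 2>&1: "not running" may be printed on stderr, so capture both streams.
    # || true: firewall-cmd may exit non-zero when firewalld is not running;
    # that is a valid answer here, not a script failure.
    state=$(ssh -n "$node" firewall-cmd --state 2>&1) || true
    echo "$node: $state"
  done
}
```

Run it as check_firewalls node1 node2 node3, substituting your own hostnames; any node that still reports "running" needs systemctl stop firewalld.service.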

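If the error persists after the firewall is stopped, the cloud server (ECS) ports may not be open to the public. The port that matters is the one shown after firstBadLink in the error: it is the data transfer port of the DataNode that could not be reached (dfs.datanode.address, 50010 by default in Hadoop 2.x and 9866 in Hadoop 3.x). Taking an Alibaba Cloud server as an example (Tencent Cloud opens all ports by default), add an inbound rule allowing that port in the instance's security group.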

After adding the rule, running the hadoop fs -put command again succeeded.
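
To confirm a port is reachable before retrying the upload, a small probe can help. A sketch, assuming bash (for its /dev/tcp redirection) and the coreutils timeout command; port_open is a hypothetical helper, and the configured port can be read with hdfs getconf -confKey dfs.datanode.address. Because firstBadLink can name a DataNode that another DataNode failed to reach, run the probe from the other cluster nodes as well as from the client.

```bash
#!/usr/bin/env bash
# Return 0 if a TCP connection to HOST:PORT opens within SECS seconds
# (default 3), non-zero otherwise. Uses bash's /dev/tcp redirection.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # Host and port are passed as positional args to avoid quoting issues.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example, port_open 192.168.1.12 50010 && echo reachable || echo blocked, replacing the placeholder address with the one from your own error message.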
