After setting up a Hadoop cluster, running the hadoop fs -put command on one node fails with the following port-related error:

location:org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1495)

throwable:java.io.IOException: Got error, status message , ack with firstBadLink as ip:port

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:142)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1482)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1385)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)

First, check whether the firewall is stopped on all three nodes:

firewall-cmd --state

If it is still running, stop it with:

systemctl stop firewalld.service

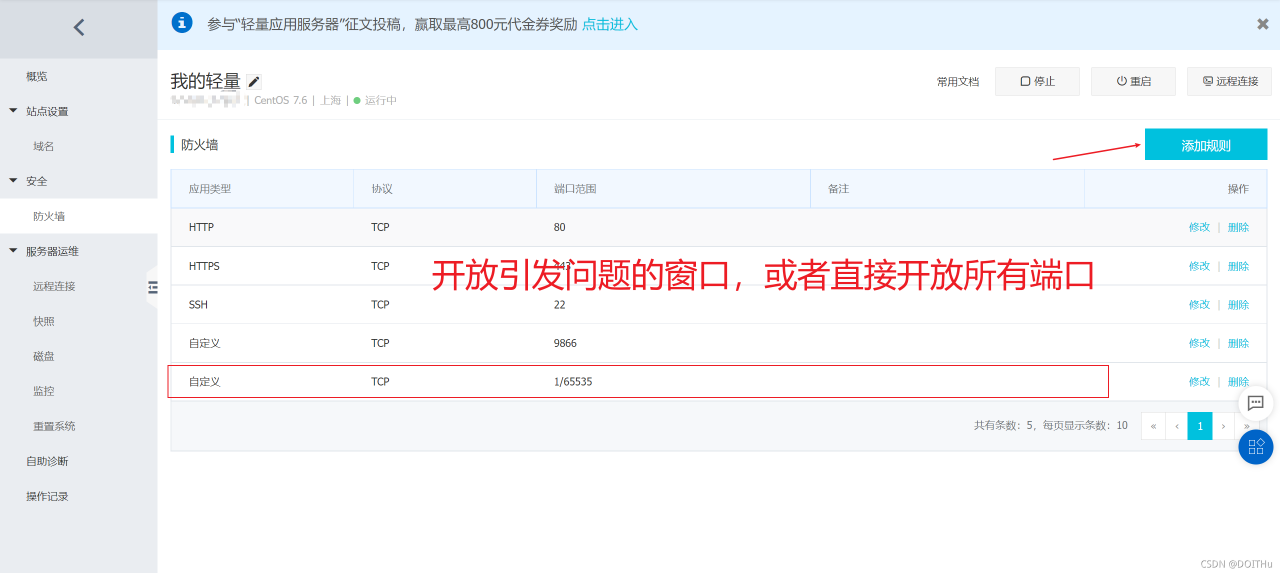
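The check above has to be repeated on every node. A minimal sketch of doing that in one pass is shown below. It assumes passwordless SSH between the nodes, and the host names node1, node2 and node3 are placeholders, not names from this cluster.

```shell
# Hypothetical host names; replace them with the real nodes of the cluster.
NODES="node1 node2 node3"

# Run a command on a remote node (assumes passwordless SSH is configured).
run_on() {
  node=$1
  shift
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" "$@"
}

# Print one "node: state" line per node, where state is the output of
# firewall-cmd --state ("running" or "not running").
firewall_report() {
  for node in $NODES; do
    state=$(run_on "$node" firewall-cmd --state 2>&1)
    echo "$node: $state"
  done
}

# Usage:
#   firewall_report
```

Note that systemctl stop only lasts until the next reboot. Running systemctl disable firewalld.service as well keeps the firewall from starting again at boot.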

If the error persists after the firewall is stopped, the ports of the cloud server (ECS) may not be open to external access. Take an Alibaba Cloud server as an example (Tencent Cloud opens all ports by default):

After the ports are opened, run the command again and it should succeed.
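To confirm that the address reported as firstBadLink is actually reachable, a quick check from the node that ran the put command can help. The sketch below is only one way to do it. The helper names bad_link and check_port and the log file name put-error.log are made up for illustration, and it assumes a netcat (nc) that supports the -z and -w options is installed.

```shell
# Extract the ip:port that follows "firstBadLink as " from log text on stdin.
bad_link() {
  sed -n 's/.*firstBadLink as \([^ ]*\).*/\1/p'
}

# Try a TCP connection to an "ip:port" argument with a 3 second timeout.
# Assumes nc supports -z (connect only, send no data) and -w (timeout).
check_port() {
  host=${1%:*}
  port=${1##*:}
  if nc -z -w 3 "$host" "$port"; then
    echo "$1 reachable"
  else
    echo "$1 NOT reachable"
  fi
}

# Usage (put-error.log is a hypothetical file holding the stack trace):
#   check_port "$(bad_link < put-error.log)"
```

If the port is reported as not reachable while the firewall is stopped on every node, the block is most likely on the cloud provider side.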