Solution:

brew install chromedriver

Project
├── dir1
│   ├── __init__.py
│   ├── module1.py
│   └── module1_2.py
├── dir2
│   └── module2.py
├── file_a.py
└── main.py

1. In main.py, using from . import file_a raises: ImportError: attempted relative import with no known parent package

The error means that a relative import was attempted, but Python could not determine the parent package. It usually happens when a relative import is used in a .py file that is run directly as a script. Python resolves a relative import from the parent package of the current module, which it reads from the module's __name__ and __package__ attributes. A file that is run directly as the application entry point contains:

if __name__ == '__main__':

This marks the file as the main entry point. In that case the module's __name__ attribute is '__main__' and its __package__ attribute is None. If a relative import is used in such a file, the interpreter has no information about a parent package, so it raises an error.

In other words, Python does not treat the directory of the script being executed as a package.

To confirm this, add the following line to the file that contains the import:

print('__file__={0:<35} | __name__={1:<20} | __package__={2:<20}'.format(__file__, __name__, str(__package__)))

The output shows __name__ = __main__ and __package__ = None. The interpreter has no information about the package the module belongs to, so it raises the "no known parent package" exception.

Solution:

Import directly from the module with an absolute import:

from file_a import xxx
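Both the failure and the fix can be reproduced with a minimal sketch. It assumes file_a.py defines a name xxx (a hypothetical placeholder for whatever you actually import), writes the two top-level files into a temporary Project directory, and runs main.py directly as a script, once with the relative import and once with the absolute one.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_main(import_line: str, expr: str) -> subprocess.CompletedProcess:
    """Create Project/file_a.py and Project/main.py, then run main.py as a script."""
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "Project"
        project.mkdir()
        # Hypothetical content: file_a.py exposes a single name `xxx`.
        (project / "file_a.py").write_text("xxx = 42\n")
        (project / "main.py").write_text(f"{import_line}\nprint({expr})\n")
        # `python main.py` gives main.py __name__ == '__main__' and
        # __package__ == None, so a relative import has no parent package.
        return subprocess.run(
            [sys.executable, "main.py"],
            cwd=project,
            capture_output=True,
            text=True,
        )


# Relative import in the entry script: fails with ImportError.
bad = run_main("from . import file_a", "file_a.xxx")
print(bad.stderr.strip().splitlines()[-1])

# Absolute import: works, because the script's own directory is on sys.path.
good = run_main("from file_a import xxx", "xxx")
print(good.stdout.strip())
```

The absolute import succeeds because Python puts the directory of the script being run at the front of sys.path, so file_a is found as a top-level module.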

2. In module1.py, using from .. import file_a raises: ImportError: attempted relative import beyond top-level package

The cause is the same as above: the project directory is not a package. Python does not treat the directory of the entry script as a package, so when main.py imports dir1.module1, dir1 is the top-level package and a relative import from module1.py cannot go above it.

Solution:

To import the contents of file_a.py in module1.py, use an absolute import:

from file_a import xxx

To import module1_2.py in module1.py, a relative import works, because both modules are inside the package dir1:

from . import module1_2 as module12
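The second case can be reproduced the same way. This sketch rebuilds the Project/ layout from the top of this section in a temporary directory, with hypothetical contents for file_a.py and module1_2.py, lets main.py import dir1.module1, and runs main.py directly. It compares the failing from .. import with the two working imports.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_project(module1_source: str) -> subprocess.CompletedProcess:
    """Build Project/ with dir1/ as a package and run main.py, which imports dir1.module1."""
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "Project"
        dir1 = project / "dir1"
        dir1.mkdir(parents=True)
        (dir1 / "__init__.py").write_text("")
        # Hypothetical module contents used only for this demonstration.
        (dir1 / "module1_2.py").write_text("value = 'from module1_2'\n")
        (dir1 / "module1.py").write_text(module1_source)
        (project / "file_a.py").write_text("xxx = 42\n")
        (project / "main.py").write_text("import dir1.module1\n")
        return subprocess.run(
            [sys.executable, "main.py"],
            cwd=project,
            capture_output=True,
            text=True,
        )


# module1.py tries to climb above dir1, but Project/ itself is not a package.
bad = run_project("from .. import file_a\n")

# module1.py imports file_a absolutely and its sibling module1_2 relatively.
good = run_project(
    "from file_a import xxx\n"
    "from . import module1_2 as module12\n"
    "print(xxx, module12.value)\n"
)

print(bad.stderr.strip().splitlines()[-1])
print(good.stdout.strip())
```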

Project scenario:

A PyTorch model is converted to an ONNX model. The export succeeds, but loading the model with onnxruntime fails with the following error:

InvalidGraph: [ONNXRuntimeError] : 10 : INVALID_GRAPH : Load model from T.onnx failed:This is an invalid model. Type Error: Type 'tensor(bool)' of input parameter (8) of operator (ScatterND) in node (ScatterND_15) is invalid.

Problem Description:

import torch
import torch.nn as nn
import onnxruntime
from torch.onnx import export

class Preprocess(nn.Module):
    def __init__(self):
        super().__init__()
        self.max = 1000
        self.min = -44

    def forward(self, inputs):
        inputs[inputs > self.max] = self.max
        inputs[inputs < self.min] = self.min
        return inputs

x = torch.randint(-1024, 3071, (1, 1, 28, 28))
model = Preprocess()
model.eval()
export(
    model,
    x,
    "test.onnx",
    input_names=["input"],
    output_names=["output"],
    opset_version=11,
)
session = onnxruntime.InferenceSession("test.onnx")

Cause analysis:

The same problem is reported in the PyTorch GitHub repository, issue #34054.

Solution:

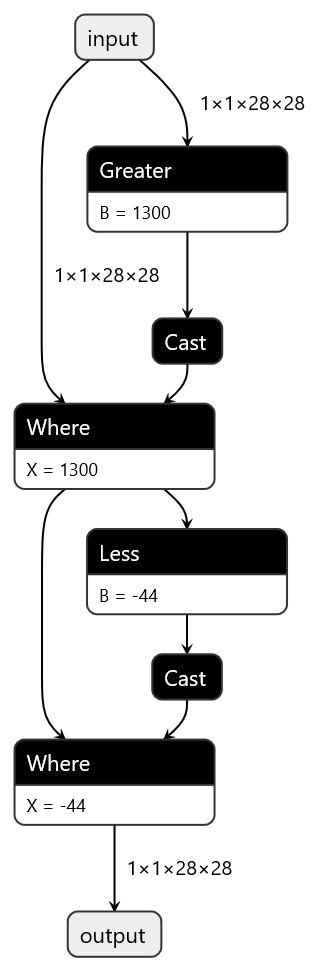

Instead of assigning values to the input tensor through index assignment, first generate a boolean mask and then apply torch.masked_fill():

class MaskHigh(nn.Module):
    def __init__(self, val):
        super().__init__()
        self.val = val

    def forward(self, inputs):
        x = inputs.clone()
        mask = x > self.val
        output = torch.masked_fill(inputs, mask, self.val)
        return output

class MaskLow(nn.Module):
    def __init__(self, val):
        super().__init__()
        self.val = val

    def forward(self, inputs):
        x = inputs.clone()
        mask = x < self.val
        output = torch.masked_fill(inputs, mask, self.val)
        return output

class Clip(nn.Module):
    def __init__(self):
        super().__init__()
        self.high = MaskHigh(1300)
        self.low = MaskLow(-44)

    def forward(self, inputs):
        output = self.high(inputs)
        output = self.low(output)
        return output

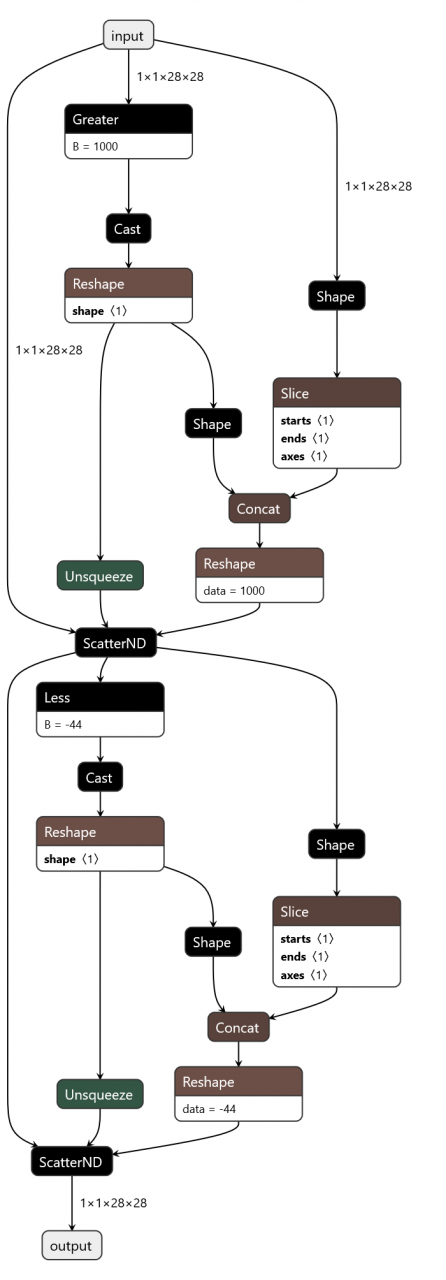

Netron can be used to visualize the computation graphs produced by the two approaches.

Index assignment

If import * is underlined with a red wavy line in the IDE but no error is raised when the code runs, and holding Ctrl while clicking a name does not jump to the function definition, check whether the file contains something like:

import sys

sys.path.append("../../..")

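If so, the imported module is only located at runtime through the modified sys.path, which the IDE's static analysis does not follow, so you have to look up the function's source manually.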
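A minimal sketch of why the editor cannot resolve the import: a module placed outside the normal search path only becomes importable after sys.path is modified at runtime. The module name demo_helpers_mod is hypothetical, and a temporary directory stands in for "../../..".

```python
import importlib
import sys
import tempfile
from pathlib import Path

MODULE_NAME = "demo_helpers_mod"  # hypothetical module name


def import_before_and_after_append():
    """Try importing a module before and after adding its directory to sys.path."""
    with tempfile.TemporaryDirectory() as tmp:
        # Stand-in for a module that lives in "../../.." relative to the script.
        Path(tmp, MODULE_NAME + ".py").write_text(
            "def add(a, b):\n    return a + b\n"
        )

        try:
            importlib.import_module(MODULE_NAME)
            found_before = True
        except ModuleNotFoundError:
            found_before = False

        # Equivalent of sys.path.append("../../.."): the directory is only
        # searched at runtime, which an IDE's static analysis does not follow.
        sys.path.append(tmp)
        importlib.invalidate_caches()
        try:
            module = importlib.import_module(MODULE_NAME)
            return found_before, module.add(2, 3)
        finally:
            # Undo the changes so the demo leaves no trace behind.
            sys.path.remove(tmp)
            sys.modules.pop(MODULE_NAME, None)


print(import_before_and_after_append())
```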

The following error occurs when installing GPy with pip:

ERROR: Could not find a version that satisfies the requirement scipy<1.5.0,>=1.3.0 (from GPy) (from versions: 0.8.0, 0.9.0, 0.10.0, 0.10.1, 0.11.0, 0.12.0, 0.12.1, 0.13.0, 0.13.1, 0.13.2, 0.13.3, 0.14.0, 0.14.1, 0.15.0, 0.15.1, 0.16.0, 0.16.1, 0.17.0, 0.17.1, 0.18.0, 0.18.1, 0.19.0, 0.19.1, 1.0.0b1, 1.0.0rc1, 1.0.0rc2, 1.0.0, 1.0.1, 1.1.0rc1, 1.1.0, 1.2.0rc1, 1.2.0rc2, 1.2.0, 1.2.1, 1.2.2, 1.2.3)

ERROR: No matching distribution found for scipy<1.5.0,>=1.3.0 (from GPy)

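Solution: the listed versions stop at SciPy 1.2.3, and the 1.2.x series was the last to support Python 2.7, so the pip being used most likely belongs to a Python 2 installation. Use pip3 instead: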

pip3 install GPy

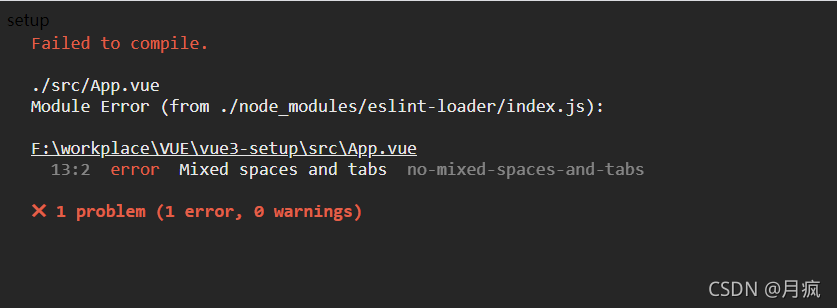

"extensions": "eslint: Recommended" the attribute in the configuration file enables this rule.

Most code conventions require tabs or spaces for indentation. Therefore, if a single line code is indented with tabs and spaces, an error usually occurs.

This rule does not allow indenting with mixed spaces and tabs.
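To make the rule's behaviour concrete, here is a simplified Python re-implementation of the check, including the "smart-tabs" option, which permits spaces after tabs when they are used for alignment. It is only a sketch based on the description and examples in this section, not ESLint's source; the real rule works on parsed JavaScript and has extra handling this version omits. The function name mixed_indent_lines is hypothetical.

```python
import re

# Default mode: flag leading whitespace where spaces are followed by a tab
# or tabs are followed by a space.
MIXED = re.compile(r"^(?: +\t|\t+ )")
# "smart-tabs" mode: tabs followed by spaces (alignment) are allowed,
# but spaces followed by a tab are still flagged.
MIXED_SMART = re.compile(r"^\t* +\t")


def mixed_indent_lines(source: str, smart_tabs: bool = False) -> list:
    """Return the 1-based line numbers whose indentation mixes tabs and spaces."""
    pattern = MIXED_SMART if smart_tabs else MIXED
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if pattern.match(line)
    ]


# Same shape as the main() example: a tab, then spaces to align `y`.
code = "function main() {\n\tvar x = 5,\n\t    y = 7;\n}\n"
print(mixed_indent_lines(code))
print(mixed_indent_lines(code, smart_tabs=True))
```

Line 3 is reported in the default mode and accepted with smart_tabs=True, which mirrors how the main() example is incorrect under "error" but correct under ["error", "smart-tabs"].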

Examples of incorrect code for this rule:

/*eslint no-mixed-spaces-and-tabs: "error"*/

function add(x, y) {
// --->..return x + y;

      return x + y;
}

function main() {
// --->var x = 5,
// --->....y = 7;

    var x = 5,
        y = 7;
}

Examples of correct code for this rule:

/*eslint no-mixed-spaces-and-tabs: "error"*/

function add(x, y) {
// --->return x + y;

    return x + y;
}

This rule has a string option:

"smart-tabs" allows mixing tabs and spaces when the spaces are used for alignment.

smart-tabs

Examples of correct code for this rule with the "smart-tabs" option:

/*eslint no-mixed-spaces-and-tabs: ["error", "smart-tabs"]*/

function main() {
// --->var x = 5,
// --->....y = 7;

    var x = 5,
        y = 7;
}

Add this line to the file:

/*eslint no-mixed-spaces-and-tabs: ["error", "smart-tabs"]*/

<template>
  <comp-setup>
  </comp-setup>
</template>

<script>
/*eslint no-mixed-spaces-and-tabs: ["error", "smart-tabs"]*/
import CompSetup from './components/setupview'

export default {
  name: 'App',
  components: {
    CompSetup,
  }
}
</script>

<style>
</style>

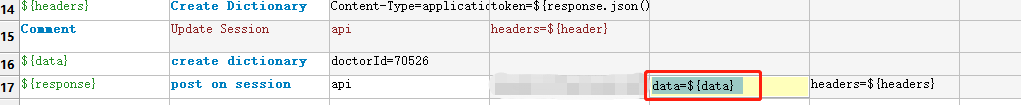

In a POST request with the header

'Content-Type': 'application/json; charset=UTF-8'

the parameters were passed with data=${data}, which resulted in the error:

"error": "internal server error", "message": "syntax error, expect


Solution:

After changing data=${data} to json=${data}, the request succeeds.

PS: in a POST request, the body parameters must be passed in the form that matches the Content-Type.
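The PS can be illustrated with the standard library. A server that receives Content-Type: application/json parses the body as JSON, so a form-encoded body fails with a syntax error. For example, Python's requests library form-encodes a dict passed as data= and serializes one passed as json= to JSON. The sketch below uses a hypothetical payload, builds both encodings, and checks which one a JSON parser accepts; server_parses_json is a stand-in for the server, not a real API.

```python
import json
from urllib.parse import urlencode

# Hypothetical payload for illustration.
payload = {"name": "alice", "age": 30}

# Form encoding: what a data=-style parameter typically sends for a dict.
form_body = urlencode(payload)
# JSON encoding: what a server expecting application/json can parse.
json_body = json.dumps(payload)


def server_parses_json(body: str) -> bool:
    """Mimic a JSON-only server: True if the body is valid JSON."""
    try:
        json.loads(body)
        return True
    except json.JSONDecodeError:
        return False


print(form_body, server_parses_json(form_body))
print(json_body, server_parses_json(json_body))
```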

When the source code sets the following Matplotlib parameters (to display Chinese characters and minus signs correctly), the program packaged with PyInstaller fails with NameError: name 'defaultParams' is not defined

plt.rcParams['font.sans-serif'] = ['STSong']

plt.rcParams['axes.unicode_minus'] = False

Solution:

Downgrade Matplotlib to version 3.2.2:

conda install matplotlib==3.2.2

Note: this was with PyInstaller 3.6 and Python 3.6.13.

Traceback (most recent call last):
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/__init__.py", line 20, in init
    eng = _activeEngines[driverName]
  File "/usr/lib/python3.8/weakref.py", line 131, in __getitem__
    o = self.data[key]()
KeyError: None

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "mqttToTts.py", line 23, in <module>
    tts = Tts()
  File "mqttToTts.py", line 7, in __init__
    self.engine = pyttsx3.init()
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/__init__.py", line 22, in init
    eng = Engine(driverName, debug)
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/engine.py", line 30, in __init__
    self.proxy = driver.DriverProxy(weakref.proxy(self), driverName, debug)
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/driver.py", line 50, in __init__
    self._module = importlib.import_module(name)
  File "/usr/lib/python3.8/importlib/__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "<frozen importlib._bootstrap>", line 1014, in _gcd_import
  File "<frozen importlib._bootstrap>", line 991, in _find_and_load
  File "<frozen importlib._bootstrap>", line 975, in _find_and_load_unlocked
  File "<frozen importlib._bootstrap>", line 671, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 783, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/drivers/espeak.py", line 9, in <module>
    from . import _espeak, toUtf8, fromUtf8
  File "/home/summergao/.local/lib/python3.8/site-packages/pyttsx3/drivers/_espeak.py", line 18, in <module>
    dll = cdll.LoadLibrary('libespeak.so.1')
  File "/usr/lib/python3.8/ctypes/__init__.py", line 451, in LoadLibrary
    return self._dlltype(name)
  File "/usr/lib/python3.8/ctypes/__init__.py", line 373, in __init__
    self._handle = _dlopen(self._name, mode)
OSError: libespeak.so.1: cannot open shared object file: No such file or directory

When using Python 3 for text-to-speech, I installed pyttsx3, and running the program produced the error above.

The cause is that pyttsx3's espeak driver loads the eSpeak speech engine library (libespeak.so.1), which is not installed together with pyttsx3 and has to be installed on the system separately.
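Before calling pyttsx3.init(), you can check whether that library is present. A minimal sketch that repeats the same ctypes call the espeak driver makes in the traceback, cdll.LoadLibrary('libespeak.so.1'); the function name espeak_available is hypothetical:

```python
import ctypes


def espeak_available() -> bool:
    """Return True if the eSpeak shared library can be loaded."""
    # The pyttsx3 espeak driver loads this exact name (see the traceback above).
    try:
        ctypes.cdll.LoadLibrary("libespeak.so.1")
        return True
    except OSError:
        return False


if espeak_available():
    print("libespeak.so.1 found")
else:
    print("libespeak.so.1 missing: install espeak before using pyttsx3")
```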

Installation command:

sudo apt-get update && sudo apt-get install espeak

Error:

SyntaxError: 'break' outside loop

break can only be used inside a while or for loop. Using it in an if statement that is not inside a loop raises SyntaxError: 'break' outside loop. Even when the if statement is meant to be nested inside a while or for loop, the same error is reported if the indentation does not actually place it in the loop body.
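The check happens at compile time, so it can be demonstrated with the built-in compile() without running anything. In this sketch the helper name compiles is hypothetical; it returns None when the source compiles and the SyntaxError message otherwise.

```python
def compiles(source: str):
    """Return None if the source compiles, else the SyntaxError message."""
    try:
        compile(source, "<example>", "exec")
        return None
    except SyntaxError as exc:
        return exc.msg


# break inside an if that is not inside any loop: rejected.
outside = "found = True\nif found:\n    break\n"

# The same if, indented into the body of a for loop: accepted.
inside = "for item in range(3):\n    if item == 1:\n        break\n"

# The if is written at the loop's level instead of inside its body: rejected.
dedented = "for item in range(3):\n    pass\nif item == 1:\n    break\n"

for name, src in [("outside", outside), ("inside", inside), ("dedented", dedented)]:
    print(name, compiles(src))
```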

Wrong:

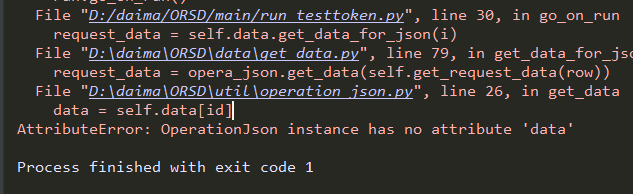

#coding:utf-8

import json

class OperetionJson:

def __init__(self,file_path=None):

if file_path == None:

self.file_path = '../dataconfig/user.json'

else:

self.file_path = file_path

self.data = self.read_data();

Right:

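```python
#coding:utf-8
import json


class OperetionJson:
    def __init__(self, file_path=None):
        if file_path is None:
            self.file_path = '../dataconfig/user.json'
        else:
            self.file_path = file_path
        self.data = self.read_data()

    def read_data(self):
        # Assumed helper (not shown in the original): load the JSON file.
        with open(self.file_path, encoding='utf-8') as fp:
            return json.load(fp)
```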

YOLOX model conversion fails with: [TensorRT] ERROR: runtime.cpp (25) - Cuda Error in allocate: 2 (out of memory)

Configuration:

Ubuntu 18.04

PyTorch 1.9.0

CUDA 10.0

cuDNN 7.5.0

TensorRT 5.1.5

torch2trt 0.3.0

Solution:

Reduce max_workspace_size in trt.py.
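As a rough guide, max_workspace_size is the upper limit, in bytes, of scratch GPU memory the TensorRT builder may use, and it is commonly written as a power of two such as 1 << 30. Which line or command-line option sets it depends on your copy of trt.py, so check the file itself. The helper below, whose name is hypothetical, converts a shift value to GiB so you can choose a smaller one:

```python
def workspace_gib(shift: int) -> float:
    """Size of a (1 << shift)-byte workspace, expressed in GiB."""
    return (1 << shift) / 2**30


for shift in (32, 30, 28):
    print(f"max_workspace_size = 1 << {shift} -> {workspace_gib(shift):g} GiB")
```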