nginx: [error] invalid PID number "" in "/run/nginx.pid"

Solution:

First start nginx with an explicit configuration file:

nginx -c /etc/nginx/nginx.conf

The path to nginx.conf is shown in the output of nginx -t.

Then reload:

nginx -s reload
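Before applying the fix, it can help to confirm that the PID file really is empty. The following is a minimal sketch, not part of the original fix: the helper name pid_file_state is hypothetical, and the path in the usage comment is simply the one from the error message.

```shell
#!/bin/sh
# Classify the contents of an nginx PID file.
# Prints one of: missing, empty, invalid, ok.
# pid_file_state is a hypothetical helper name used for illustration.
pid_file_state() {
    pid_file=$1
    if [ ! -f "$pid_file" ]; then
        echo missing
        return
    fi
    # Strip all whitespace, including the trailing newline.
    pid=$(tr -d '[:space:]' < "$pid_file")
    case $pid in
        '')        echo empty ;;    # the case behind: invalid PID number ""
        *[!0-9]*)  echo invalid ;;  # contains a non-digit character
        *)         echo ok ;;
    esac
}

# Usage (path taken from the error message):
#   pid_file_state /run/nginx.pid
```

If the result is empty or missing, nginx -s reload has no master process to signal, which is why nginx is started with nginx -c first.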

1. Check whether the client_max_body_size parameter is too small:

client_max_body_size 100m;

2. Check whether the proxied address is reachable.

3. Check whether the firewall on the proxy server allows the connection.

The cause:

During testing, the process on the RTMP port was killed with kill -9 PID (RTMP :1935).

Starting nginx afterwards with ./nginx -c /usr/local/nginx/conf/nginx.conf

produced the above error.

The resolution process is as follows:

First use lsof -i :80 to see which program is using port 80. lsof returns the following:

[root@localhost sbin]# lsof -i :80

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

nginx 63170 root 9u IPv4 14243472 0t0 TCP *:http (LISTEN)

Kill the process: kill -9 63170

Restart nginx: [root@localhost sbin]# ./nginx -c /usr/local/nginx/conf/nginx.conf

Problem solved.
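The lookup step can be scripted. The sketch below is only an illustration: it extracts the PIDs of listening processes from lsof -i output, and the function name listening_pids is hypothetical.

```shell
#!/bin/sh
# Print the PIDs of processes in LISTEN state, reading `lsof -i` output on stdin.
# listening_pids is a hypothetical helper name used for illustration.
listening_pids() {
    # Skip the header line; the PID is the second column.
    awk 'NR > 1 && /\(LISTEN\)/ { print $2 }' | sort -u
}

# Usage:
#   lsof -i :80 | listening_pids
```

A plain kill PID (SIGTERM) can be tried before kill -9, since it gives the process a chance to shut down cleanly.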

recv() failed (104: Connection reset by peer) troubleshooting

In a recent project, Nginx reverse-proxied a Node.js service (built with the NestJS framework). During stress testing, a small share of requests, around 0.005%, returned 502 Bad Gateway. The error in the log was recv() failed (104: Connection reset by peer) while reading response header from upstream. After some research, we learned that the direct cause was:

The Node.js service had already closed the connection, but Nginx was not notified and kept sending and receiving data on that connection, which ultimately caused the error.

In Nginx we had enabled keep-alive (long-lived) connections to the Node.js server, namely:

proxy_http_version 1.1;

proxy_set_header Connection "";

So we started with the settings related to keep-alive connections. The common Nginx parameters that affect them are keepalive_timeout, keepalive_requests, and keepalive:

1) keepalive_timeout: sets the timeout for client keep-alive connections. If the client sends no request within this time, Nginx actively closes the connection. The Nginx default is keepalive_timeout 75s;. Some browsers hold a connection for at most 60 seconds, so we usually set it to 60s. Setting it to 0 disables keep-alive connections.

2) keepalive_requests: sets the maximum number of requests that one client keep-alive connection can serve. When this value is exceeded, Nginx actively closes the connection. The default value is 100 (1000 since nginx 1.19.10). Under normal circumstances, keepalive_requests 100 is enough. Under high QPS, however, connections keep reaching the request limit and being closed, so Nginx has to keep creating new keep-alive connections to handle the requests.

3) keepalive: sets the maximum number of idle keep-alive connections to the upstream servers. When the number of idle connections exceeds this value, the least recently used connection is closed. If the value is too small and the request volume or request processing time fluctuates, connections may be closed and re-created continually. We usually set it to 1024. In special scenarios, the value can be estimated from the interface's average response time and QPS.

4) Enable keep-alive connections to the upstream server: by default, Nginx talks to the backend over short HTTP/1.0 connections. A connection is opened for each request, closed after processing, and opened again for the next request. HTTP has supported keep-alive connections by default since version 1.1, so we set the following parameters in location to enable them:

http {
    server {
        location / {
            proxy_http_version 1.1;
            proxy_set_header Connection "";
        }
    }
}

1) and 2) configure keep-alive connections between the client and Nginx; 3) and 4) configure keep-alive connections between Nginx and the upstream server.

After checking the above Nginx parameters, we looked for keep-alive settings in Node. The Node.js documentation shows that server.keepAliveTimeout defaults to 5000 ms. If Nginx keeps sending and receiving data on a connection that Node has already closed after this idle period, the above error can occur.

So we increased Node's keepAliveTimeout value (see https://shuheikagawa.com/blog/2019/04/25/keep-alive-timeout/):
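The text says the upstream keepalive value can be estimated from average response time and QPS, but it does not give a formula. One plausible reading, which is an assumption here and not the author's stated method, is Little's law: concurrent connections are roughly QPS multiplied by the average response time. The function name estimate_keepalive and the sample numbers are hypothetical.

```shell
#!/bin/sh
# Estimate concurrent upstream connections as QPS x average response time
# (Little's law). This formula is an assumption, not taken from the article.
# Arguments: requests per second, average response time in milliseconds.
estimate_keepalive() {
    awk -v qps="$1" -v ms="$2" 'BEGIN {
        n = qps * ms / 1000
        c = int(n)
        if (c < n) c++      # round up to a whole connection
        print c
    }'
}

# Usage with hypothetical numbers: 2000 requests/s at 50 ms each
#   estimate_keepalive 2000 50
```

Note that the nginx keepalive directive limits idle connections per worker process, so an estimate for the whole server would need to be divided across workers and given some headroom.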

// Set up the app...
const server = app.listen(8080);
server.keepAliveTimeout = 61 * 1000;
server.headersTimeout = 65 * 1000; // Node.js >= 10.15.2 requires this value to be greater than keepAliveTimeout

The purpose of increasing Node's keepAliveTimeout is to keep the Node service from closing an idle connection before Nginx does: the callee's idle timeout must be longer than the caller's.

After this change, Nginx no longer returned 502 Bad Gateway during the stress test.
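The ordering rule can be written down as a small check. This is a sketch under assumptions: it treats 60 s as the idle timeout on the Nginx side (the default of keepalive_timeout in an upstream block since nginx 1.15.3), and the function name timeouts_ok is hypothetical.

```shell
#!/bin/sh
# Succeeds when the backend keeps idle connections open longer than the proxy
# and headersTimeout exceeds keepAliveTimeout. All arguments are milliseconds.
# timeouts_ok is a hypothetical helper name used for illustration.
timeouts_ok() {
    proxy_idle_ms=$1    # idle timeout on the proxy (caller) side
    keep_alive_ms=$2    # server.keepAliveTimeout on the Node (callee) side
    headers_ms=$3       # server.headersTimeout on the Node side
    [ "$keep_alive_ms" -gt "$proxy_idle_ms" ] && [ "$headers_ms" -gt "$keep_alive_ms" ]
}

# Usage with the values from this article, assuming a 60 s proxy idle timeout:
#   timeouts_ok 60000 61000 65000 && echo "ordering is safe"
```

With Node's default keepAliveTimeout of 5000 ms the check fails, which matches the failure described above.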

nginx startup error: Job for nginx.service failed because the control process exited with error code

>>> service nginx restart

Job for nginx.service failed because the control process exited with error code.

See "systemctl status nginx.service" and "journalctl -xe" for details.

Check as prompted:

systemctl status nginx.service

>>> systemctl status nginx.service

● nginx.service - A high performance web server and a reverse proxy server

Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since 五 2018-08-31 11:08:14 CST; 5min ago

Process: 4668 ExecStop=/sbin/start-stop-daemon --quiet --stop --retry QUIT/5 --pidfile /run/nginx.pid (code=exited, status=1/FAILURE)

Process: 28149 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=1/FAILURE)

Process: 28146 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)

Main PID: 4455 (code=killed, signal=KILL)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:14 user-70DGA014CN nginx[28149]: nginx: [emerg] still could not bind()

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Control process exited, code=exited status=1

8月 31 11:08:14 user-70DGA014CN systemd[1]: Failed to start A high performance web server and a reverse proxy server.

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Unit entered failed state.

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Failed with result 'exit-code'.

8月 31 11:08:49 user-70DGA014CN systemd[1]: Stopped A high performance web server and a reverse proxy server.

journalctl -xe

>>> journalctl -xe

8月 31 11:08:12 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:12 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:12 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:12 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

8月 31 11:08:13 user-70DGA014CN nginx[28149]: nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

8月 31 11:08:14 user-70DGA014CN nginx[28149]: nginx: [emerg] still could not bind()

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Control process exited, code=exited status=1

8月 31 11:08:14 user-70DGA014CN systemd[1]: Failed to start A high performance web server and a reverse proxy server.

-- Subject: nginx.service

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- nginx.service

--

-- Output: "failed".

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Unit entered failed state.

8月 31 11:08:14 user-70DGA014CN systemd[1]: nginx.service: Failed with result 'exit-code'.

8月 31 11:08:49 user-70DGA014CN systemd[1]: Stopped A high performance web server and a reverse proxy server.

-- Subject: nginx.service

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- nginx.service

From the above output, we can see that port 80, which nginx needs, is already in use (but this is not the main issue).

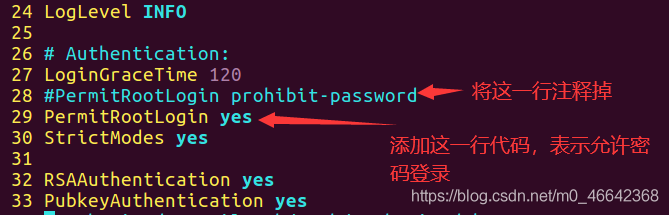

First solution:

Note: if you modified /etc/nginx/conf.d/default.conf or /etc/nginx/nginx.conf and then ran systemctl restart nginx.service, the following error appears:

>>> service nginx restart

Job for nginx.service failed because the control process exited with error code.

See "systemctl status nginx.service" and "journalctl -xe" for details.

In that case, open default.conf or nginx.conf and check whether a semicolon is missing.
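A syntax slip such as a missing semicolon is reported by nginx -t, so the configuration can be tested before nginx is touched. The sketch below reloads only when the test passes. The function name reload_if_valid and the NGINX_BIN override are hypothetical, added so the binary path can be changed.

```shell
#!/bin/sh
# Reload nginx only if the configuration test passes.
# NGINX_BIN is a hypothetical override for the path of the nginx binary.
reload_if_valid() {
    nginx_bin=${NGINX_BIN:-nginx}
    if "$nginx_bin" -t; then
        "$nginx_bin" -s reload
    else
        echo "configuration test failed; not reloading" >&2
        return 1
    fi
}

# Usage:
#   reload_if_valid
```

When the test fails, nginx -t prints the file and line number of the problem, which is usually the quickest way to find the missing semicolon.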

Second solution:

For example, when the server side is on Linux

Nextcloud reports the error "Access forbidden CSRF check failed"

Solution: configure Nginx so that cross-origin requests to Nextcloud work

### steps to reproduce

1. Log in as any user

2. Try to log out

3. Error

### expected behavior

The user should be logged out.

### actual behavior

Clicking logout shows the error:

"Access forbidden

CSRF check failed"

### solution (configure nginx)

1. The first part is to add a map block inside the http block of the global configuration (default path /etc/nginx/nginx.conf).

Edit nginx.conf and add the map to the http block:

vi /etc/nginx/nginx.conf    # add inside the http block, then save

map $http_upgrade $connection_upgrade {
    default upgrade;
    '' close;
}

2. The second part is the Nextcloud site configuration file (create /etc/nginx/conf.d/nextcloud.conf); the directives go inside its server block.

Configure the reverse proxy in nextcloud.conf by adding:

vi /etc/nginx/conf.d/nextcloud.conf

proxy_set_header Host $http_host;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

After the configuration is complete, run nginx -t to check it. Once it reports no errors, run nginx -s reload or systemctl restart nginx to apply the configuration.

3. Afterwards, visiting Nextcloud again may show a message that the domain you are visiting (the reverse proxy domain) is not in Nextcloud's trusted_domains.

To fix this, add the domain to the trusted_domains parameter in $nextcloud/config/config.php.

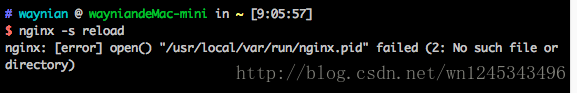

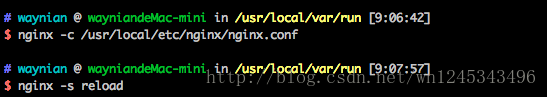

Starting Nginx fails with this error:

nginx: [error] open() "/usr/local/var/run/nginx.pid" failed (2: No such file or directory)

Solution: find the directory that contains your nginx.conf, then run

nginx -c /usr/local/etc/nginx/nginx.conf

and then run nginx -s reload.

Nginx serves .php files as downloads: this happens because Nginx is not configured to pass PHP files to the PHP interpreter when it encounters them.

Check whether php-fpm is running:

ps aux|grep 'php-fpm'

Start php-fpm if it is not:

service php-fpm start

Then pass .php requests to php-fpm:

location ~ \.php$ {
    fastcgi_pass 127.0.0.1:9000;  # php-fpm's default port is 9000
    fastcgi_index index.php;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    include fastcgi_params;
}

Go to the nginx configuration folder: cd /usr/local/nginx/conf

vim nginx.conf

......

http {
    ......

    server {
        listen 80;
        server_name localhost;

        #charset koi8-r;

        #access_log logs/host.access.log main;

        location / {
            root html/index/;
            index index.html index.htm;
        }

        ......
    }

    # Requests to www.test1.com resolve to the http://ip/test1 project by default
    server {
        listen 80;
        server_name www.test1.com;

        location / {
            root html/test1/;
            index index.html index.htm;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }

    # Requests to www.test2.com resolve to the http://ip/test2 project by default
    server {
        listen 80;
        server_name www.test2.com;

        location / {
            alias html/test2/;
            index index.html index.htm;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }

    ......
}

Each domain name resolves to the server's IP. When a request arrives, nginx determines from the domain name which site is being requested and serves the matching project.

Go to the nginx configuration folder: cd /usr/local/nginx/conf

Create a vhost folder, and inside it create www.test.com.conf:

server {
    listen 80;
    server_name www.test.com;

    location / {
        root html/www.test.com;
        index index.html index.htm;
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root html;
    }
}

Remember to change the root path (the project path served when www.test.com is visited).

The file name www.test.com.conf can follow your own domain name; using the template above, change server_name and root.

Create a new conf file, named after the project, for each project.

Go to the nginx configuration folder: cd /usr/local/nginx/conf

vim nginx.conf

......

http {
    ......

    # include the other configuration files
    include vhost/*.conf;

    ......
}

As before, the domain name resolves to the server's IP, and nginx matches the requested site to its project.
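Since every site file follows the same template, the per-project file can be generated. The following is a minimal sketch that prints a server block for a given domain, deriving root as html/&lt;domain&gt; as the www.test.com.conf template does. The function name make_vhost is hypothetical.

```shell
#!/bin/sh
# Print a server block for one site, following the www.test.com.conf template.
# Argument: the domain name; root is derived as html/<domain>.
# make_vhost is a hypothetical helper name used for illustration.
make_vhost() {
    domain=$1
    cat <<EOF
server {
    listen 80;
    server_name $domain;

    location / {
        root html/$domain;
        index index.html index.htm;
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root html;
    }
}
EOF
}

# Usage:
#   make_vhost www.test.com > /usr/local/nginx/conf/vhost/www.test.com.conf
```

After generating a file, running nginx -t and then nginx -s reload applies it, provided nginx.conf includes vhost/*.conf.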
