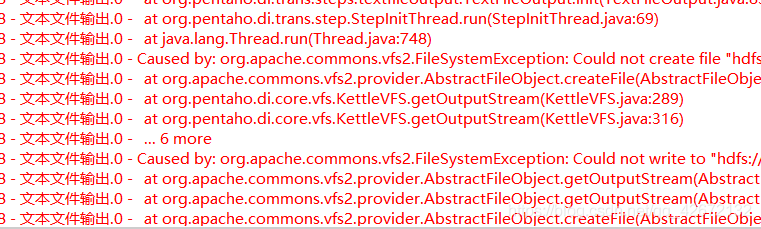

Caused by: org.apache.commons.vfs2.FileSystemException: Could not create file

First method.

Edit the Spoon.bat file and add the following JVM options to line 119.

"-DHADOOP_USER_NAME=xxx" "-Dfile.encoding=UTF-8"

Note: replace xxx with your own user name, i.e. the user the Hadoop client should act as on HDFS.

Second method. Make the HDFS root directory readable and writable by all users.

hdfs dfs -chmod 777 /
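
If opening the whole root directory is broader than you need, the same idea can be limited to the directory the job writes to. The following is only a minimal sketch under assumptions: `grant_dir_access`, `run`, and the `DRY_RUN` variable are hypothetical helper names (not part of Hadoop or Kettle), and `/user/xxx/output` in the usage line is a placeholder path. Only the `hdfs dfs -mkdir -p` and `hdfs dfs -chmod -R` commands come from the Hadoop CLI.

```shell
#!/bin/sh
# Sketch: open a single HDFS directory instead of "/".
# The function and variable names here are illustrative assumptions.

run() {
  # With DRY_RUN=1, print the command instead of executing it.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

grant_dir_access() {
  dir="$1"
  # Create the directory if it is missing, then open it (recursively) to all users.
  run hdfs dfs -mkdir -p "$dir" &&
  run hdfs dfs -chmod -R 777 "$dir"
}
```

For example, `DRY_RUN=1; grant_dir_access /user/xxx/output` prints the two `hdfs dfs` commands so you can check them first. Unset `DRY_RUN` to actually run them.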