LeNet was trained on the MNIST training set; the training code is not shown here.

Load the saved model directly:

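```python
import torch
# Full pickled model: the LeNet class must be importable; PyTorch 2.6+ needs weights_only=False
lenet = torch.load('resourses/trained_model/LeNet_trained.pkl', map_location='cpu', weights_only=False)
```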

The test code is attached below:

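```python
import torch
from PIL import Image
from torchvision import transforms

print("Testing")

# Define the conversion: force a 32x32 input (Resize(32) alone only scales
# the shorter side), use one grayscale channel, scale to [0, 1], then
# normalize to [-1, 1]
img_to_tensor = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.Grayscale(num_output_channels=1),
    transforms.ToTensor(),
    transforms.Normalize([0.5], [0.5]),
])

# Read in the test image and turn it into a batch of one: shape (1, 1, 32, 32)
test_images = Image.open('resourses/LeNet_test/0.png')
input_images = img_to_tensor(test_images).unsqueeze(0)

# Move the model and data to CUDA for computation if CUDA is available
USE_CUDA = torch.cuda.is_available()
if USE_CUDA:
    input_images = input_images.cuda()
    lenet = lenet.cuda()

# Switch to evaluation mode; no gradient tracking is needed for inference
lenet.eval()
with torch.no_grad():
    output_data = lenet(input_images)

# Print test information: the raw class scores and the predicted digit
test_labels = torch.max(output_data, 1)[1].cpu().numpy().squeeze(0)
print(output_data)
print(test_labels)
```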

At first, almost none of my own images were recognized correctly, and I could not find the reason. The model predicted 8 far more often than any other digit.
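To make observations like "almost nothing is correct" and "it keeps predicting 8" concrete, one could tally the accuracy and how often each digit is predicted across all custom test images. The sketch below is plain Python and assumes you have already collected the true digit and the model's predicted digit for each image (for example, if the true digit were encoded in the file name, as `0.png` might suggest; that naming convention is an assumption). The function name `summarize_predictions` and the example labels are hypothetical.

```python
from collections import Counter


def summarize_predictions(true_labels, predicted_labels):
    """Return (accuracy, histogram of predicted digits).

    Both arguments are equal-length sequences of ints in 0-9.
    """
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    if not true_labels:
        raise ValueError("need at least one prediction")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    accuracy = correct / len(true_labels)
    # Count how often each digit was predicted; one dominant digit (such as 8)
    # hints at a systematic mismatch between training and test inputs.
    histogram = Counter(predicted_labels)
    return accuracy, histogram


# Hypothetical example: ten images, and the model answers 8 for most of them
truth = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
preds = [8, 8, 8, 3, 8, 8, 8, 8, 8, 8]
acc, hist = summarize_predictions(truth, preds)
print(f"accuracy = {acc:.0%}")   # accuracy = 20%
print(hist.most_common(1))       # [(8, 9)]
```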

Later I looked up relevant material and found the following reasons:


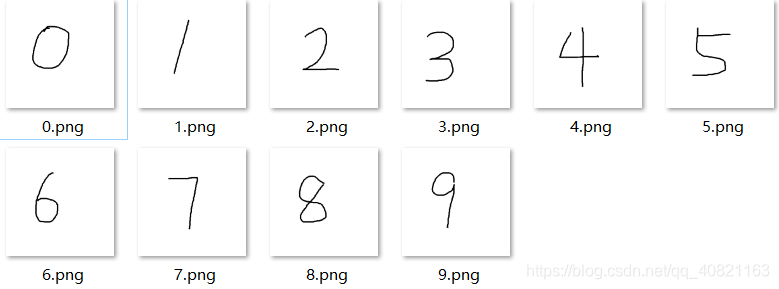

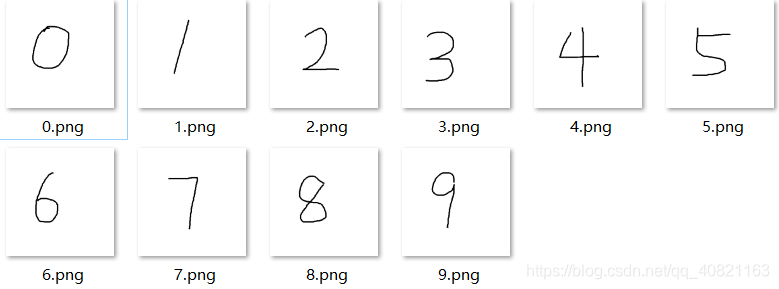

If you parse the MNIST data set, you will find that its images are white digits on a black background, whereas our custom test images are usually black digits on a white background. So I inverted the pixels of the custom test images and then re-tested.
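The polarity matters more than it might seem. Assuming the preprocessing from the test code above (`ToTensor` scales a pixel value $p \in \{0, \dots, 255\}$ to $p/255$, then `Normalize([0.5], [0.5])` subtracts 0.5 and divides by 0.5), the network input for a pixel and for its inverted value $255 - p$ work out as:

$$
x = \frac{p/255 - 0.5}{0.5} = \frac{2p}{255} - 1,
\qquad
x_{\text{inv}} = \frac{(255 - p)/255 - 0.5}{0.5} = 1 - \frac{2p}{255} = -x .
$$

So inversion flips the sign of every input value. A white background becomes $+1$ everywhere, whereas MNIST backgrounds are always $-1$, which is consistent with the model's predictions being unreliable before inversion.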

The pixel inversion code is as follows:

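```python
from PIL import Image, ImageOps

# ImageOps.invert does not support RGBA or palette ("P") images, and PNG
# files are often RGBA, so convert to grayscale ("L") first
image = Image.open('resourses/LeNet_test/0.png').convert('L')

# Map every pixel value p to 255 - p: black-on-white becomes white-on-black
image_invert = ImageOps.invert(image)

image_invert.show()
```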
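Rather than inverting every image by hand, one could check the background brightness first and invert only images that look like dark digits on a light background. The sketch below is a heuristic of my own assumption, not part of the original test code: it treats the mean of the image's border pixels as the background level, and the names `is_light_background` and `to_mnist_polarity` are hypothetical. It works on a 2-D `uint8` NumPy array, such as `np.asarray(image.convert('L'))` from a PIL image.

```python
import numpy as np


def is_light_background(gray, threshold=127.5):
    """Guess whether a 2-D grayscale image has a light background.

    Heuristic: digits are usually centred, so the border pixels are mostly
    background. Compare their mean brightness with the threshold.
    """
    gray = np.asarray(gray, dtype=np.float64)
    border = np.concatenate([gray[0, :], gray[-1, :], gray[1:-1, 0], gray[1:-1, -1]])
    return border.mean() > threshold


def to_mnist_polarity(gray):
    """Return a uint8 image with a dark background and light strokes, as in MNIST."""
    gray = np.asarray(gray, dtype=np.uint8)
    if is_light_background(gray):
        return 255 - gray  # same per-pixel mapping as PIL's ImageOps.invert
    return gray


# Synthetic example: a white 28x28 canvas with a black vertical stroke
canvas = np.full((28, 28), 255, dtype=np.uint8)
canvas[4:24, 13:15] = 0
fixed = to_mnist_polarity(canvas)
print(fixed[0, 0], fixed[10, 13])  # 0 255
```

Images that are already white-on-black pass through unchanged, so the same preprocessing can be applied to MNIST-style and custom images alike.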

After inverting the pixels, the test accuracy reached 50-60 percent, which is still not ideal. A further possible reason is the following:

The MNIST data set consists of digits handwritten by people in the United States, whose handwriting style and habits differ slightly from those of Chinese writers; this may also be a major factor affecting test accuracy. However, I have not yet measured the accuracy after changing the handwriting style of the test images.