RuntimeError: Unable to find a valid cuDNN algorithm to run convolution

Preface

Today we were training a model with YOLOv5 v6.0 and changed the batch size to 32. The following error occurred:

    Starting training for 100 epochs...

    Epoch gpu_mem box obj cls labels img_size
      0%| | 0/483 [00:04<?, ?it/s]
    Traceback (most recent call last):
      File "train.py", line 620, in <module>
        main(opt)
      File "train.py", line 517, in main
        train(opt.hyp, opt, device, callbacks)
      File "train.py", line 315, in train
        pred = model(imgs)  # forward
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl
        return forward_call(*input, **kwargs)
      File "D:\liufq\yolov5-6.0\models\yolo.py", line 126, in forward
        return self._forward_once(x, profile, visualize)  # single-scale inference, train
      File "D:\liufq\yolov5-6.0\models\yolo.py", line 149, in _forward_once
        x = m(x)  # run
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl
        return forward_call(*input, **kwargs)
      File "D:\liufq\yolov5-6.0\models\common.py", line 137, in forward
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl
        return forward_call(*input, **kwargs)
      File "D:\liufq\yolov5-6.0\models\common.py", line 45, in forward
        return self.act(self.bn(self.conv(x)))
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl
        return forward_call(*input, **kwargs)
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\conv.py", line 443, in forward
        return self._conv_forward(input, self.weight, self.bias)
      File "E:\Anaconda3\envs\yolov550\lib\site-packages\torch\nn\modules\conv.py", line 440, in _conv_forward
        self.padding, self.dilation, self.groups)
    RuntimeError: Unable to find a valid cuDNN algorithm to run convolution

Solution

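Reduce the batch size. This error often appears when the GPU does not have enough memory for the chosen batch size, so passing a smaller value to `--batch-size` when running `train.py` (for example 16 instead of 32) usually makes it go away.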

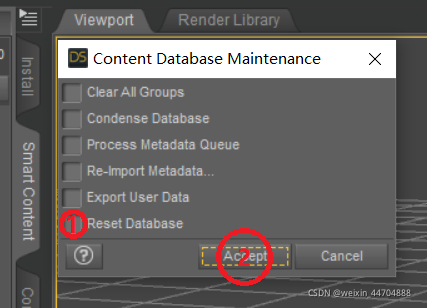

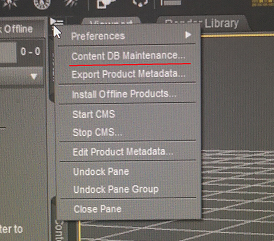

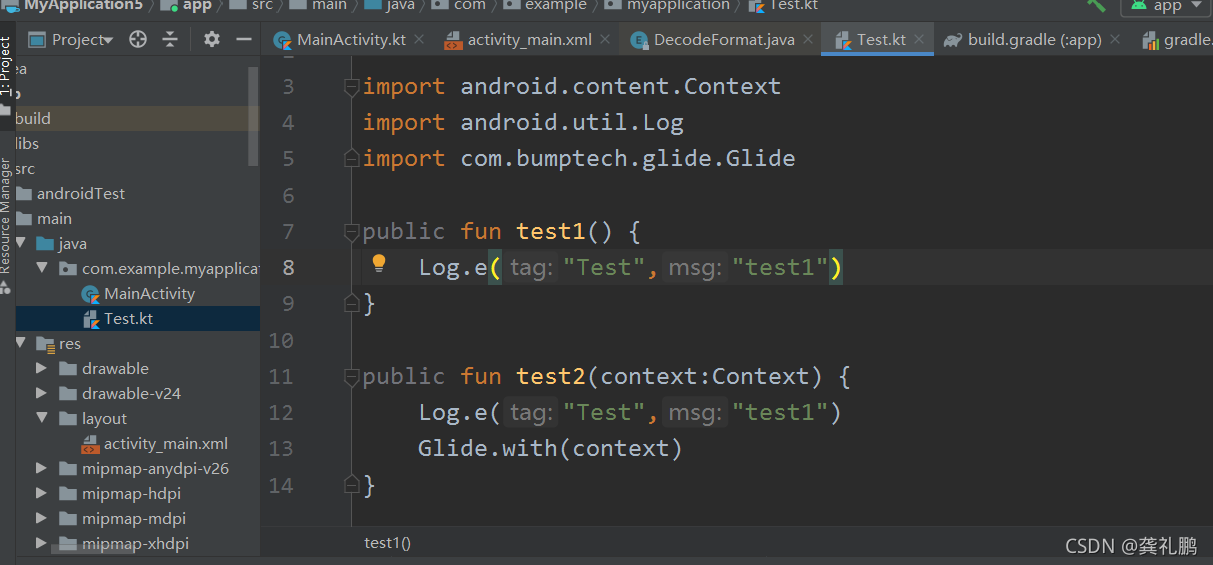

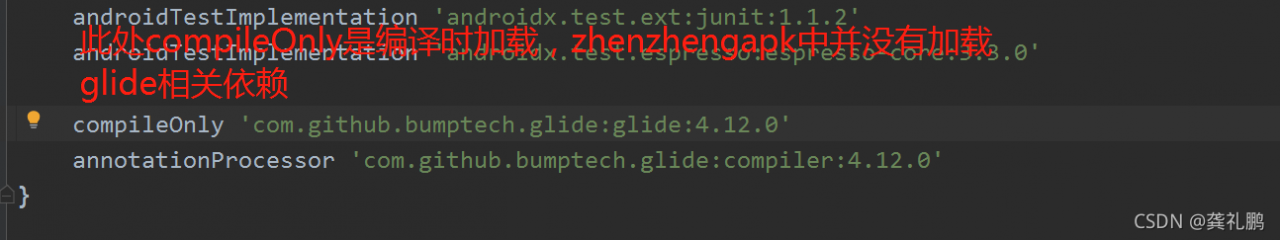

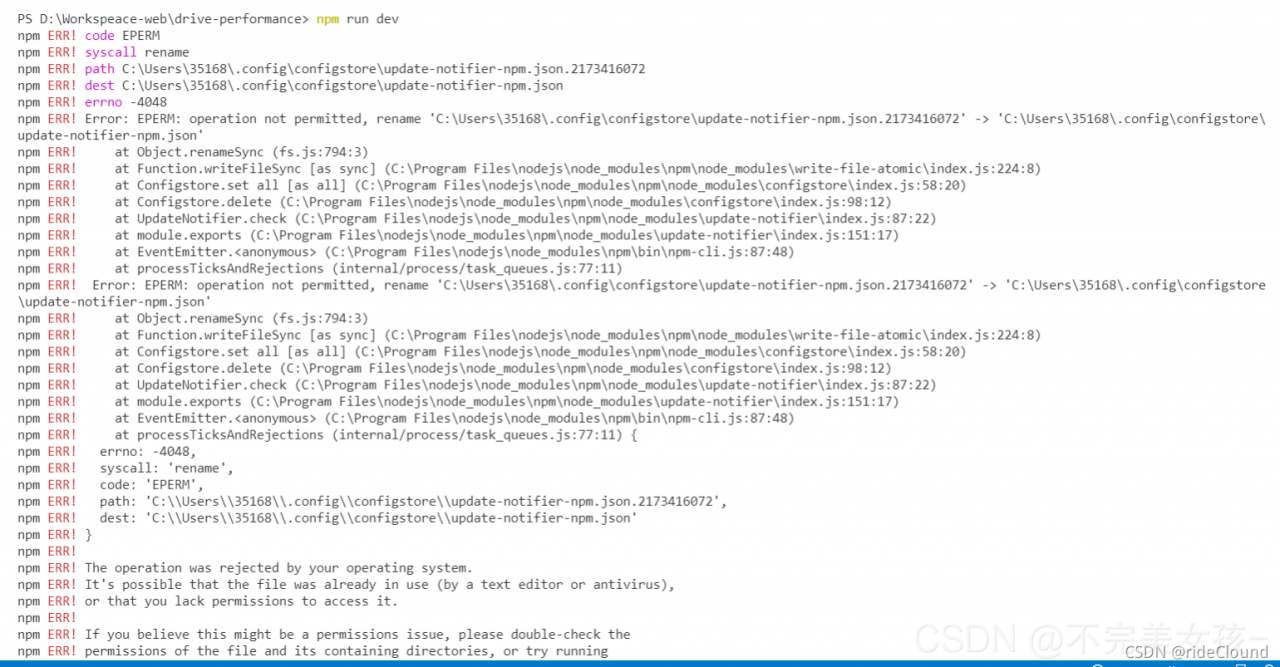
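Lowering the batch size by hand works, but the same trial and error can be sketched in a few lines of Python. This is a minimal sketch and not part of YOLOv5: `find_fitting_batch_size` and the `try_batch` callback are hypothetical names, and the code assumes that a batch which is too large raises a `RuntimeError` whose message mentions either "out of memory" or "cuDNN", as the error in the traceback above does. It halves the batch size until one step succeeds and re-raises any unrelated error.

```python
from typing import Callable


def find_fitting_batch_size(
    try_batch: Callable[[int], None],
    start: int = 32,
    minimum: int = 1,
) -> int:
    """Halve the batch size until one training step succeeds.

    `try_batch` is a hypothetical callback that runs one step at the given
    batch size and raises RuntimeError if the GPU cannot fit it.
    """
    batch = start
    while batch >= minimum:
        try:
            try_batch(batch)
            return batch  # this batch size ran without error
        except RuntimeError as exc:
            msg = str(exc)
            # Assumption: only memory-related failures mean "batch too big";
            # anything else is a real bug, so re-raise it unchanged.
            if "out of memory" not in msg and "cuDNN" not in msg:
                raise
            batch //= 2  # try half the batch size next
    raise RuntimeError(f"no batch size >= {minimum} fits in memory")


def fake_step(batch: int) -> None:
    # Simulates a GPU that can only fit 8 images per batch.
    if batch > 8:
        raise RuntimeError("Unable to find a valid cuDNN algorithm to run convolution")


print(find_fitting_batch_size(fake_step, start=32))  # 8
```

With real PyTorch, `try_batch` would run one forward and backward pass on a dummy batch, and it is probably worth calling `torch.cuda.empty_cache()` after each failure so that memory cached by the failed attempt is released before the next try. The value found this way could then be passed to `train.py` via `--batch-size`.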

When we ran into this problem, we first searched for solutions. The commonly suggested fixes are roughly the following: