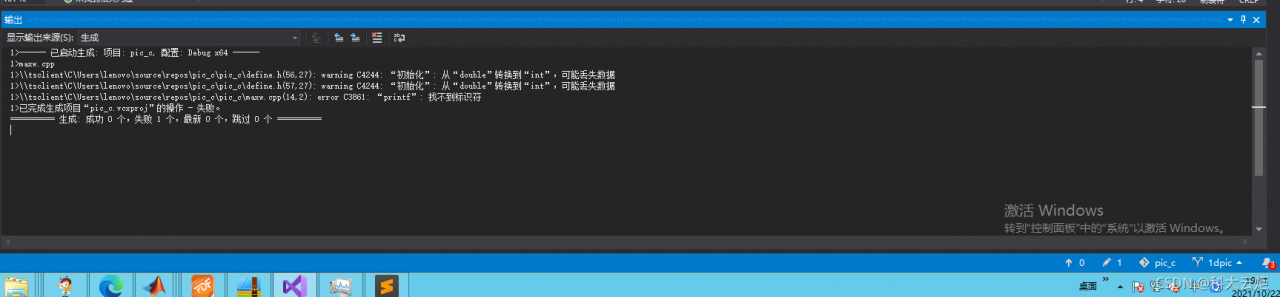

Problem: Elasticsearch returns the following error:

    {
      "error": {
        "root_cause": [
          {
            "type": "mapper_parsing_exception",
            "reason": "Root mapping definition has unsupported parameters: [article : {properties={id={index=not_analyzed, store=true, type=long}, title={analyzer=standard, index=analyzed, store=true, type=text}, content={analyzer=standard, index=analyzed, store=true, type=text}}}]"
          }
        ],
        "type": "mapper_parsing_exception",
        "reason": "Failed to parse mapping [_doc]: Root mapping definition has unsupported parameters: [article : {properties={id={index=not_analyzed, store=true, type=long}, title={analyzer=standard, index=analyzed, store=true, type=text}, content={analyzer=standard, index=analyzed, store=true, type=text}}}]",
        "caused_by": {
          "type": "mapper_parsing_exception",
          "reason": "Root mapping definition has unsupported parameters: [article : {properties={id={index=not_analyzed, store=true, type=long}, title={analyzer=standard, index=analyzed, store=true, type=text}, content={analyzer=standard, index=analyzed, store=true, type=text}}}]"
        }
      },
      "status": 400
    }

Incorrect configuration (rejected with the error above):

    {
      "mappings": {
        "article": {
          "properties": {
            "id": {
              "type": "long",
              "store": true,
              "index": "not_analyzed"
            },
            "title": {
              "type": "text",
              "store": true,
              "index": "analyzed",
              "analyzer": "standard"
            },
            "content": {
              "type": "text",
              "store": true,
              "index": "analyzed",
              "analyzer": "standard"
            }
          }
        }
      }
    }

Corrected configuration:

    {
      "mappings": {
        "properties": {
          "id": {
            "type": "long",
            "store": true
          },
          "title": {
            "type": "text",
            "store": true,
            "analyzer": "standard"
          },
          "content": {
            "type": "text",
            "store": true,
            "analyzer": "standard"
          }
        }
      }
    }

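Cause of problem: Elasticsearch 7 changed the mapping format. Index creation is typeless by default, so `properties` must sit directly under `mappings`; wrapping it in a type name such as `article` triggers the "Root mapping definition has unsupported parameters" error. The `index` parameter also accepts only `true` or `false`, so the legacy values `analyzed` and `not_analyzed` are dropped from the corrected configuration.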
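
For anyone with several old index definitions to migrate, the change from the incorrect to the corrected configuration can be sketched as a small Python helper. This is only an illustration, not an official migration tool: the function name `to_typeless_mapping` is hypothetical, it assumes the body wraps `properties` in exactly one type name, and it only looks at top-level fields (nested `properties` are left alone).

```python
import copy

# Legacy string values of "index" that both mean "indexed", which is the default.
LEGACY_INDEX_VALUES = ("analyzed", "not_analyzed")


def to_typeless_mapping(body):
    """Return a copy of an index-creation body without the type-name level.

    Hypothetical helper: assumes at most one type name under "mappings".
    """
    result = copy.deepcopy(body)
    mappings = result.get("mappings", {})

    # A typed mapping has a single key (the type name) that wraps "properties".
    if "properties" not in mappings and len(mappings) == 1:
        (type_body,) = mappings.values()
        if isinstance(type_body, dict) and "properties" in type_body:
            mappings = type_body

    # Drop the legacy "index" strings; leaving "index" out keeps the field indexed.
    for field in mappings.get("properties", {}).values():
        if field.get("index") in LEGACY_INDEX_VALUES:
            del field["index"]

    result["mappings"] = mappings
    return result


legacy_body = {
    "mappings": {
        "article": {
            "properties": {
                "id": {"type": "long", "store": True, "index": "not_analyzed"},
                "title": {
                    "type": "text",
                    "store": True,
                    "index": "analyzed",
                    "analyzer": "standard",
                },
            }
        }
    }
}

typeless_body = to_typeless_mapping(legacy_body)
```

The helper copies its input rather than mutating it, so the original body stays available for comparison. A legacy `"index": "no"` is deliberately not handled here, since it would need to become `"index": false` rather than be removed.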