Project scenario:

The MMKV version used in the project, 1.0.23, is quite old. It also pulls in libc++_shared.so (about 249 KB) in addition to libmmkv.so (about 40 KB).

Checking GitHub, I found that the latest version had reached 1.2.14 and that its AAR package had been optimized, so I decided to upgrade.

Problem description:

We upgraded MMKV in the project from 1.0.23 to 1.2.14. After fixing a long list of compilation errors (inconsistent Kotlin versions, a required Gradle upgrade, and so on), we thought everything was fine. Unexpectedly, the app then reported this error at startup:

Non-fatal Exception: java.lang.UnsatisfiedLinkError: dlopen failed: library "libmmkv.so" not found

I searched various posts online without finding an answer. Later I found that someone had raised a similar question in a GitHub issue: dlopen failed: library "libmmkv.so" not found (Tencent/MMKV Issue #958 on GitHub).

Following that lead, we cloned the source code from GitHub (Tencent/MMKV: An efficient, small mobile key-value storage framework by WeChat. Works on Android, iOS, macOS, Windows, and POSIX.) and studied it.

Cause analysis:

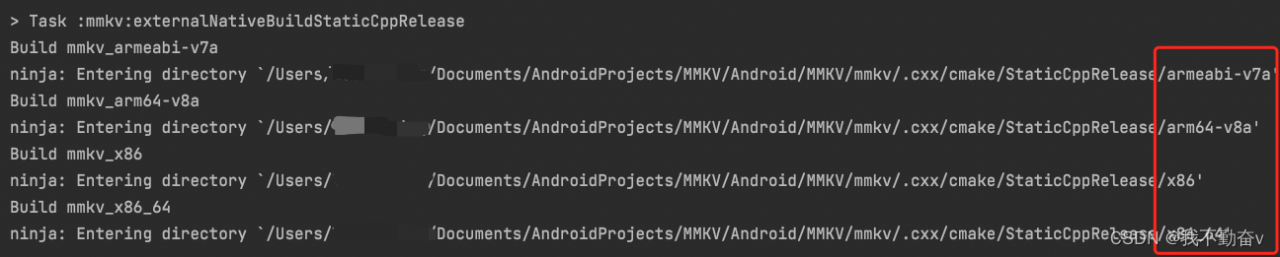

After building the mmkv module from the cloned source, the build log shows that .so files are generated for only the following four CPU architectures (ABIs):

armeabi-v7a, arm64-v8a, x86, x86_64

No armeabi .so is generated.

Our own project, however, only supports armeabi.

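So the cause is clearly related to the project's CPU architecture settings: the app is filtered to armeabi, but the new MMKV package provides no armeabi build of libmmkv.so. As a result, no libmmkv.so is packaged into the APK, and loading it fails at startup.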
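To confirm this for the exact artifact your build uses, you can list which ABI folders of the MMKV AAR (native libraries live under jni/<abi>/) or of the built APK (under lib/<abi>/) actually contain libmmkv.so. Below is a minimal Java sketch using only java.util.zip. The class name AbiScanner is hypothetical, and the AAR path is an assumption you must supply (in a typical setup it sits somewhere under ~/.gradle/caches/modules-2/files-2.1/com.tencent/mmkv/).

```java
import java.io.IOException;
import java.util.Enumeration;
import java.util.Set;
import java.util.TreeSet;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

/**
 * Hypothetical helper (not part of MMKV or the Android SDK): lists the ABI
 * folders inside an AAR (jni/<abi>/) or APK (lib/<abi>/) that contain a
 * given native library such as "libmmkv.so".
 */
class AbiScanner {

    static Set<String> abisContaining(String archivePath, String libName) throws IOException {
        Set<String> abis = new TreeSet<>(); // sorted for stable output
        try (ZipFile zip = new ZipFile(archivePath)) {
            Enumeration<? extends ZipEntry> entries = zip.entries();
            while (entries.hasMoreElements()) {
                String name = entries.nextElement().getName();
                // Match entries shaped like "<jni|lib>/<abi>/<libName>"
                String[] parts = name.split("/");
                if (parts.length == 3
                        && (parts[0].equals("jni") || parts[0].equals("lib"))
                        && parts[2].equals(libName)) {
                    abis.add(parts[1]);
                }
            }
        }
        return abis;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java AbiScanner <path-to-aar-or-apk>");
            System.exit(1);
        }
        Set<String> abis = abisContaining(args[0], "libmmkv.so");
        System.out.println("libmmkv.so found for: " + abis);
        if (!abis.contains("armeabi")) {
            System.out.println("No armeabi build: an app filtered to \"armeabi\" will not package libmmkv.so");
        }
    }
}
```

Running it once against the AAR and once against the final APK separates the two questions: what the library ships, and what your abiFilters actually let through.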

Why is no armeabi .so generated?

Starting with NDK r17, armeabi is no longer supported. Building for armeabi would require NDK r16 or earlier, and the Android Gradle plugin would have to be downgraded to 4.1.3 or below. However, Gradle in the project has already been upgraded to 7.x, so that route is not practical.

Solution:

Method 1: In the app's build.gradle, find the ndk abiFilters setting under android > buildTypes (it may also be declared under defaultConfig):

```groovy
ndk {
    abiFilters "armeabi"
}
```

Change it to:

```groovy
ndk {
    abiFilters "armeabi-v7a"
}
```

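armeabi-v7a is backward compatible with armeabi: armeabi-v7a devices can also run armeabi code, and virtually all 32-bit ARM Android devices in use today support armeabi-v7a. The only devices dropped are very old ARMv5/ARMv6 phones.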

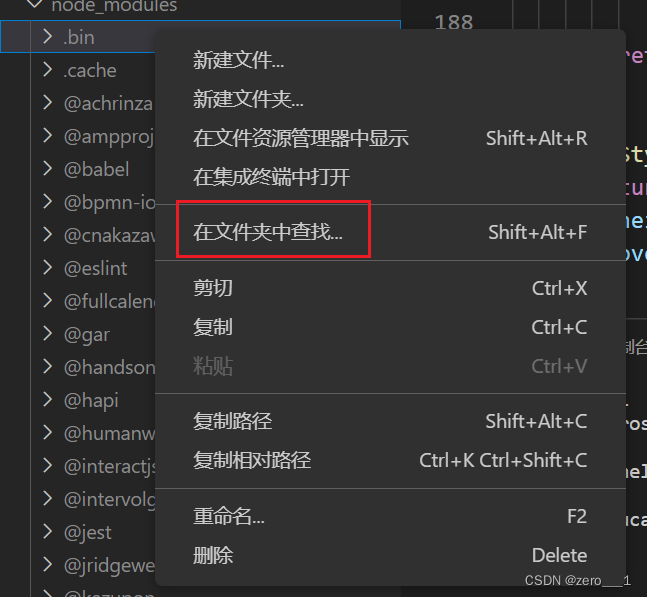

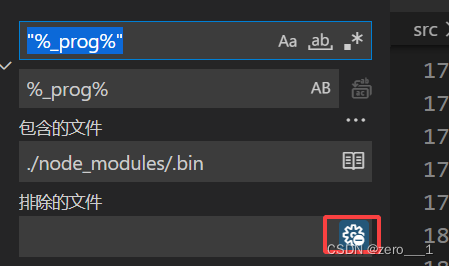

Method 2: If the project only has armeabi libraries and cannot move to armeabi-v7a, take the armeabi-v7a libmmkv.so out of the MMKV AAR resolved from Maven (an AAR is a zip archive whose native libraries live under jni/<abi>/), put it into the project's armeabi directory (by default app/src/main/jniLibs/armeabi), and keep abiFilters as "armeabi".
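The copy step in Method 2 can be scripted. The following is a minimal Java sketch that extracts jni/armeabi-v7a/libmmkv.so from the MMKV AAR into <jniLibs>/armeabi/. The class name CopyV7aToArmeabi is hypothetical, and both paths are assumptions: you pass in the AAR location and your jniLibs directory (app/src/main/jniLibs if you use the default source set).

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

/**
 * Hypothetical helper (not part of MMKV or the Android Gradle plugin):
 * copies jni/armeabi-v7a/<libName> out of an AAR into <jniLibsDir>/armeabi/.
 */
class CopyV7aToArmeabi {

    static Path copy(Path aar, Path jniLibsDir, String libName) throws IOException {
        String entryName = "jni/armeabi-v7a/" + libName;
        try (ZipFile zip = new ZipFile(aar.toFile())) {
            ZipEntry entry = zip.getEntry(entryName);
            if (entry == null) {
                throw new IOException(entryName + " not found in " + aar);
            }
            // Place the v7a binary in the armeabi folder that abiFilters still allows
            Path targetDir = jniLibsDir.resolve("armeabi");
            Files.createDirectories(targetDir);
            Path target = targetDir.resolve(libName);
            try (InputStream in = zip.getInputStream(entry)) {
                Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
            }
            return target;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: java CopyV7aToArmeabi <mmkv.aar> <app/src/main/jniLibs>");
            System.exit(1);
        }
        Path copied = copy(Paths.get(args[0]), Paths.get(args[1]), "libmmkv.so");
        System.out.println("Copied to " + copied);
    }
}
```

Because the .so is copied by hand, it has to be refreshed every time the MMKV dependency is upgraded; otherwise the Java classes and the native library can drift apart.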