Having recently been confused by several of PyTorch's Tensor dimension transformations, I dug into them. Here is how each one works and what it produces:

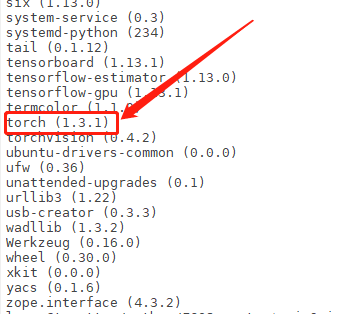

Note: torch.__version__ == '1.2.0'

transpose() and permute()

Both transpose() and permute() exchange the contents of different dimensions. The difference is that transpose() swaps exactly two specified dimensions, while permute() can reorder any number of dimensions in a single call. Here is the code:

transpose(): swapping two dimensions

    >>> import torch
    >>> a = torch.Tensor([[[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]],
    ...                   [[-1, -2, -3, -4, -5], [-6, -7, -8, -9, -10], [-11, -12, -13, -14, -15]]])
    >>> a.shape
    torch.Size([2, 3, 5])
    >>> print(a)
    tensor([[[  1.,   2.,   3.,   4.,   5.],
             [  6.,   7.,   8.,   9.,  10.],
             [ 11.,  12.,  13.,  14.,  15.]],

            [[ -1.,  -2.,  -3.,  -4.,  -5.],
             [ -6.,  -7.,  -8.,  -9., -10.],
             [-11., -12., -13., -14., -15.]]])
    >>> b = a.transpose(1, 2)  # swap dimensions 1 and 2; easy to follow. The result and its shape:
    >>> print(b, b.shape)
    tensor([[[  1.,   6.,  11.],
             [  2.,   7.,  12.],
             [  3.,   8.,  13.],
             [  4.,   9.,  14.],
             [  5.,  10.,  15.]],

            [[ -1.,  -6., -11.],
             [ -2.,  -7., -12.],
             [ -3.,  -8., -13.],
             [ -4.,  -9., -14.],
             [ -5., -10., -15.]]]) torch.Size([2, 5, 3])
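The output above follows one indexing rule: b[i][k][j] equals a[i][j][k]. Here is a minimal pure-Python model of transpose(1, 2) on nested lists that makes the rule explicit. The helper name transpose_1_2 is hypothetical. It only reproduces the indexing. It builds a copy, whereas PyTorch's transpose() returns a view that shares memory with the original Tensor.

```python
def transpose_1_2(t):
    """Hypothetical helper: swap dims 1 and 2 of a 3-D nested list.

    Mimics the indexing of Tensor.transpose(1, 2), but builds a new list
    (PyTorch does not copy; it returns a view).
    """
    d0, d1, d2 = len(t), len(t[0]), len(t[0][0])
    # The result has shape (d0, d2, d1) and result[i][k][j] == t[i][j][k].
    return [[[t[i][j][k] for j in range(d1)] for k in range(d2)] for i in range(d0)]


a = [[[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]],
     [[-1, -2, -3, -4, -5], [-6, -7, -8, -9, -10], [-11, -12, -13, -14, -15]]]
b = transpose_1_2(a)
print(b[0])  # [[1, 6, 11], [2, 7, 12], [3, 8, 13], [4, 9, 14], [5, 10, 15]]
```

The printed slice matches the first [5, 3] block of the PyTorch output.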

permute(): reordering any number of dimensions at once

    >>> c = a.permute(2, 0, 1)
    >>> print(c, c.shape)  # reorders the original dims (0, 1, 2) as (2, 0, 1), so the shape becomes [5, 2, 3]
    tensor([[[  1.,   6.,  11.],
             [ -1.,  -6., -11.]],

            [[  2.,   7.,  12.],
             [ -2.,  -7., -12.]],

            [[  3.,   8.,  13.],
             [ -3.,  -8., -13.]],

            [[  4.,   9.,  14.],
             [ -4.,  -9., -14.]],

            [[  5.,  10.,  15.],
             [ -5., -10., -15.]]]) torch.Size([5, 2, 3])
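The general rule behind permute(*dims) is that output dimension p walks along input dimension dims[p]. Here is a minimal pure-Python model of that rule for nested lists of any depth. The helpers shape_of, get, build and permute are hypothetical names for illustration, not PyTorch APIs. Like the previous sketch, this one copies, while PyTorch returns a view.

```python
def shape_of(t):
    """Shape of a rectangular nested list, e.g. [2, 3, 5]."""
    shape = []
    while isinstance(t, list):
        shape.append(len(t))
        t = t[0]
    return shape


def get(t, idx):
    """Read t[idx[0]][idx[1]]... from a nested list."""
    for i in idx:
        t = t[i]
    return t


def build(shape, value_at, prefix=()):
    """Build a nested list of the given shape, filling each slot via value_at(index)."""
    if len(prefix) == len(shape):
        return value_at(prefix)
    return [build(shape, value_at, prefix + (i,)) for i in range(shape[len(prefix)])]


def permute(t, *dims):
    """Hypothetical model of Tensor.permute(*dims): output dim p is input dim dims[p]."""
    src_shape = shape_of(t)
    out_shape = [src_shape[d] for d in dims]

    def value_at(out_idx):
        src_idx = [0] * len(dims)
        for p, d in enumerate(dims):
            src_idx[d] = out_idx[p]  # output position p indexes along input dim d
        return get(t, src_idx)

    return build(out_shape, value_at)


a = [[[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]],
     [[-1, -2, -3, -4, -5], [-6, -7, -8, -9, -10], [-11, -12, -13, -14, -15]]]
c = permute(a, 2, 0, 1)
print(shape_of(c))  # [5, 2, 3]
print(c[0])         # [[1, 6, 11], [-1, -6, -11]]
```

With dims = (0, 2, 1) the same function reproduces transpose(1, 2), which shows that transpose() is just the two-dimension special case of permute().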

Expressing permute() with chained transpose() calls:

    >>> b = a.permute(2, 0, 1)
    >>> c = a.transpose(1, 2).transpose(0, 1)
    >>> print(b == c, b.shape)
    tensor([[[True, True, True],
             [True, True, True]],

            [[True, True, True],
             [True, True, True]],

            [[True, True, True],
             [True, True, True]],

            [[True, True, True],
             [True, True, True]],

            [[True, True, True],
             [True, True, True]]]) torch.Size([5, 2, 3])

As the code shows, swapping dimensions 1 and 2 of Tensor a and then swapping dimensions 0 and 1 of the result gives the same Tensor as a.permute(2, 0, 1).
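One way to check such a chain without running PyTorch is to track which original dimension sits at each position after every transpose. The sketch below does that bookkeeping in plain Python. The helper names apply_transpose and transposes_for are hypothetical. transposes_for finds one chain of swaps equivalent to a given permute order, chosen greedily position by position. It is not a PyTorch function.

```python
def apply_transpose(order, d0, d1):
    """Track which original dim sits at each position after transpose(d0, d1)."""
    order = list(order)
    order[d0], order[d1] = order[d1], order[d0]
    return order


def transposes_for(dims):
    """Hypothetical helper: one sequence of transpose(i, j) calls equivalent to permute(*dims)."""
    order = list(range(len(dims)))
    swaps = []
    for pos, want in enumerate(dims):
        cur = order.index(want)
        if cur != pos:  # bring the wanted original dim into position `pos`
            order = apply_transpose(order, pos, cur)
            swaps.append((pos, cur))
    return swaps


# The chain used in the text: transpose(1, 2) followed by transpose(0, 1).
order = apply_transpose(apply_transpose([0, 1, 2], 1, 2), 0, 1)
print(order)                          # [2, 0, 1], i.e. permute(2, 0, 1)
print([[2, 3, 5][d] for d in order])  # [5, 2, 3], the resulting shape
print(transposes_for((2, 0, 1)))      # [(0, 2), (1, 2)]
```

The last line shows that the decomposition is not unique. a.transpose(0, 2).transpose(1, 2) also yields the order (2, 0, 1), the same as a.permute(2, 0, 1).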

transpose() and view()

view() is another very common PyTorch function. It also changes a Tensor's dimensions, but in a very different way from transpose()/permute(). Where transpose() faithfully exchanges the Tensor's original dimensions, view() is much more direct. It treats all of the Tensor's elements as if they were flattened into one dimension (in row-major order) and then rebuilds a Tensor with the requested shape. The code is as follows:

    >>> # still the same Tensor a as above
    >>> print(a.shape)
    torch.Size([2, 3, 5])
    >>> print(a.view(2, 5, 3))
    tensor([[[  1.,   2.,   3.],
             [  4.,   5.,   6.],
             [  7.,   8.,   9.],
             [ 10.,  11.,  12.],
             [ 13.,  14.,  15.]],

            [[ -1.,  -2.,  -3.],
             [ -4.,  -5.,  -6.],
             [ -7.,  -8.,  -9.],
             [-10., -11., -12.],
             [-13., -14., -15.]]])
    >>> c = a.transpose(1, 2)
    >>> print(c, c.shape)
    tensor([[[  1.,   6.,  11.],
             [  2.,   7.,  12.],
             [  3.,   8.,  13.],
             [  4.,   9.,  14.],
             [  5.,  10.,  15.]],

            [[ -1.,  -6., -11.],
             [ -2.,  -7., -12.],
             [ -3.,  -8., -13.],
             [ -4.,  -9., -14.],
             [ -5., -10., -15.]]]) torch.Size([2, 5, 3])

As the code shows, view(2, 5, 3) and transpose(1, 2) produce Tensors of the same shape, [2, 5, 3], but their contents differ. view() simply refills the elements, in their original order, into a Tensor of shape (2, 5, 3). transpose(1, 2), by contrast, actually exchanges the contents of dimensions 1 and 2 (the second and third dimensions).
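The "flatten, then refill" description of view() can be modelled in a few lines of plain Python. This is a sketch under stated assumptions. The names flatten, refill and view are hypothetical, and the model copies data, whereas PyTorch's view() returns a Tensor that shares memory with the original. The error message only loosely imitates PyTorch's.

```python
def flatten(t):
    """Flatten a nested list in row-major (C) order."""
    if not isinstance(t, list):
        return [t]
    out = []
    for x in t:
        out.extend(flatten(x))
    return out


def refill(flat, shape):
    """Cut a flat list into a nested list of the given shape, row-major."""
    if len(shape) == 1:
        return list(flat)
    step = len(flat) // shape[0]
    return [refill(flat[i * step:(i + 1) * step], shape[1:]) for i in range(shape[0])]


def view(t, *shape):
    """Hypothetical model of Tensor.view: same elements, same order, new shape."""
    flat = flatten(t)
    size = 1
    for s in shape:
        size *= s
    if size != len(flat):
        raise ValueError(f"shape {list(shape)} is invalid for input of size {len(flat)}")
    return refill(flat, list(shape))


a = [[[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]],
     [[-1, -2, -3, -4, -5], [-6, -7, -8, -9, -10], [-11, -12, -13, -14, -15]]]
v = view(a, 2, 5, 3)
print(v[0])  # [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12], [13, 14, 15]]
```

Because flatten(v) == flatten(a), the element order never changes. That is exactly why the rows of a.view(2, 5, 3) differ from those of a.transpose(1, 2).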

Moreover, in some cases view() cannot be called on the Tensor returned by transpose(), because that Tensor is not contiguous in memory. NumPy arrays have exactly the same contiguity issue, and there is a great explanation here for
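Why is a transposed Tensor non-contiguous? In PyTorch, a freshly created contiguous Tensor of shape [2, 3, 5] has a.stride() == (15, 5, 1). transpose(1, 2) only swaps the shape and stride entries, giving strides (15, 1, 5), and moves no data. The pure-Python sketch below models that stride arithmetic. The helper names contiguous_strides and storage_offsets are hypothetical. The contiguity test is simplified, since PyTorch's real is_contiguous() check has extra rules, for example for dimensions of size 1.

```python
from itertools import product


def contiguous_strides(shape):
    """Row-major strides: storage slots to skip per step along each dim."""
    strides = [1] * len(shape)
    for i in range(len(shape) - 2, -1, -1):
        strides[i] = strides[i + 1] * shape[i + 1]
    return strides


def storage_offsets(shape, strides):
    """Storage offset of every element, visited in row-major order of `shape`."""
    return [sum(i * s for i, s in zip(idx, strides))
            for idx in product(*(range(n) for n in shape))]


shape = [2, 3, 5]
strides = contiguous_strides(shape)                # [15, 5, 1]

# transpose(1, 2): swap the shape and stride entries; no element moves.
t_shape = [shape[0], shape[2], shape[1]]           # [2, 5, 3]
t_strides = [strides[0], strides[2], strides[1]]   # [15, 1, 5]

print(storage_offsets(shape, strides)[:6])         # [0, 1, 2, 3, 4, 5]
print(storage_offsets(t_shape, t_strides)[:6])     # [0, 5, 10, 1, 6, 11]
print(t_strides == contiguous_strides(t_shape))    # False: not contiguous
```

Reading the transposed Tensor in row-major order jumps around in memory (0, 5, 10, 1, ...). That is why view(), which assumes a compatible memory layout, can fail on it. In PyTorch, calling .contiguous() first (which copies the data into row-major order) or using .reshape() instead avoids the problem.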