An error was reported today.

error: where in subquery source

Reason:

This happens because subqueries in Hive need to be given an alias.

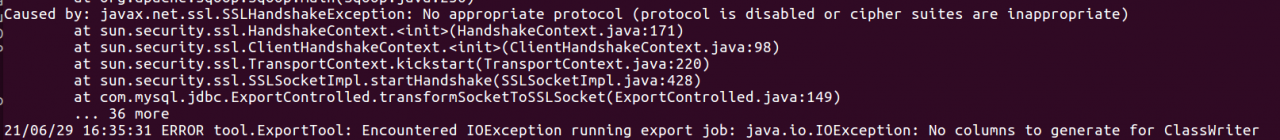

The error is as follows

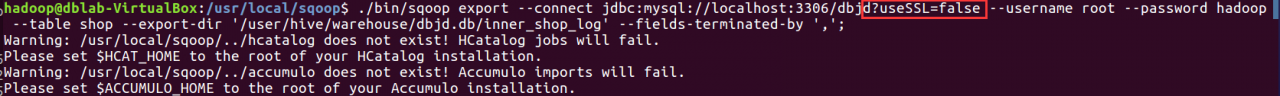

Solution: append useSSL=false to the MySQL connection URL, as shown in the figure below

Then run the import again
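The URL change can be sketched as a small shell helper. The function name add_use_ssl_false is hypothetical and only illustrates how the parameter is joined with "?" or "&"; it is not part of Hive or Sqoop. When the URL is stored in an XML file such as hive-site.xml, the "&" has to be written as "&amp;".

```shell
# Hypothetical helper: append useSSL=false to a MySQL JDBC URL.
add_use_ssl_false() {
  url="$1"
  case "$url" in
    *useSSL=*) printf '%s\n' "$url" ;;                # already set, leave untouched
    *\?*)      printf '%s&useSSL=false\n' "$url" ;;   # other parameters exist, join with &
    *)         printf '%s?useSSL=false\n' "$url" ;;   # first parameter, join with ?
  esac
}
```

For example, add_use_ssl_false 'jdbc:mysql://localhost:3306/hive' prints jdbc:mysql://localhost:3306/hive?useSSL=false.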

When initializing the Hive metastore with schematool -initSchema -dbType mysql -verbose, the following error occurs:

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLException : Access denied for user 'root'@'hadoop102' (using password: YES)

SQL Error code: 1045

Cause analysis:

"Access denied" means that MySQL refused the connection from Hive, so there is a problem with the account permissions or the password configured for connecting to the MySQL database.

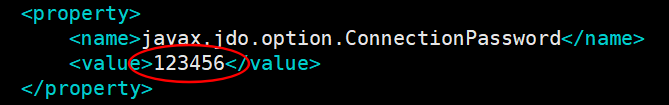

When configuring the Metastore to MySQL, configure the hive-site.xml. It is found that the password connecting to the MySQL database in the figure below is forgotten to be modified when pasting the configuration;

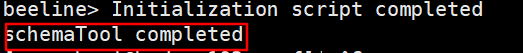

After correction, initialize hive metadata and execute the following command:

schematool -initSchema -dbType mysql -verboseInitialization complete.

This error report is due to my carelessness, which may not be universal. It is only used to remind you to check these two configurations!

Preface

This article belongs to the column "Big Data Abnormal Problems Summary" and is the author's original work. Please cite the source when quoting it, and point out any shortcomings or errors in the comments. Thank you!

For the table of contents and references of this column, see "Big Data Abnormal Problems Summary".

Problem

When installing and deploying Hive 3.1.2, an error occurred during startup:

[root@node2 apache-hive-3.1.2-bin]# ./bin/hive

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/opt/java/bin:/opt/maven/bin:/opt/bigdata/hadoop-3.2.2/bin:/opt/bigdata/hadoop-3.2.2/sbin:/opt/bigdata/spark-3.2.0/bin:/opt/bigdata/spark-3.2.0/sbin:/opt/java/bin:/opt/maven/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1357)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1338)

at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:536)

at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:554)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:448)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5141)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:97)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

Solution

From the error log, we can see that the problem lies in the guava package: either the jar is missing or there is a version conflict. Let's first check whether the jar exists:

[root@node2 apache-hive-3.1.2-bin]# find / -name "*guava*"

find: ‘/proc/9666’: No such file or directory

/opt/maven/lib/guava.license

/opt/maven/lib/guava-25.1-android.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/common/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/hdfs/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/httpfs/tomcat/webapps/webhdfs/WEB-INF/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/kms/tomcat/webapps/kms/WEB-INF/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/yarn/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/tools/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/hdfs/lib/guava-27.0-jre.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

/opt/bigdata/spark-3.2.0/jars/guava-14.0.1.jar

/opt/bigdata/apache-hive-3.1.2-bin/lib/guava-19.0.jar

/opt/bigdata/apache-hive-3.1.2-bin/lib/jersey-guava-2.25.1.jar

Both Hive's lib directory and Hadoop's common/lib directory contain a guava jar, but the versions differ (guava-19.0.jar in Hive, guava-27.0-jre.jar in Hadoop). We replace guava-19.0.jar in Hive's lib directory with guava-27.0-jre.jar:

[root@node2 apache-hive-3.1.2-bin]# cd lib

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2308517 Sep 27 2018 guava-19.0.jar

-rwxr-xr-x. 1 hadoop hadoop 971309 May 21 2019 jersey-guava-2.25.1.jar

[root@node2 lib]# cd /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2747878 Sep 26 00:18 guava-27.0-jre.jar

-rwxr-xr-x. 1 hadoop hadoop 2199 Sep 26 00:18 listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

[root@node2 lib]# cd /opt/bigdata/apache-hive-3.1.2-bin/lib/

[root@node2 lib]# mv guava-19.0.jar guava-19.0.jar.bak

[root@node2 lib]# cp /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar /opt/bigdata/apache-hive-3.1.2-bin/lib/

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2308517 Sep 27 2018 guava-19.0.jar.bak

-rwxr-xr-x. 1 root root 2747878 Jun 19 13:23 guava-27.0-jre.jar

-rwxr-xr-x. 1 hadoop hadoop 971309 May 21 2019 jersey-guava-2.25.1.jar

[root@node2 lib]# chmod 755 guava-27.0-jre.jar

[root@node2 lib]# chown hadoop:hadoop guava-27.0-jre.jar

We also switch to the hadoop user to run Hive (this ensures that we run as the Hadoop superuser):

[root@node2 lib]# su - hadoop

Last login: Thu Jun 17 22:14:01 CST 2021 on pts/0

[hadoop@node2 ~]$ cd /opt/bigdata/apache-hive-3.1.2-bin/

[hadoop@node2 apache-hive-3.1.2-bin]$ ./bin/hive

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/java/bin:/opt/maven/bin:/opt/bigdata/hadoop-3.2.2/bin:/opt/bigdata/hadoop-3.2.2/sbin:/opt/bigdata/spark-3.2.0/bin:/opt/bigdata/spark-3.2.0/sbin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 901d74e6-fa5d-45b5-b949-bebdacb582ed

Logging initialized using configuration in jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

The problem has been solved
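The version check done by hand above can be sketched in shell. The function names guava_version and guava_in_dir are hypothetical helpers for illustration; they only read file names and do not move or copy any jar.

```shell
# Hypothetical helper: print the version part of a guava jar file name,
# e.g. guava-19.0.jar -> 19.0
guava_version() {
  name=$(basename "$1")
  name=${name#guava-}              # drop the "guava-" prefix
  printf '%s\n' "${name%.jar}"     # drop the ".jar" suffix
}

# Hypothetical helper: print the version of the first guava-*.jar found
# directly inside a lib directory (jersey-guava-*.jar does not match).
guava_in_dir() {
  for jar in "$1"/guava-*.jar; do
    [ -e "$jar" ] || continue      # glob did not match anything
    guava_version "$jar"
    return 0
  done
  return 1
}
```

Comparing guava_in_dir /opt/bigdata/apache-hive-3.1.2-bin/lib with guava_in_dir /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib before starting Hive shows whether the two versions differ.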

Reason: my cluster runs CentOS 7. According to information found online, the buffer/cache mechanism of CentOS 7 keeps growing, which occupies memory and reduces the memory available to programs. As a result, the Java garbage collection mechanism reclaims the YarnChild process, and the main class cannot be found.

Solution: flush the cached data from memory to disk, and then release the memory manually.

The procedure found online is:

Run the sync command several times, and then run the following in turn:

echo 1 > /proc/sys/vm/drop_caches

echo 2 > /proc/sys/vm/drop_caches

echo 3 > /proc/sys/vm/drop_caches

However, running the following statements to clear the cache reports a permission error: -bash: /proc/sys/vm/drop_caches: Permission denied

sudo echo 1 > /proc/sys/vm/drop_caches

sudo echo 2 > /proc/sys/vm/drop_caches

sudo echo 3 > /proc/sys/vm/drop_caches

sync

Bash refuses because the redirection ">" is performed by bash itself, not by echo. sudo gives root privileges only to the echo command,

not to the ">" redirection, so bash, still running as the ordinary user, has no permission to write to the file.

Solution:

Use "sh -c", which makes the shell execute a string as one complete command, so the redirection also runs with root privileges

sudo sh -c "echo 1 > /proc/sys/vm/drop_caches"

sudo sh -c "echo 2 > /proc/sys/vm/drop_caches"

sudo sh -c "echo 3 > /proc/sys/vm/drop_caches"

Or

echo 1 | sudo tee /proc/sys/vm/drop_caches
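The tee variant can be wrapped in a small function. This is only a sketch: the name write_privileged and the RUN_AS variable are assumptions introduced for illustration, not standard tools.

```shell
# Hypothetical helper: write a value to a file that only root may modify.
# RUN_AS is an illustrative variable; when it is unset, sudo is used.
write_privileged() {
  value=$1
  target=$2
  runner=${RUN_AS-sudo}
  # tee is started by sudo and opens the target file itself, so the
  # unprivileged shell never redirects into the protected file.
  printf '%s\n' "$value" | $runner tee "$target" > /dev/null
}
```

With this in place, running sync followed by write_privileged 3 /proc/sys/vm/drop_caches has the same effect as the last "sudo sh -c" command above.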

Hive SQL syntax differs from the MySQL syntax most of us use every day. SQL written out of MySQL habit often fails in Hive, and the cause is hard to spot. This article therefore records the symptoms of such problems and their solutions.

problem: a subquery without an alias fails to compile (state = 42000)

solution: give the subquery an alias, for example select * from (select * from a) t

Error: Error while compiling statement: FAILED: SemanticException [Error 10025]: Expression not in GROUP BY key id (state=42000,code=10025)

cause: a field that appears in the select statement but not in the group by statement causes this error

solution: change select id, name from a group by name to select collect_set(id), name from a group by name

Error: Error while compiling statement: FAILED: SemanticException [Error 10004]: Line 1:13 Invalid table alias or column reference 'id':

cause: the corresponding field in the subquery has changed, for example because a function was applied to it or it was renamed

solution: change select id, name from (select collect_set(id), name from a group by name) t to select id, name from (select collect_set(id) id, name from a group by name) t, or refer to the generated column name with select t.`_c0`, name from (select collect_set(id), name from a group by name) t (Hive names an unaliased expression column _c0)

problem: data cannot be queried after several SQL statements are combined with union in Hive

cause: the data after the union is saved in HDFS in multiple new directories under the table directory

solution: add the following configuration (it can be entered directly on the CLI command line) set mapred.input.dir.recursive=true;

Or wrap the multiple union statements in an outer select statement.

problem: executing HQL on Tez reports an out-of-memory error

solution: adjust the size of the container

set hive.tez.container.size=4096;

set hive.tez.java.opts=-Xmx3072m;

problem: Hive does not query subdirectories recursively by default, so when creating a table, if the specified directory contains subdirectories, it reports ERROR: not a file

solution: apply the following four settings in the Hive CLI to enable recursive access to subdirectories. Note that this is not a recursive query as such; all the data under the directory is loaded. Therefore, when the subdirectories are very deep or numerous, it is very slow.

set hive.input.dir.recursive=true;

set hive.mapred.supports.subdirectories=true;

set hive.supports.subdirectories=true;

set mapred.input.dir.recursive=true;
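For the Tez container settings earlier in this section, the two numbers used (a 4096 MB container with a 3072 MB heap) put the heap at 75% of the container. A rough sketch of that arithmetic follows; the function name tez_java_opts is hypothetical, and the 75% ratio is an assumption inferred from those two numbers rather than an official rule.

```shell
# Hypothetical helper: derive a -Xmx option from a Tez container size in MB.
# The 3/4 ratio is an assumption taken from the 4096 / 3072 pair above.
tez_java_opts() {
  container_mb=$1
  heap_mb=$(( container_mb * 3 / 4 ))
  printf '%s\n' "-Xmx${heap_mb}m"
}
```

Calling tez_java_opts 4096 prints -Xmx3072m, the value used for hive.tez.java.opts above.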

cd $HIVE_HOME/conf

vi hive-site.xml

The error occurs because the configuration in hive-site.xml is incomplete or a configuration item is wrong

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/opt/software/hadoop/hive110/warehouse</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.56.130:3306/hive110?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

</configuration>

In particular, check whether the IP address in the ConnectionURL configuration item is correct

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.56.130:3306/hive110?createDatabaseIfNotExist=true</value>
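To check the address, it can help to pull the host and port out of the URL before testing whether that address is reachable. The following is a minimal sketch using only shell parameter expansion; the function name jdbc_host_port is hypothetical, and it assumes a URL of the form jdbc:mysql://host:port/database.

```shell
# Hypothetical helper: print the host:port part of a MySQL JDBC URL.
jdbc_host_port() {
  rest=${1#jdbc:mysql://}     # drop the scheme prefix
  rest=${rest%%/*}            # drop "/database?parameters"
  printf '%s\n' "$rest"
}
```

For the URL above, jdbc_host_port prints 192.168.56.130:3306, which can then be compared with the address the MySQL server actually listens on.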

explain select deptno `dept`,

year(hiredate) `year`,

sum(sal)

from tb_emp

group by deptno, year(hiredate);

1. Check how many stages there are

In this example there are two

+------------------------------------+

|Explain |

+------------------------------------+

|STAGE DEPENDENCIES: |

| Stage-1 is a root stage |

| Stage-0 depends on stages: Stage-1|

+------------------------------------+

Stage-0 depends on Stage-1, which means Stage-1 is executed first, then Stage-0
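For plans with more stages, the dependency lines can be turned into "parent -> child" pairs to read the execution order more easily. This is a sketch only: the function name stage_edges is hypothetical, and it assumes the EXPLAIN output is piped in as text in the format shown above.

```shell
# Hypothetical helper: read EXPLAIN output on stdin and print one
# "parent -> child" line for every "depends on stages" entry.
stage_edges() {
  sed -n 's/^[| ]*\(Stage-[0-9][0-9]*\) depends on stages: \([^|]*\).*$/\1 \2/p' |
  while read -r child parents; do
    # several parents are separated by commas in the EXPLAIN output
    for parent in $(printf '%s' "$parents" | tr ',' ' '); do
      printf '%s -> %s\n' "$parent" "$child"
    done
  done
}
```

Fed the STAGE DEPENDENCIES block above, it prints Stage-1 -> Stage-0.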

2. View the Map phase of Stage-1

You can see that the Map phase mainly performs the table scan, the statistics on the table's data volume, and the evaluation of the expressions block

+-------------------------------------------------------------------------------------------------+

|Explain |

+-------------------------------------------------------------------------------------------------+

| Map Reduce |

| Map Operator Tree: |

| TableScan |

| alias: tb_emp |

| Statistics: Num rows: 6 Data size: 718 Basic stats: COMPLETE Column stats: NONE |

| Select Operator |

| expressions: deptno (type: int), year(hiredate) (type: int), sal (type: float) |

| outputColumnNames: _col0, _col1, _col2 |

| Statistics: Num rows: 6 Data size: 718 Basic stats: COMPLETE Column stats: NONE |

| Group By Operator |

| aggregations: sum(_col2) |

| keys: _col0 (type: int), _col1 (type: int) |

| mode: hash |

| outputColumnNames: _col0, _col1, _col2 |

| Statistics: Num rows: 6 Data size: 718 Basic stats: COMPLETE Column stats: NONE |

| Reduce Output Operator |

| key expressions: _col0 (type: int), _col1 (type: int) |

| sort order: ++ |

| Map-reduce partition columns: _col0 (type: int), _col1 (type: int) |

| Statistics: Num rows: 6 Data size: 718 Basic stats: COMPLETE Column stats: NONE|

| value expressions: _col2 (type: double) |

+-------------------------------------------------------------------------------------------------+

3. Look at the Reduce phase

Determine input and output formats

+-------------------------------------------------------------------------------------------+

|Explain |

+-------------------------------------------------------------------------------------------+

| Reduce Operator Tree: |

| Group By Operator |

| aggregations: sum(VALUE._col0) |

| keys: KEY._col0 (type: int), KEY._col1 (type: int) |

| mode: mergepartial |

| outputColumnNames: _col0, _col1, _col2 |

| Statistics: Num rows: 3 Data size: 359 Basic stats: COMPLETE Column stats: NONE |

| File Output Operator |

| compressed: false |

| Statistics: Num rows: 3 Data size: 359 Basic stats: COMPLETE Column stats: NONE|

| table: |

| input format: org.apache.hadoop.mapred.SequenceFileInputFormat |

| output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat |

| serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe |

+-------------------------------------------------------------------------------------------+

Opening the CM management interface at work today showed a problem.

View the logs:

raini@biyuzhe:~$ schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/raini/app/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/raini/app/hadoop-2.8.0/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Connection User: hive

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.mysql.sql

Initialization script completed

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException : Table 'hive.VERSION' doesn't exist

SQL Error code: 1146

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

The problem above: after installing Hive 2.1.1, the schema initialization did not succeed and the metastore was not found (MySQL is used, and it worked fine before the upgrade).

Reason:

Hive 1.2.1 was used previously, and the MySQL user for the metastore connection was hive. The old metadata may not have been deleted cleanly, or some configuration files could not be deleted.

Solution:

Use another user.

mysql> create user 'hive1'@'%' identified by 'hive1';

mysql> grant all on *.* to 'hive1'@localhost identified by 'hive1';

mysql> flush privileges;

and modify hive-site.xml:

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive1</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive1</value>

</property>

This step is required for Hive 2.0:

mysql> SOURCE /home/raini/app/hive/scripts/metastore/upgrade/mysql/hive-schema-2.1.0.mysql.sql;

Start Hive, and the problem is fixed.

Problem 2:

hive (default)> create database anserchapp;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:Got exception: java.io.IOException Failed on local exception: java.io.IOException: Couldn't set up IO streams; Host Details : local host is: "biyuzhe/127.0.1.1"; destination host is: "user":8020; )

Settings:

mysql> ALTER DATABASE hive character set latin1;

Query OK, 1 row affected (0.00 sec)

Error:

hive (default)> create database anserchapp;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:java.lang.IllegalArgumentException: java.net.UnknownHostException: user)

1. Problem description

The sqoop list-tables tool works, but the import tool fails. Strange!

[hadoop@master ~]$ sqoop import --username test --password test --connect jdbc:mysql://172.1.1.96:3306/test -m 1 --delete-target-dir --table student_exam_score_20170802 --fields-terminated-by '\t' --hive-import --hive-database bond_edu --hive-table student_exam_score_20170802

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/11/08 11:31:13 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/11/08 11:31:13 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/11/08 11:31:13 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/11/08 11:31:13 INFO tool.CodeGenTool: Beginning code generation

18/11/08 11:31:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student_exam_score_20170802` AS t LIMIT 1

18/11/08 11:31:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student_exam_score_20170802` AS t LIMIT 1

18/11/08 11:31:14 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop-2.8.1/share/hadoop/mapreduce

Note: /tmp/sqoop-hadoop/compile/98d200728b6a446d170eba746e2c9a4c/student_exam_score_20170802.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/11/08 11:31:18 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/98d200728b6a446d170eba746e2c9a4c/student_exam_score_20170802.jar

18/11/08 11:31:19 INFO tool.ImportTool: Destination directory student_exam_score_20170802 is not present, hence not deleting.

18/11/08 11:31:19 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/11/08 11:31:19 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/11/08 11:31:19 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/11/08 11:31:19 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/11/08 11:31:19 INFO mapreduce.ImportJobBase: Beginning import of student_exam_score_20170802

18/11/08 11:31:20 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/11/08 11:31:20 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/11/08 11:31:20 INFO client.RMProxy: Connecting to ResourceManager at master/10.0.1.118:18040

18/11/08 11:31:24 INFO db.DBInputFormat: Using read commited transaction isolation

18/11/08 11:31:24 INFO mapreduce.JobSubmitter: number of splits:1

18/11/08 11:31:24 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1541640011445_0018

18/11/08 11:31:25 INFO impl.YarnClientImpl: Submitted application application_1541640011445_0018

18/11/08 11:31:25 INFO mapreduce.Job: The url to track the job: http://master:18088/proxy/application_1541640011445_0018/

18/11/08 11:31:25 INFO mapreduce.Job: Running job: job_1541640011445_0018

18/11/08 11:31:37 INFO mapreduce.Job: Job job_1541640011445_0018 running in uber mode : false

18/11/08 11:31:37 INFO mapreduce.Job: map 0% reduce 0%

18/11/08 11:31:45 INFO mapreduce.Job: Task Id : attempt_1541640011445_0018_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2189)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2222)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2017)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: java.net.SocketException: Network is unreachable (connect failed)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 26 more

18/11/08 11:31:51 INFO mapreduce.Job: Task Id : attempt_1541640011445_0018_m_000000_1, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2189)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2222)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2017)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: java.net.SocketException: Network is unreachable (connect failed)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 26 more

18/11/08 11:31:57 INFO mapreduce.Job: Task Id : attempt_1541640011445_0018_m_000000_2, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2189)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2222)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2017)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: java.net.SocketException: Network is unreachable (connect failed)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 26 more

18/11/08 11:32:05 INFO mapreduce.Job: map 100% reduce 0%

18/11/08 11:32:06 INFO mapreduce.Job: Job job_1541640011445_0018 failed with state FAILED due to: Task failed task_1541640011445_0018_m_000000

Job failed as tasks failed. failedMaps:1 failedReduces:0

18/11/08 11:32:06 INFO mapreduce.Job: Counters: 8

Job Counters

Failed map tasks=4

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=20616

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=20616

Total vcore-seconds taken by all map tasks=20616

Total megabyte-seconds taken by all map tasks=21110784

18/11/08 11:32:06 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

18/11/08 11:32:06 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 46.2311 seconds (0 bytes/sec)

18/11/08 11:32:06 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

18/11/08 11:32:06 INFO mapreduce.ImportJobBase: Retrieved 0 records.

18/11/08 11:32:06 ERROR tool.ImportTool: Error during import: Import job failed!

[hadoop@master ~]$ sqoop list-tables --username test --password test --connect jdbc:mysql://172.1.1.96:3306/test

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/11/08 11:35:27 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/11/08 11:35:27 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/11/08 11:35:27 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

a_jw_coursejoin_detail_f

a_test

bk_fee_common

bk_student_rect

bk_subjectdet

bonus

dept

emp

exam_ticket

gg

gg_tets

id

incre_table

p_bk_real_lession_cost_detail

registration

salgrade

student_exam_score_20170802

student_exam_score_20170803

student_exam_score_20170906

student_exam_term

t

t_card_package

t_dictionary

t_sms_send

t_time

t_tracelog_cgi

t_tracelog_cgi_20180920

t_tracelog_cgi_20180921

t_tracelog_cgi_tmp

tbl_name

test_1

test_2

test_3

test_4

test_5

test_x

treenodes

tt1

tt2

tt3

tt4

user

z

[hadoop@master ~]$

2. Problem solving

2.1 MySQL on the same host as Sqoop (local)

What I found when researching this:

1. In the connection URL, replace localhost with the machine's IP address (or configure the /etc/hosts file)

sqoop import --username test --password test --connect jdbc:mysql://127.0.0.1:3306/test?autoReconnect=true --delete-target-dir --table t --fields-terminated-by '\t' -m 1 --hive-import --hive-database test --hive-table t

2. Grant privileges to the user (skip this if the user already has them)

grant all privileges on test.* to test@'%' IDENTIFIED BY 'test' WITH GRANT OPTION;

flush privileges;

Import with localhost (127.0.0.1) failed:

[hadoop@master ~]$ sqoop import --username test --password test --connect jdbc:mysql://127.0.0.1:3306/test?autoReconnect=true --delete-target-dir --table t --fields-terminated-by '\t' -m 1 --hive-import --hive-database test --hive-table t

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/11/08 11:53:58 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/11/08 11:53:58 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/11/08 11:53:58 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/11/08 11:53:58 INFO tool.CodeGenTool: Beginning code generation

18/11/08 11:53:59 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t` AS t LIMIT 1

18/11/08 11:53:59 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t` AS t LIMIT 1

18/11/08 11:53:59 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop-2.8.1/share/hadoop/mapreduce

Note: /tmp/sqoop-hadoop/compile/910a58c86a0bd49d993fb3c8e9860e5f/t.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/11/08 11:54:01 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/910a58c86a0bd49d993fb3c8e9860e5f/t.jar

18/11/08 11:54:03 INFO tool.ImportTool: Destination directory t is not present, hence not deleting.

18/11/08 11:54:03 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/11/08 11:54:03 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/11/08 11:54:03 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/11/08 11:54:03 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/11/08 11:54:03 INFO mapreduce.ImportJobBase: Beginning import of t

18/11/08 11:54:03 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/11/08 11:54:03 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/11/08 11:54:03 INFO client.RMProxy: Connecting to ResourceManager at master/10.0.1.118:18040

18/11/08 11:54:07 INFO db.DBInputFormat: Using read commited transaction isolation

18/11/08 11:54:07 INFO mapreduce.JobSubmitter: number of splits:1

18/11/08 11:54:07 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1541640011445_0023

18/11/08 11:54:07 INFO impl.YarnClientImpl: Submitted application application_1541640011445_0023

18/11/08 11:54:07 INFO mapreduce.Job: The url to track the job: http://master:18088/proxy/application_1541640011445_0023/

18/11/08 11:54:07 INFO mapreduce.Job: Running job: job_1541640011445_0023

18/11/08 11:54:19 INFO mapreduce.Job: Job job_1541640011445_0023 running in uber mode : false

18/11/08 11:54:19 INFO mapreduce.Job: map 0% reduce 0%

18/11/08 11:54:31 INFO mapreduce.Job: Task Id : attempt_1541640011445_0023_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.Util.getInstance(Util.java:408)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:918)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:897)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:886)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:860)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2097)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2022)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2189)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2038)

... 24 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 26 more

18/11/08 11:54:41 INFO mapreduce.Job: Task Id : attempt_1541640011445_0023_m_000000_1, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.Util.getInstance(Util.java:408)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:918)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:897)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:886)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:860)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2097)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2022)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: java.sql.SQLException: Access denied for user 'test'@'localhost' (using password: YES)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:964)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3973)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3909)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:873)

at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1710)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1226)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2191)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2038)

... 24 more

18/11/08 11:54:51 INFO mapreduce.Job: Task Id : attempt_1541640011445_0023_m_000000_2, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:749)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:175)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:169)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server. Attempted reconnect 3 times. Giving up.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.Util.getInstance(Util.java:408)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:918)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:897)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:886)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:860)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2097)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2022)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more

Caused by: java.sql.SQLException: Access denied for user 'test'@'localhost' (using password: YES)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:964)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3973)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3909)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:873)

at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1710)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1226)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2191)

at com.mysql.jdbc.ConnectionImpl.connectWithRetries(ConnectionImpl.java:2038)

... 24 more

18/11/08 11:55:03 INFO mapreduce.Job: map 100% reduce 0%

18/11/08 11:55:03 INFO mapreduce.Job: Job job_1541640011445_0023 failed with state FAILED due to: Task failed task_1541640011445_0023_m_000000

Job failed as tasks failed. failedMaps:1 failedReduces:0

18/11/08 11:55:03 INFO mapreduce.Job: Counters: 8

Job Counters

Failed map tasks=4

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=36276

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=36276

Total vcore-seconds taken by all map tasks=36276

Total megabyte-seconds taken by all map tasks=37146624

18/11/08 11:55:03 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

18/11/08 11:55:03 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 60.1829 seconds (0 bytes/sec)

18/11/08 11:55:03 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

18/11/08 11:55:03 INFO mapreduce.ImportJobBase: Retrieved 0 records.

18/11/08 11:55:03 ERROR tool.ImportTool: Error during import: Import job failed!
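One plausible reading of the log above: the map tasks run on cluster nodes, and a loopback address such as 127.0.0.1 always refers to whichever node the task lands on. That would be consistent with one attempt ending in "Connection refused" and the others in "Access denied for user 'test'@'localhost'". The sketch below flags such URLs before a job is submitted. It is only an illustration: the function name uses_loopback is hypothetical and it only handles URLs of the form jdbc:mysql://host[:port]/db.

```shell
# Sketch: return 0 when a MySQL JDBC URL points at a loopback host.
# The function name is illustrative, not part of Sqoop.
uses_loopback() {
  local rest host
  rest="${1#jdbc:mysql://}"   # drop the scheme prefix
  host="${rest%%[/:?]*}"      # host ends at the first '/', ':' or '?'
  case "$host" in
    localhost|127.*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `uses_loopback "jdbc:mysql://127.0.0.1:3306/test?autoReconnect=true" && echo "use the node's IP instead"` prints the warning, while the URL with 10.0.1.118 does not.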

[hadoop@master ~]$

Import with the IP address succeeded:

[hadoop@master ~]$ sqoop import --username test --password test --connect jdbc:mysql://10.0.1.118:3306/test?autoReconnect=true --delete-target-dir --table t --fields-terminated-by '\t' -m 1 --hive-import --hive-database test --hive-table t

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/11/08 11:51:29 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/11/08 11:51:29 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/11/08 11:51:30 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/11/08 11:51:30 INFO tool.CodeGenTool: Beginning code generation

18/11/08 11:51:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t` AS t LIMIT 1

18/11/08 11:51:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t` AS t LIMIT 1

18/11/08 11:51:30 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop-2.8.1/share/hadoop/mapreduce

Note: /tmp/sqoop-hadoop/compile/8dc5c288374d370426b87eca124b15e0/t.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/11/08 11:51:33 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/8dc5c288374d370426b87eca124b15e0/t.jar

18/11/08 11:51:34 INFO tool.ImportTool: Destination directory t deleted.

18/11/08 11:51:34 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/11/08 11:51:34 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/11/08 11:51:34 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/11/08 11:51:34 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/11/08 11:51:34 INFO mapreduce.ImportJobBase: Beginning import of t

18/11/08 11:51:34 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/11/08 11:51:34 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/11/08 11:51:34 INFO client.RMProxy: Connecting to ResourceManager at master/10.0.1.118:18040

18/11/08 11:51:37 INFO db.DBInputFormat: Using read commited transaction isolation

18/11/08 11:51:37 INFO mapreduce.JobSubmitter: number of splits:1

18/11/08 11:51:38 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1541640011445_0022

18/11/08 11:51:38 INFO impl.YarnClientImpl: Submitted application application_1541640011445_0022

18/11/08 11:51:38 INFO mapreduce.Job: The url to track the job: http://master:18088/proxy/application_1541640011445_0022/

18/11/08 11:51:38 INFO mapreduce.Job: Running job: job_1541640011445_0022

18/11/08 11:51:49 INFO mapreduce.Job: Job job_1541640011445_0022 running in uber mode : false

18/11/08 11:51:49 INFO mapreduce.Job: map 0% reduce 0%

18/11/08 11:51:57 INFO mapreduce.Job: map 100% reduce 0%

18/11/08 11:51:57 INFO mapreduce.Job: Job job_1541640011445_0022 completed successfully

18/11/08 11:51:57 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=160855

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=76

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=5208

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=5208

Total vcore-seconds taken by all map tasks=5208

Total megabyte-seconds taken by all map tasks=5332992

Map-Reduce Framework

Map input records=10

Map output records=10

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=98

CPU time spent (ms)=820

Physical memory (bytes) snapshot=107737088

Virtual memory (bytes) snapshot=2091540480

Total committed heap usage (bytes)=18919424

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=76

18/11/08 11:51:57 INFO mapreduce.ImportJobBase: Transferred 76 bytes in 22.9794 seconds (3.3073 bytes/sec)

18/11/08 11:51:57 INFO mapreduce.ImportJobBase: Retrieved 10 records.

18/11/08 11:51:57 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t` AS t LIMIT 1

18/11/08 11:51:57 INFO hive.HiveImport: Loading uploaded data into Hive

18/11/08 11:52:08 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.

18/11/08 11:52:08 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/apache-hive-2.1.1/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

18/11/08 11:52:08 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

18/11/08 11:52:08 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

18/11/08 11:52:08 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

18/11/08 11:52:09 INFO hive.HiveImport:

18/11/08 11:52:09 INFO hive.HiveImport: Logging initialized using configuration in file:/home/hadoop/apache-hive-2.1.1/conf/hive-log4j2.properties Async: true

18/11/08 11:52:18 INFO hive.HiveImport: OK

18/11/08 11:52:18 INFO hive.HiveImport: Time taken: 1.476 seconds

18/11/08 11:52:19 INFO hive.HiveImport: Loading data to table test.t

18/11/08 11:52:19 INFO hive.HiveImport: OK

18/11/08 11:52:19 INFO hive.HiveImport: Time taken: 1.235 seconds

18/11/08 11:52:20 INFO hive.HiveImport: Hive import complete.

18/11/08 11:52:20 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

2.2 MySQL on a remote host

On a multi-node Hadoop cluster with many data nodes, you must make sure that at least N data nodes can reach the MySQL database, where N is greater than or equal to the value given to Sqoop's -m parameter. Sqoop runs as MapReduce underneath, and MapReduce runs on the Hadoop cluster, so every Hadoop node must be able to connect to the MySQL database. You can test this from each node with mysql -h***** -u***** -p*****.
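When the mysql client is not installed on every node, a plain TCP check can stand in for the test above. This is a minimal sketch, assuming bash (for its /dev/tcp redirection) and the coreutils timeout command are available on the node; the function names jdbc_host_port and can_reach are hypothetical. It only shows that the port is open, not that the account is allowed to log in.

```shell
# Sketch: check from the current node whether the MySQL endpoint named in a
# JDBC URL accepts TCP connections. Function names are illustrative only.

# Print "host port" for a URL of the form jdbc:mysql://host[:port]/db[?opts].
# Falls back to MySQL's default port 3306 when the URL has no port.
jdbc_host_port() {
  local rest hostport host port
  rest="${1#jdbc:mysql://}"   # strip the scheme prefix
  hostport="${rest%%/*}"      # keep everything before the first '/'
  host="${hostport%%:*}"
  case "$hostport" in
    *:*) port="${hostport##*:}" ;;
    *)   port=3306 ;;
  esac
  printf '%s %s\n' "$host" "$port"
}

# Return 0 if a TCP connection to host:port opens within 5 seconds.
can_reach() {
  timeout 5 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}
```

Run on each node, `set -- $(jdbc_host_port "jdbc:mysql://172.1.1.96:3306/test"); can_reach "$1" "$2" && echo reachable || echo unreachable` reports whether that node could open the connection a map task would need.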

My environment:

Three virtual machines, each with two network cards: one private (for communication within the cluster) and one public, bridged (for communication with outside machines).

| node | IP |
| --- | --- |
| master | 10.0.1.118 |
| slave-1 | 10.0.1.227 |
| slave-2 | 10.0.1.226 |

slave-1 connecting to the remote database:

slave-2 connecting to the remote database:

Importing table test.student_exam_score_20170802 from the remote host 172.1.1.96 into the Hive table bond_edu.student_exam_score_20170802:

Summary:

1. To import remote MySQL data into Hive with Sqoop, at least N data nodes must be able to connect to the remote database, where N is greater than or equal to Sqoop's degree of parallelism (the -m value).

2. For a local import, Sqoop users may need to use the IP address or modify the hosts file.

3. sqoop list-tables does not seem to require the data nodes (not confirmed).

Adding a field to a Hive table and modifying a field comment

(1) Create the test table:

use mart_flow_test;

(2) Add a field:

use mart_flow_test;

alter table detail_flow_test add columns(original_union_id string);

(3) Modify the comment:

use mart_flow_test;

alter table detail_flow_conversion_base_raw change column original_union_id original_union_id string COMMENT 'original device unique id';
