Today my program kept failing with a CUDA out-of-memory error. After a long debugging session, it turned out to be a question of which GPU my PyTorch process could actually see.

At first I suspected that the graphics cards on the server were already in use, but when I checked `nvidia-smi` I found that none of the three GPUs was being used. So that explanation was clearly ruled out. Then why the error?

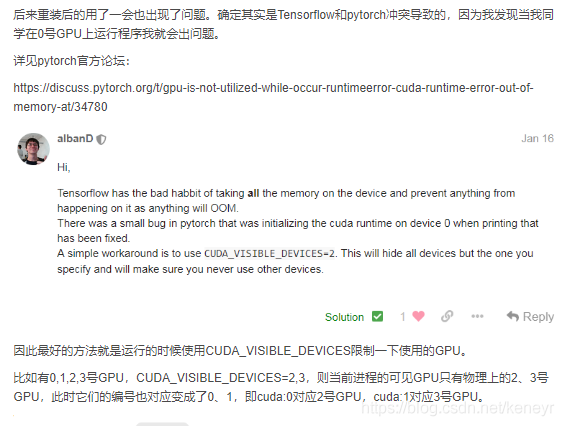

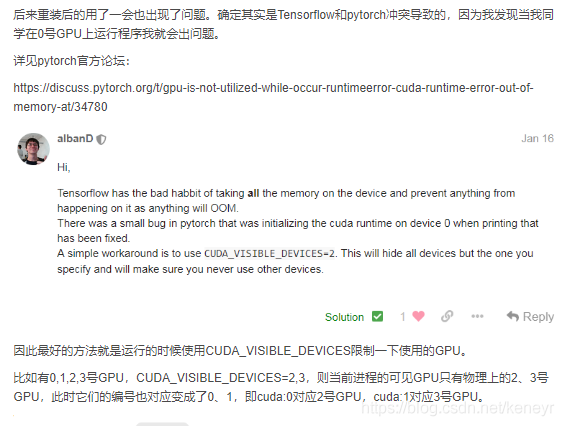
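The same check can be scripted instead of read off the screen. This is only a minimal sketch, assuming `nvidia-smi` is on the PATH and reports plain integer memory values; the helper names `parse_gpu_memory` and `query_gpu_memory` are hypothetical, and the sample string in the usage part is made up for illustration, not output from my server.

```python
import subprocess

# Ask nvidia-smi for one CSV line per GPU: index, used MiB, total MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' lines (values in MiB) into a list of dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, used, total = [field.strip() for field in line.split(",")]
        gpus.append(
            {"index": int(index), "used_mib": int(used), "total_mib": int(total)}
        )
    return gpus


def query_gpu_memory():
    """Run nvidia-smi and return the parsed per-GPU memory usage."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)


if __name__ == "__main__":
    # Hypothetical sample text, so the parser can be tried without a GPU.
    sample = "0, 11, 11178\n1, 11, 11178\n2, 11, 11178\n"
    for gpu in parse_gpu_memory(sample):
        print(gpu)
```

On a machine with the NVIDIA driver installed, calling `query_gpu_memory()` should give the same picture as the screenshot: if every `used_mib` is near zero, the cards are not busy and the out-of-memory error has another cause.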

Others say the cause is a conflict between the TensorFlow and PyTorch versions. But I don't even have TensorFlow installed.

The post that finally helped: http://www.cnblogs.com/jisongxie/p/10276742.html

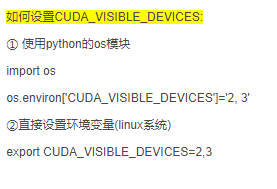

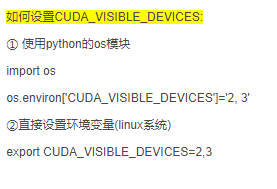

Yes, like that blogger, I was also asking for GPU 0. But for some reason my PyTorch process could only see physical GPU 2, and the server has no GPU 3. Is that what went wrong?

So I changed the code to set explicitly which GPU on the server PyTorch can see:

`os.environ['CUDA_VISIBLE_DEVICES'] = '0'`

After that, the program ran happily on physical GPU 0.
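For reference, here is how I understand the index mapping. This is a minimal sketch, assuming the usual CUDA behavior that `CUDA_VISIBLE_DEVICES` filters and renumbers devices, so that `cuda:0` inside the process is the first entry of the list. The helper `logical_to_physical` is hypothetical and only handles plain integer indices, not GPU UUIDs.

```python
import os

# Must be set before CUDA is initialized; doing it before `import torch`
# is the safe habit.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"


def logical_to_physical(visible=None):
    """Map logical indices (what PyTorch calls cuda:N) to physical GPU indices.

    Only meaningful when the variable holds a comma-separated list of
    integers; an empty result means the variable is unset or empty.
    """
    if visible is None:
        visible = os.environ.get("CUDA_VISIBLE_DEVICES", "")
    ids = [int(token) for token in visible.split(",") if token.strip()]
    return dict(enumerate(ids))


print(logical_to_physical())       # {0: 0}
print(logical_to_physical("2,0"))  # {0: 2, 1: 0}
```

With the setting above, `torch.cuda.device_count()` should report 1 once PyTorch is imported, and `cuda:0` is the only valid device. One caveat I am fairly sure of: CUDA's default device ordering is not necessarily the PCI bus order that `nvidia-smi` prints, so setting `CUDA_DEVICE_ORDER=PCI_BUS_ID` as well may be needed for the indices to line up.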
