Error Messages:

Failed to monitor Job[-1] with exception 'java.lang.IllegalStateException(Connection to remote Spark driver was lost)' Last known state = SENT

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Unable to send message SyncJobRequest{job=org.apache.hadoop.hive.ql.exec.spark.status.impl.RemoteSparkJobStatus$GetAppIDJob@7805478c} because the Remote Spark Driver - HiveServer2 connection has been closed.

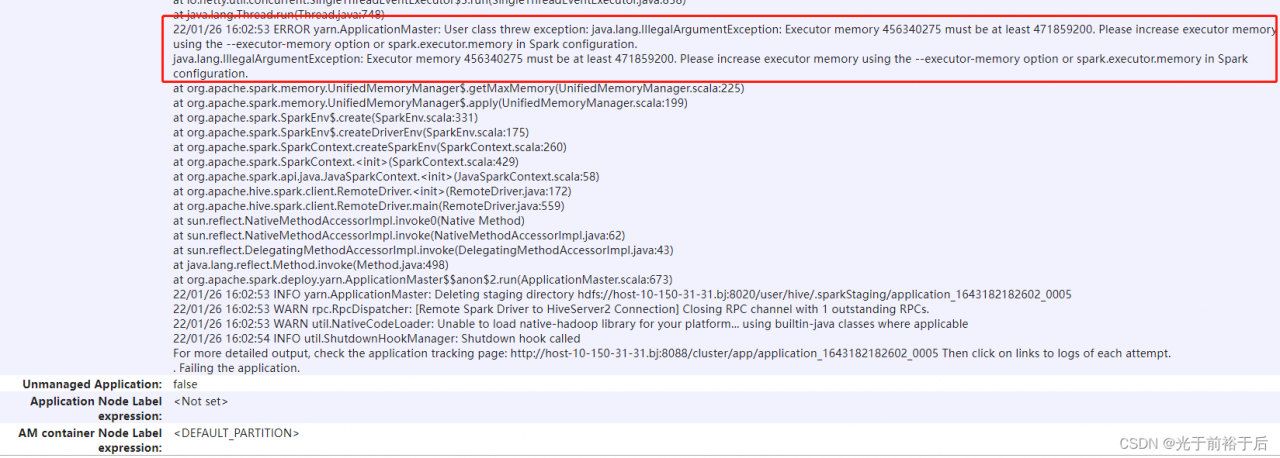

These messages do not reveal the real cause. To find it, go to YARN and look at the application's detailed logs:

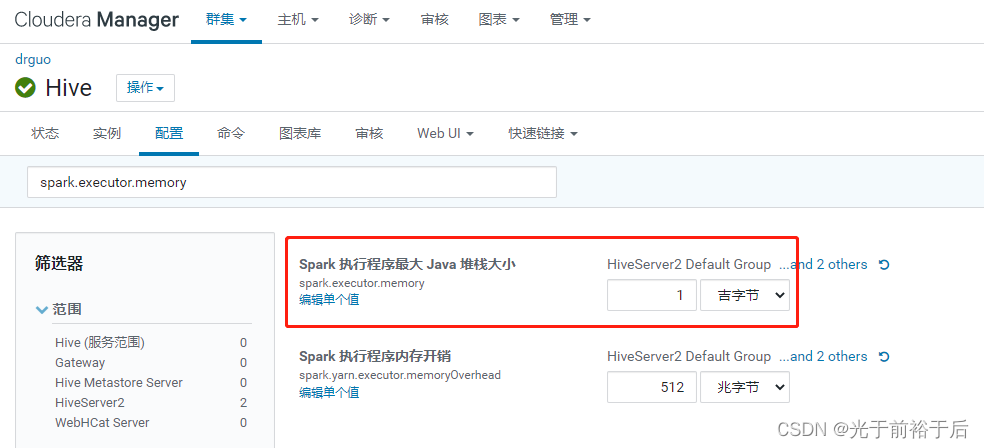
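If the application log is long, a small script can help locate the relevant lines. The following is only a minimal sketch: the function name `find_memory_errors` and the pattern list are assumptions for illustration, based on messages commonly seen when a container or JVM runs out of memory. The actual log shown in the screenshot may contain different text.

```python
import re

# Assumed failure signatures (not taken from the original log);
# extend this list to match what your cluster actually prints.
MEMORY_PATTERNS = [
    re.compile(r"java\.lang\.OutOfMemoryError"),
    re.compile(r"running beyond physical memory limits"),
    re.compile(r"Container killed by YARN for exceeding memory limits"),
]


def find_memory_errors(log_text):
    """Return (line_number, line) pairs that match any memory-related pattern."""
    hits = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        if any(pattern.search(line) for pattern in MEMORY_PATTERNS):
            hits.append((number, line.strip()))
    return hits
```

One way to use it is to save the aggregated log with `yarn logs -applicationId <application_id> > app.log`, read the file into a string, and pass it to `find_memory_errors`. An empty result does not rule out a memory problem; it only means none of the assumed patterns appeared.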

It turns out that the executor memory (`spark.executor.memory`) is set too low and needs to be increased on the Hive configuration page.

Save the changes and restart the relevant components. After that, the problem is solved.