```
21/11/08 12:13:10 ERROR tool.ImportTool: Import failed: java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.
	at org.apache.hadoop.mapreduce.Cluster.initialize(Cluster.java:143)
	at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:108)
	at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:101)
	at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1311)
	at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1307)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1844)
	at org.apache.hadoop.mapreduce.Job.connect(Job.java:1306)
	at org.apache.hadoop.mapreduce.Job.submit(Job.java:1335)
	at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1359)
	at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:200)
	at org.apache.sqoop.mapreduce.ImportJobBase.runJob(ImportJobBase.java:173)
	at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:270)
	at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:692)
	at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:127)
	at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:520)
	at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)
	at org.apache.sqoop.Sqoop.run(Sqoop.java:147)
	at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
	at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)
	at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)
	at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)
	at org.apache.sqoop.Sqoop.main(Sqoop.java:252)
```

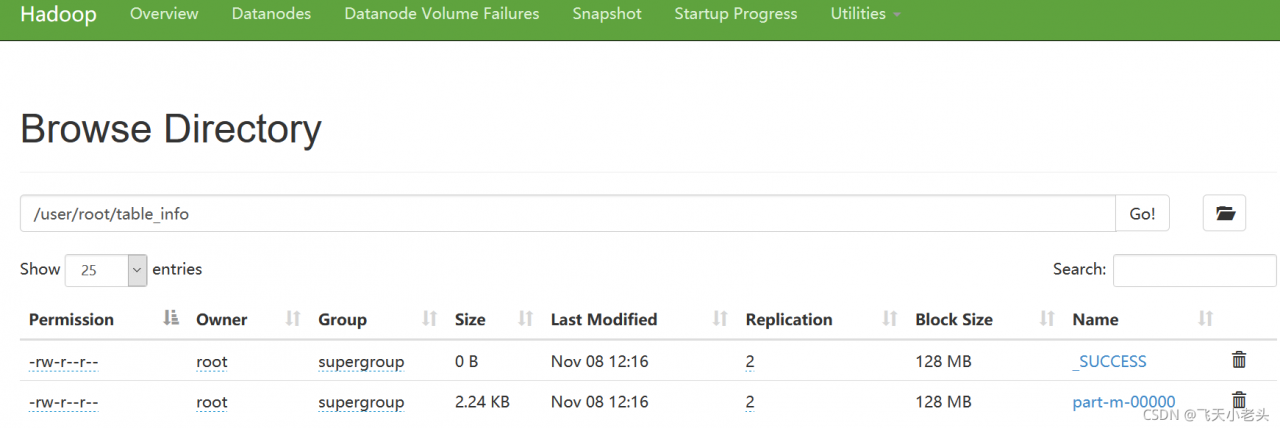

The error above occurs when running a Sqoop script that imports MySQL data into HDFS. The cause turned out to be missing dependencies. Copying only the two jars hadoop-mapreduce-client-common-2.8.5.jar and hadoop-mapreduce-client-core-2.8.5.jar into Sqoop's lib directory was not enough; the import still failed. Copying every jar in Hadoop's hadoop-2.8.5/share/hadoop/mapreduce directory into Sqoop's lib directory solved the problem, and the script then ran successfully. It is a crude, brute-force fix, but it works.
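If you need to repeat this brute-force fix on several machines, it can be scripted. Below is a minimal Python sketch, assuming the Hadoop 2.x layout `<HADOOP_HOME>/share/hadoop/mapreduce` and a Sqoop install whose jars live in `<SQOOP_HOME>/lib`; the function name `copy_mapreduce_jars` and any paths you pass in are hypothetical. It copies only the top-level `*.jar` files of that directory (not jars in any subdirectories) and leaves jars that already exist in Sqoop's `lib` untouched.

```python
import shutil
from pathlib import Path


def copy_mapreduce_jars(hadoop_home, sqoop_home):
    """Copy every top-level *.jar from Hadoop's MapReduce share directory
    into Sqoop's lib directory, skipping jars that are already present.

    Returns the sorted list of jar file names that were copied.
    """
    # Assumed layout (Hadoop 2.x): <HADOOP_HOME>/share/hadoop/mapreduce/*.jar
    src_dir = Path(hadoop_home) / "share" / "hadoop" / "mapreduce"
    # Assumed layout: <SQOOP_HOME>/lib holds the jars on Sqoop's classpath
    dst_dir = Path(sqoop_home) / "lib"
    if not src_dir.is_dir():
        raise FileNotFoundError(f"MapReduce jar directory not found: {src_dir}")
    dst_dir.mkdir(parents=True, exist_ok=True)

    copied = []
    # glob("*.jar") is non-recursive, so jars in subdirectories are ignored
    for jar in sorted(src_dir.glob("*.jar")):
        if not jar.is_file():
            continue
        target = dst_dir / jar.name
        if target.exists():
            # Do not overwrite a jar Sqoop already has
            continue
        shutil.copy2(jar, target)  # copy2 also preserves timestamps
        copied.append(jar.name)
    return copied
```

Usage would look like `copy_mapreduce_jars("/path/to/hadoop-2.8.5", "/path/to/sqoop")`, with both paths replaced by your own installation directories. Skipping existing jars is a deliberate choice: it avoids silently replacing versions Sqoop was shipped with.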