@To live better

Pitfall: Sqoop reports "tool.ExportTool: Error during export"

The error printed on the console is:

19/04/19 20:17:09 ERROR mapreduce.ExportJobBase: Export job failed!
19/04/19 20:17:09 ERROR tool.ExportTool: Error during export:
Export job failed!
    at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445)
    at org.apache.sqoop.manager.MySQLManager.upsertTable(MySQLManager.java:145)
    at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:73)
    at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99)
    at org.apache.sqoop.Sqoop.run(Sqoop.java:147)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
    at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)
    at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Key point: this error usually means that the fields of the MySQL and Hive tables do not correspond, or that the data formats differ.

Solution:

Step 1: Check whether the structure (field names and data types) of the MySQL and Hive tables is consistent.
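A quick way to compare the two column lists is to normalise them (lower-case, no spaces) before comparing, since Hive stores column names in lower case. The helper `same_columns` below is hypothetical; the lists themselves would come from the table definitions (for example `DESCRIBE` in MySQL and in Hive). Order is checked too, on the assumption that an export maps the fields of each line to the table columns (or to `--columns`) by position.

```shell
#!/bin/sh
# Hypothetical helper: compare two comma-separated column lists,
# ignoring case and spaces. Order is significant on purpose.
normalize_columns() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]' | tr -d ' '
}

same_columns() {
  if [ "$(normalize_columns "$1")" = "$(normalize_columns "$2")" ]; then
    echo "match"
  else
    echo "mismatch: MySQL=[$1] Hive=[$2]"
    return 1
  fi
}

# Example: column lists copied from the MySQL and Hive table definitions
same_columns "name,age" "NAME, age"
```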

Step 2: Check whether any data has been imported into the target table. If no data was imported, change the time-type columns (such as date, datetime or timestamp) in the MySQL and Hive tables from Step 1 to string or varchar. If data was imported but is incomplete or incorrect, the declared data types do not match the actual data. The following three steps cover the common causes.
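When data did arrive but looks wrong, one thing worth checking is whether the values in a time column really have the shape MySQL expects. The sketch below counts lines in a Hive text file whose Nth field is neither `\N` (Hive's NULL marker) nor a value starting with `yyyy-mm-dd hh:mm:ss`. The function name, the tab separator in the example and the strict format are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical check: count rows whose field number $3 (1-based) is neither
# Hive's NULL marker \N nor a value starting with "yyyy-mm-dd hh:mm:ss".
# $1 = data file, $2 = field separator as an awk escape (e.g. '\t' or '\001')
count_bad_datetimes() {
  awk -F "$2" -v col="$3" '
    $col != "\\N" && $col !~ /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] [0-9][0-9]:[0-9][0-9]:[0-9][0-9]/ { bad++ }
    END { print bad + 0 }
  ' "$1"
}

# Tab-separated sample: only the "2019/04/19" row is counted
sample=$(mktemp)
printf 'a\t2019-04-19 20:17:09\nb\t2019/04/19\nc\t\\N\n' > "$sample"
count_bad_datetimes "$sample" '\t' 2
rm -f "$sample"
```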

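Step 3: When going from MySQL to Hive, be sure to check whether your data contains line breaks, which Hive uses as the default line delimiter for the tables it creates. If it does, replace them during the import with --hive-delims-replacement, as in the script below.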

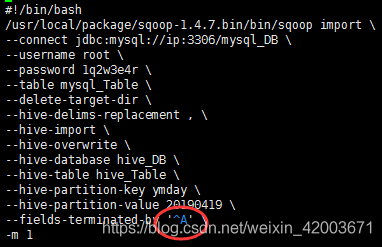

#!/bin/bash
/usr/local/package/sqoop-1.4.7.bin/bin/sqoop import \
--connect jdbc:mysql://ip:3306/mysql_DB \
--username root \
--password 1q2w3e4r \
--table mysql_Table \
--delete-target-dir \
--hive-delims-replacement , \
--hive-import \
--hive-overwrite \
--hive-database hive_DB \
--hive-table hive_Table \
--hive-partition-key ymday \
--hive-partition-value 20190419 \
--fields-terminated-by '\t' \
-m 1
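According to the Sqoop user guide, --hive-delims-replacement replaces \n, \r and \01 inside string fields with the given string (and --hive-drop-import-delims drops them instead). The pipeline below only imitates that effect on a single value, to show why it matters: without it, an embedded line break would split one MySQL row into two Hive rows. It is a single-character sketch built on `tr`, not Sqoop's own implementation, and `replace_hive_delims` is a hypothetical name.

```shell
#!/bin/sh
# Imitates the effect of --hive-delims-replacement on one value:
# every \n, \r and \001 (^A) becomes the single replacement character $1.
replace_hive_delims() {
  r=$1
  tr '\n\r\001' "$r$r$r"
}

# A value containing a line break, a carriage return and a ^A
printf 'line1\nline2\rpart\001end' | replace_hive_delims ','
echo
```

This prints `line1,line2,part,end`, i.e. the value stays on one line and in one field.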

Step 4: When going from MySQL to Hive, be sure to check whether your data contains the field separator used when creating the Hive table (for example the '\t' in the script above, or Hive's default ^A, i.e. \001).
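If a value contains the field separator, that row ends up with more fields than the table has columns. A rough check on the data file, assuming you know the expected column count, is to count lines whose field count differs; this also catches rows cut in two by embedded line breaks. `count_bad_rows` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: count lines whose number of fields differs from
# the expected column count.
# $1 = data file, $2 = separator as an awk escape ('\t', '\001', ...),
# $3 = expected number of columns
count_bad_rows() {
  awk -F "$2" -v n="$3" 'NF != n { bad++ } END { print bad + 0 }' "$1"
}

sample=$(mktemp)
# Second row has an extra tab inside a value; third row was cut short
printf 'tom\t20\njerry\t2\t1\nspike\n' > "$sample"
count_bad_rows "$sample" '\t' 2   # prints 2
rm -f "$sample"
```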

Step 5: When going from Hive to MySQL, if the data has many empty (NULL) columns, the error above is sometimes reported. In that case, add --input-null-string and --input-null-non-string to the Sqoop statement, as in the code below.

Remember: the ^A must be typed in Linux vi by pressing Ctrl + V and then Ctrl + A. You cannot copy the ^A in the script below, because as text it is just the two characters ^ and A.
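If typing the control character in vi is inconvenient, the shell can produce the same byte with `printf '\001'`. The sketch below stores it in a variable (the name `SEP` is an assumption) and dumps it with `od` to confirm it is the single byte 0x01 rather than the two characters `^` and `A`; the variable could then be passed as `--input-fields-terminated-by "$SEP"`. The Sqoop user guide also describes octal escapes such as `\001` for delimiter arguments, which avoids the literal control character altogether; it is worth verifying that against your Sqoop version.

```shell
#!/bin/sh
# Build the ^A (0x01) separator without typing Ctrl+V Ctrl+A in vi
SEP=$(printf '\001')

# Confirm it is one byte with value 01, not the two characters '^' and 'A'
printf '%s' "$SEP" | od -An -tx1
```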

#!/bin/bash
/usr/local/package/sqoop-1.4.7.bin/bin/sqoop export \
--connect "jdbc:mysql://ip:3306/report_db?useUnicode=true&characterEncoding=utf-8" \
--username root \
--password 1q2w3e4r \
--table mysql_table \
--columns name,age \
--update-key name \
--update-mode allowinsert \
--input-null-string '\\N' \
--input-null-non-string '\\N' \
--export-dir "/hive/warehouse/dw_db.db/hive_table/ymday=20190419/*" \
--input-fields-terminated-by '^A' \
-m 1
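Hive's text tables write NULL as `\N` by default, which is why the export maps `\N` back to NULL with --input-null-string and --input-null-non-string. To get a feel for how many such values a partition file holds, a rough count could look like this; `count_null_fields` is a hypothetical helper, and its default separator `'\001'` matches the ^A used above.

```shell
#!/bin/sh
# Hypothetical helper: count fields equal to Hive's NULL marker \N.
# $1 = data file, $2 = separator as an awk escape (default '\001', i.e. ^A)
count_null_fields() {
  awk -F "${2:-\\001}" '
    { for (i = 1; i <= NF; i++) if ($i == "\\N") n++ }
    END { print n + 0 }
  ' "$1"
}

# ^A-separated sample with three \N values
sample=$(mktemp)
printf 'tom\00120\njerry\001\\N\n\\N\001\\N\n' > "$sample"
count_null_fields "$sample"   # prints 3
rm -f "$sample"
```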


If you have any questions about this post, please leave a comment.