Problem description

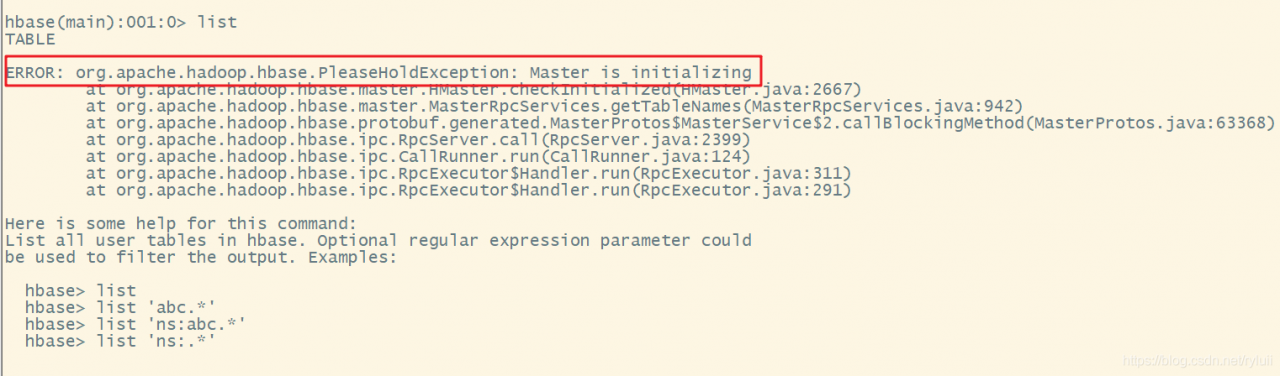

After I changed the HBase version, querying a table from the HBase shell reported the error shown in the screenshot.

How to solve it

This error is generally caused by a failed HRegionServer node.

1. First, run jps to check whether the HRegionServer process is running (most likely it has died), then look at the configuration file hbase-site.xml. This is where my problem was: I had changed the installed version and had not yet integrated it with Phoenix, so all the Phoenix mapping configuration in the file had to be commented out; otherwise HRegionServer would not start normally.

2. The following is a correct configuration file:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
/**
 *
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
-->
<configuration>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>/export/data/hbase/tmp</value>
    <!-- This temporary directory should be created in the HBase installation directory -->
  </property>
  <property>
    <name>hbase.master</name>
    <value>node1:16010</value>
  </property>
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://node1:9000/hbase</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>node1,node2,node3:2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/export/servers/zookeeper/data</value>
  </property>
</configuration>
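As a quick way to carry out the check from step 1, the sketch below lists every line of a configuration file that mentions Phoenix, so you can see what still needs to be commented out. The function name find_phoenix_entries and the default path under /export/servers/hbase are assumptions for illustration, not part of the original setup; point CONF at your own hbase-site.xml.

```shell
#!/usr/bin/env bash
# List lines in an HBase config file that mention Phoenix (case-insensitive).
# Prints matching lines prefixed with their line numbers.
# Returns non-zero when nothing matches.
find_phoenix_entries() {
  local conf_file="$1"
  grep -in 'phoenix' "$conf_file"
}

# Hypothetical location (assumption); adjust to your installation.
CONF="${HBASE_HOME:-/export/servers/hbase}/conf/hbase-site.xml"

if [ -f "$CONF" ] && find_phoenix_entries "$CONF"; then
  echo "Phoenix-related entries found; comment them out before starting HBase."
fi
```

Note that grep also matches lines that are already inside an XML comment, so treat the output as a list of places to inspect rather than a definitive verdict.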

3. Stop HBase, delete the /hbase directory on HDFS (this removes all data stored in HBase), and restart HBase:

-- Stop HBase

stop-hbase.sh

-- Delete /hbase on HDFS

hadoop fs -rm -r /hbase

-- Start HBase

start-hbase.sh

Note: if you hit this kind of error, first check that the configuration file is correct, then restart HBase. The restart requires deleting /hbase on HDFS, so follow step 3 again.
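After the restart, it is worth repeating the jps check from step 1 on each RegionServer node. A minimal sketch follows; the helper name has_java_process is hypothetical, and it assumes the usual jps output of one "PID ClassName" pair per line.

```shell
#!/usr/bin/env bash
# Succeeds if the jps-style listing read from stdin contains a process
# whose name (second column) is exactly the first argument.
has_java_process() {
  local name="$1"
  awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'
}

# Only run the live check when jps is available on this machine.
if command -v jps >/dev/null 2>&1; then
  if jps | has_java_process HRegionServer; then
    echo "HRegionServer is running"
  else
    echo "HRegionServer is NOT running; check hbase-site.xml and the HBase logs"
  fi
fi
```

Matching on the exact process name keeps similarly named processes from producing a false positive.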