Problem description

RuntimeError: CUDA out of memory. Tried to allocate 1.26 GiB (GPU 0; 6.00 GiB total capacity; 557.81 MiB already allocated; 2.74 GiB free; 1.36 GiB reserved in total by PyTorch)

Solution:

The GPU does not have enough free memory for the requested allocation.

The first thing to try is reducing the batch size.
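One way to find a batch size that fits is to retry with a smaller batch whenever the out-of-memory error appears. The following is a minimal sketch, not a definitive implementation: `run_with_smaller_batches` and `step_fn` are hypothetical names introduced here, and `step_fn` is assumed to be your own function that runs one training or inference step at the given batch size. It relies only on PyTorch reporting CUDA out-of-memory as a `RuntimeError` whose message contains "out of memory".

```python
def run_with_smaller_batches(step_fn, batch_size, min_batch_size=1):
    """Call step_fn(batch_size), halving batch_size after each OOM error.

    step_fn is a hypothetical user-supplied callable (an assumption of
    this sketch). Returns (result, batch_size_that_worked).
    """
    while True:
        try:
            return step_fn(batch_size), batch_size
        except RuntimeError as err:
            # Re-raise anything that is not an out-of-memory error, and
            # give up once the batch cannot be made any smaller.
            is_oom = "out of memory" in str(err).lower()
            if not is_oom or batch_size <= min_batch_size:
                raise
        # Reached only after an OOM error: retry with half the batch.
        # In real PyTorch code you may also want to call
        # torch.cuda.empty_cache() here before retrying.
        batch_size = max(min_batch_size, batch_size // 2)
```

For example, if a step only fits at a batch size of 16 or less, calling `run_with_smaller_batches(step_fn, 64)` tries 64, then 32, then succeeds at 16.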

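If the same code normally runs fine at this batch size, another process is probably occupying GPU memory. In that case, try one of the following: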

1. Restart the computer

Restarting the computer ends any processes that are occupying the GPU and frees its memory.

2. Kill the process

input

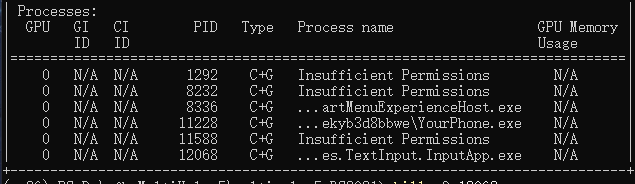

nvidia-smi

View the process

and enter

kill -9 PID
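The same lookup can be scripted. The sketch below is an illustration under stated assumptions: it assumes `nvidia-smi` is on the PATH, and the helper names `parse_compute_apps` and `list_gpu_processes` are hypothetical. It uses the `--query-compute-apps` and `--format=csv,noheader,nounits` options of `nvidia-smi` to list each compute process with its PID, name, and used GPU memory in MiB.

```python
import subprocess

# Ask nvidia-smi for one CSV row per compute process.
QUERY = [
    "nvidia-smi",
    "--query-compute-apps=pid,process_name,used_memory",
    "--format=csv,noheader,nounits",
]


def parse_compute_apps(csv_text):
    """Parse nvidia-smi CSV rows into (pid, name, used_mib) tuples."""
    rows = []
    for line in csv_text.splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) < 3 or not parts[0].isdigit():
            continue  # skip blank or malformed rows
        # The name is everything between the first and last field.
        name = ",".join(parts[1:-1])
        mem = parts[-1]
        # Memory may be reported as "[N/A]" on some systems.
        used_mib = int(mem) if mem.isdigit() else None
        rows.append((int(parts[0]), name, used_mib))
    return rows


def list_gpu_processes():
    """Run nvidia-smi and return the parsed process list."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)
```

Calling `list_gpu_processes()` returns the PIDs you would otherwise read off the `nvidia-smi` table. On Linux, `os.kill(pid, signal.SIGKILL)` is the Python equivalent of `kill -9 PID`; check that the PID belongs to a process you really want to end before using it.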