I recently got started with MapReduce programming for big data.

I was running the following hadoop-streaming command, and the damn thing kept throwing this error:

hadoop jar /opt/hadoop-2.7.3/share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar -input /ncdc -output /ncdc_out -mapper max_temp_map.py -reducer max_temp_reduce.py -file max_temp_map.py -file max_temp_reduce.py

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127
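Exit code 127 is the conventional "command not found" status of POSIX shells, and /usr/bin/env returns the same code when it cannot find the program it was asked to run. For a streaming task, that usually points at the interpreter named in the mapper's shebang line. Two examples are #!/usr/bin/env python on a node that has no python on its PATH, and a shebang that ends in a stray \r because the script has CRLF line endings. The sketch below reproduces both cases locally. It assumes a POSIX system with sh and /usr/bin/env, and env_exit_code is a hypothetical helper that only roughly mimics what the kernel does with a shebang line.

```python
import shutil
import subprocess

# Assumption: a POSIX system with /bin/sh and /usr/bin/env available.
ENV_PREFIX = "#!/usr/bin/env "


def env_exit_code(first_line: str) -> int:
    """Roughly mimic running a script whose first line is '#!/usr/bin/env <interp>'.

    The kernel hands everything after 'env ' (minus the newline, but including
    any trailing '\\r') to env as the program name. stdin is empty, so a real
    interpreter starts, reads nothing and exits 0.
    """
    if not first_line.startswith(ENV_PREFIX):
        raise ValueError("expected a '#!/usr/bin/env ...' shebang line")
    interpreter = first_line[len(ENV_PREFIX):].rstrip("\n")  # keeps any '\r'
    result = subprocess.run(
        ["/usr/bin/env", interpreter],
        stdin=subprocess.DEVNULL,
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode


# A command the shell cannot find exits with 127.
print(subprocess.run(["sh", "-c", "no_such_command_xyz"], stderr=subprocess.DEVNULL).returncode)

# CRLF shebang: env looks for a program literally named "python3\r" and exits 127.
print(env_exit_code("#!/usr/bin/env python3\r\n"))

# An interpreter that is simply not installed gives the same 127.
print(env_exit_code("#!/usr/bin/env no_such_python\n"))

# With LF endings and python3 on PATH, the interpreter starts normally.
if shutil.which("python3"):
    print(env_exit_code("#!/usr/bin/env python3\n"))
```

On a real cluster, running the mapper by hand on each worker node, with a few input lines piped into it, is a quick way to see which node cannot start it.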

It took me nearly two full days (just two hours short) to solve this problem.

During that time I searched all over Baidu and Google, and the suggested fixes all came down to the same thing: CRLF versus LF line endings, i.e. a file-encoding problem. For me, though, the solution was the following.
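For reference, the CRLF fix that most search results suggest amounts to rewriting the mapper and reducer scripts with LF line endings, which is what dos2unix does. A minimal Python sketch of that conversion; the helper names and the fix_crlf.py script name are hypothetical.

```python
import sys
from pathlib import Path


def has_crlf(data: bytes) -> bool:
    """True if the bytes contain any Windows-style CRLF line ending."""
    return b"\r\n" in data


def crlf_to_lf(data: bytes) -> bytes:
    """Replace every CRLF with a bare LF."""
    return data.replace(b"\r\n", b"\n")


def fix_file(path: Path) -> bool:
    """Rewrite `path` in place with LF endings; return True if anything changed."""
    data = path.read_bytes()
    if not has_crlf(data):
        return False
    path.write_bytes(crlf_to_lf(data))
    return True


if __name__ == "__main__":
    # Usage: python fix_crlf.py max_temp_map.py max_temp_reduce.py
    for name in sys.argv[1:]:
        changed = fix_file(Path(name))
        print(f"{name}: {'converted to LF' if changed else 'already LF'}")
```

The file is read and written as raw bytes so the script's text encoding is left untouched; only the line endings change.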

Solution:

The number of live DataNodes needs to be at least 2 (this is just my guess).
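One way to check how many DataNodes are actually live is hdfs dfsadmin -report. A minimal sketch that runs it and pulls out the count. It assumes the report contains a line of the form "Live datanodes (N):", as Hadoop 2.x prints it; treat that exact wording as an assumption, and the helper names as hypothetical.

```python
import re
import shutil
import subprocess

# Assumption: the report contains a line like "Live datanodes (2):".
LIVE_RE = re.compile(r"Live datanodes \((\d+)\)")


def parse_live_datanodes(report: str) -> int:
    """Extract N from a 'Live datanodes (N):' line; return -1 if it is absent."""
    match = LIVE_RE.search(report)
    return int(match.group(1)) if match else -1


def live_datanodes() -> int:
    """Run `hdfs dfsadmin -report` and return the live DataNode count."""
    report = subprocess.run(
        ["hdfs", "dfsadmin", "-report"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_live_datanodes(report)


if __name__ == "__main__":
    if shutil.which("hdfs"):
        print("live DataNodes:", live_datanodes())
    else:
        print("hdfs is not on PATH; run this on a cluster node")
```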

Go into the Hadoop directory and find the slaves file (in Hadoop 2.x it is etc/hadoop/slaves).

Mine listed only one host, slave1, so I simply added master (the hostname of my master node) as well.
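The edit itself is one extra line in slaves, which lists one hostname per line. A minimal sketch, assuming the file lives at etc/hadoop/slaves under the install directory seen in the command above (/opt/hadoop-2.7.3); add_worker and with_worker are hypothetical helpers. Note that start-dfs.sh and start-yarn.sh both read this file, so listing master there starts a DataNode and a NodeManager on the master too; HDFS and YARN need a restart afterwards.

```python
import sys
from pathlib import Path

# Assumption: install directory taken from the jar path in the original command.
SLAVES = Path("/opt/hadoop-2.7.3/etc/hadoop/slaves")


def with_worker(contents: str, hostname: str) -> str:
    """Return slaves-file contents with `hostname` listed exactly once."""
    hosts = [line.strip() for line in contents.splitlines() if line.strip()]
    if hostname not in hosts:
        hosts.append(hostname)
    return "\n".join(hosts) + "\n"


def add_worker(hostname: str, slaves: Path = SLAVES) -> None:
    """Add `hostname` to the slaves file in place (safe to run twice)."""
    slaves.write_text(with_worker(slaves.read_text(), hostname))


if __name__ == "__main__":
    # Usage: python add_worker.py master
    if len(sys.argv) == 2 and SLAVES.exists():
        add_worker(sys.argv[1])
    print(SLAVES.read_text() if SLAVES.exists() else f"{SLAVES} not found")
```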

Time for a little rant: this honestly made me want to die. I had deleted my snapshots too hastily, so I ended up reinstalling everything from scratch twice. To tell the truth, I completely fell apart.

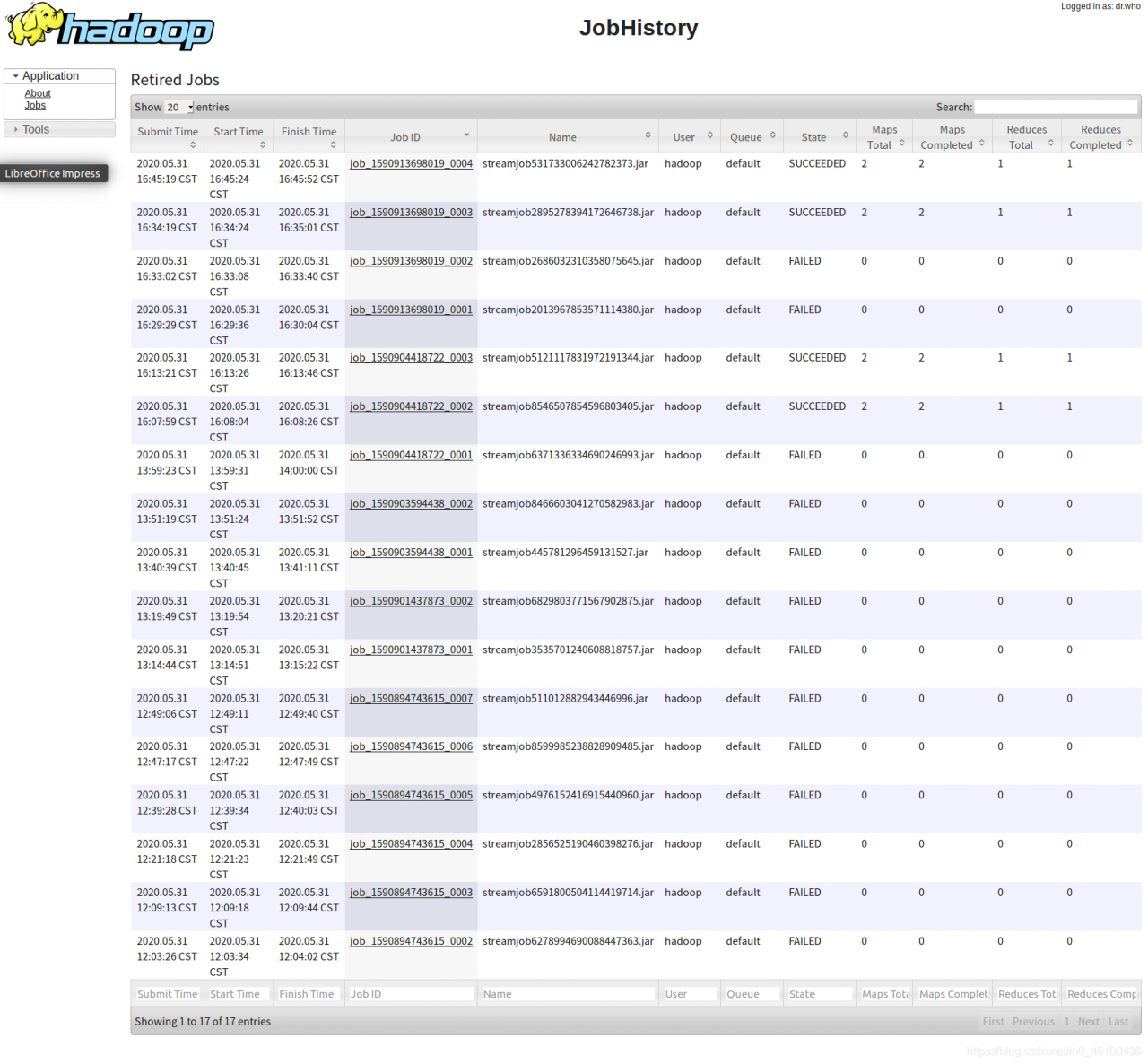

Below is the full error output, if you want to take a look:

root@master:/usr/bin# hadoop jar /opt/hadoop-2.7.3/share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar -input /ncdc -output /ncdc_out -mapper max_temp_map.py -reducer max_temp_reduce.py -file max_temp_map.py -file max_temp_reduce.py

20/05/30 14:21:17 WARN streaming.StreamJob: -file option is deprecated, please use generic option -files instead.

packageJobJar: [max_temp_map.py, max_temp_reduce.py, /tmp/hadoop-unjar5025674109683727172/] [] /tmp/streamjob1773442556914840065.jar tmpDir=null

20/05/30 14:21:18 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.150.131:8032

20/05/30 14:21:18 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.150.131:8032

20/05/30 14:21:19 WARN hdfs.DFSClient: Caught exception

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at java.lang.Thread.join(Thread.java:1252)

at java.lang.Thread.join(Thread.java:1326)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:609)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:370)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:546)

20/05/30 14:21:19 INFO mapred.FileInputFormat: Total input paths to process : 1

20/05/30 14:21:19 WARN hdfs.DFSClient: Caught exception

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at java.lang.Thread.join(Thread.java:1252)

at java.lang.Thread.join(Thread.java:1326)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:609)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:370)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:546)

20/05/30 14:21:19 INFO mapreduce.JobSubmitter: number of splits:2

20/05/30 14:21:19 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1590817515448_0008

20/05/30 14:21:19 INFO impl.YarnClientImpl: Submitted application application_1590817515448_0008

20/05/30 14:21:19 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1590817515448_0008/

20/05/30 14:21:19 INFO mapreduce.Job: Running job: job_1590817515448_0008

20/05/30 14:21:27 INFO mapreduce.Job: Job job_1590817515448_0008 running in uber mode : false

20/05/30 14:21:27 INFO mapreduce.Job: map 0% reduce 0%

20/05/30 14:21:33 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000001_0, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

20/05/30 14:21:33 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

20/05/30 14:21:39 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000001_1, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

20/05/30 14:21:40 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000000_1, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

20/05/30 14:21:46 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000001_2, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

20/05/30 14:21:47 INFO mapreduce.Job: Task Id : attempt_1590817515448_0008_m_000000_2, Status : FAILED

Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127

at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:322)

at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:535)

at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)

at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

20/05/30 14:21:54 INFO mapreduce.Job: map 100% reduce 100%

20/05/30 14:21:54 INFO mapreduce.Job: Job job_1590817515448_0008 failed with state FAILED due to: Task failed task_1590817515448_0008_m_000001

Job failed as tasks failed. failedMaps:1 failedReduces:0

20/05/30 14:21:54 INFO mapreduce.Job: Counters: 17

Job Counters

Failed map tasks=7

Killed map tasks=1

Killed reduce tasks=1

Launched map tasks=8

Other local map tasks=6

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=36658

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=36658

Total time spent by all reduce tasks (ms)=0

Total vcore-milliseconds taken by all map tasks=36658

Total vcore-milliseconds taken by all reduce tasks=0

Total megabyte-milliseconds taken by all map tasks=37537792

Total megabyte-milliseconds taken by all reduce tasks=0

Map-Reduce Framework

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

20/05/30 14:21:54 ERROR streaming.StreamJob: Job not successful!

Streaming Command Failed!

root@master:/usr/bin#
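The first WARN line in the log says -file is deprecated in favor of the generic -files option. This is not what fixed the 127 error here, but a minimal sketch of submitting the same job that way from Python follows. The jar and HDFS paths are copied from the command above, and build_streaming_cmd is a hypothetical helper. Generic options such as -files have to come before the streaming options (-input, -output, -mapper, -reducer).

```python
import shlex
import shutil
import subprocess
import sys

# Paths copied from the original command; adjust for your own cluster.
STREAMING_JAR = "/opt/hadoop-2.7.3/share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar"


def build_streaming_cmd(mapper: str, reducer: str, input_path: str, output_path: str):
    """Build the argv list for a streaming job.

    The generic option (-files) is placed before the streaming options.
    """
    return [
        "hadoop", "jar", STREAMING_JAR,
        "-files", f"{mapper},{reducer}",  # ship both scripts with the job
        "-input", input_path,
        "-output", output_path,
        "-mapper", mapper,
        "-reducer", reducer,
    ]


if __name__ == "__main__":
    cmd = build_streaming_cmd("max_temp_map.py", "max_temp_reduce.py", "/ncdc", "/ncdc_out")
    print(shlex.join(cmd))
    # Only submit when explicitly asked and a hadoop client is available.
    if "--submit" in sys.argv and shutil.which("hadoop"):
        subprocess.run(cmd, check=True)
```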