First, set up a Hadoop server.

For the setup steps, refer to: Hadoop 3.2.0 fully distributed cluster building and wordcount running

Create a new Maven project and add the Hadoop dependencies to the `<dependencies>` section of `pom.xml`:

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>3.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>3.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>3.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-core</artifactId>
    <version>3.2.0</version>
</dependency>
<dependency>
    <groupId>commons-logging</groupId>
    <artifactId>commons-logging</artifactId>
    <version>1.2</version>
</dependency>
```

Write the HDFS tool class:

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.net.URI;

/**

* @author LionLi

* @date 2019-2-7

*/

public class HDFSUtils {

/** hdfs Server Address */

private static final String hdfsUrl = "hdfs://192.168.0.200:8020/";

/** Access users */

private static final String username = "root";

public static void main(String[] args) throws Exception {

String filePath = "/user/root/demo.txt";

StringBuilder sb = new StringBuilder();

for (int i = 1; i < 100001; i++) {

sb.append("hadoop demo " + i + "\r\n");

}

HDFSCreateAndWriteFileValue(hdfsUrl,username, filePath,sb.toString());

HDFSReadFileValue(hdfsUrl,username, filePath);

}

/**

* Read the contents of the file

* @param url server address

* @param user access user

* @param filePath file path

*/

private static void HDFSReadFileValue(

String url,String user, String filePath) throws Exception {

Configuration conf = new Configuration();

try (FileSystem fileSystem = FileSystem.get(new URI(url), conf, user)) {

Path demo = new Path(filePath);

if (fileSystem.exists(demo)) {

System.out.println("/user/root/demo.txt file exist");

FSDataInputStream fsdis = fileSystem.open(demo);

byte[] bytes = new byte[fsdis.available()];

fsdis.readFully(bytes);

System.out.println(new String(bytes));

} else {

System.out.println("/user/root/demo.txt file not exist");

}

}

}

/**

* Create and write the contents of the file

* @param url server address

* @param user Access user

* @param filePath The path to the file to be created

* @param value The value to be written

*/

private static void HDFSCreateAndWriteFileValue(

String url,String user,String filePath,String value) throws Exception {

Configuration conf = new Configuration();

try (FileSystem fileSystem = FileSystem.get(new URI(url), conf, user)) {

Path demo = new Path(filePath);

if (fileSystem.exists(demo)) {

System.out.println("/user/root/demo.txt file exist");

if (fileSystem.delete(demo, false)) {

if (fileSystem.exists(demo)) {

System.out.println("/user/root/demo.txt failed to delete file");

} else {

System.out.println("/user/root/demo.txt success to delete file");

FSDataOutputStream fsdos = fileSystem.create(demo);

fsdos.write(value.getBytes());

}

}

} else {

System.out.println("/user/root/demo.txt file not exist");

FSDataOutputStream fsdos = fileSystem.create(demo);

fsdos.write(value.getBytes());

}

}

}

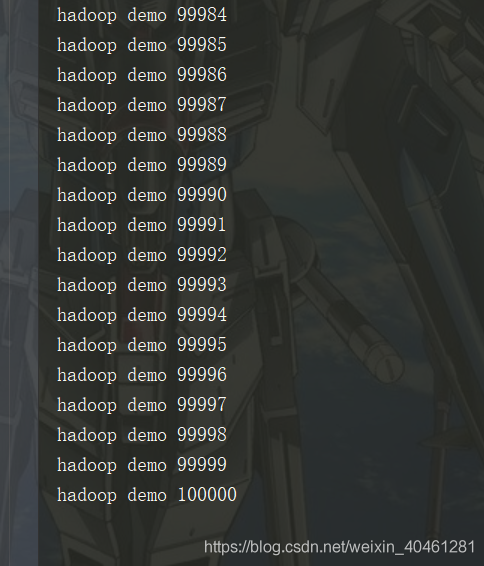

}test run

The test was successful
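`HDFSReadFileValue` loads the entire file into a single byte array, which holds all 100,000 lines in memory at once. For larger files, reading line by line is usually preferable. Because `FSDataInputStream` extends `java.io.InputStream`, the line-processing logic can be written against a plain `InputStream` and tried out locally without a cluster. The sketch below is an assumption, not part of the original tool class: the class `StreamingRead` and method `readLines` are hypothetical names, and `main` uses an in-memory stream as a stand-in for `fileSystem.open(demo)`.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical helper: streams a text file line by line instead of
 * loading it into one byte array. Works with any InputStream, including
 * the FSDataInputStream returned by FileSystem.open(Path).
 */
public class StreamingRead {

    /**
     * Reads every line, prints the first {@code previewLines} of them,
     * and returns the total number of lines read.
     * Closing the stream is left to the caller.
     */
    public static long readLines(InputStream in, int previewLines) throws IOException {
        BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        long count = 0;
        String line;
        // readLine() treats "\n", "\r" and "\r\n" as line terminators
        while ((line = reader.readLine()) != null) {
            if (count < previewLines) {
                System.out.println(line);
            }
            count++;
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        // Local stand-in for fileSystem.open(demo), using the same content as HDFSUtils
        StringBuilder sb = new StringBuilder();
        for (int i = 1; i < 100001; i++) {
            sb.append("hadoop demo ").append(i).append("\r\n");
        }
        InputStream in = new ByteArrayInputStream(sb.toString().getBytes(StandardCharsets.UTF_8));
        long total = readLines(in, 3);
        // Prints the first three lines, then "total lines: 100000"
        System.out.println("total lines: " + total);
    }
}
```

Against HDFS, the stream would come from the cluster instead, for example `try (FSDataInputStream in = fileSystem.open(demo)) { StreamingRead.readLines(in, 3); }`, so memory use stays bounded by the reader's buffer rather than the file size.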