Contents

Symptom: Docker does not start

Check the Docker status: failed

Start dockerd manually

Check daemon.json

Modify daemon.json

Start the daemon and Docker with systemctl

Why does "ipv6": true prevent Docker from starting normally?

Symptom: Docker does not start

Check whether the Elasticsearch service is running:

[root@warehouse00 ~]# docker ps|grep elastic

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

This indicates that Docker is not running.

Check the Docker status: failed

[root@warehouse00 ~]# service docker status

Redirecting to /bin/systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/etc/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: failed (Result: start-limit) since Mon 2020-06-15 06:30:16 UTC; 1 weeks 1 days ago

Docs: https://docs.docker.com

Process: 19410 ExecStart=/usr/bin/dockerd -H fd:// (code=exited, status=1/FAILURE)

Main PID: 19410 (code=exited, status=1/FAILURE)

Jun 15 06:30:16 warehouse00 systemd[1]: docker.service holdoff time over, scheduling restart.

Jun 15 06:30:16 warehouse00 systemd[1]: Stopped Docker Application Container Engine.

Jun 15 06:30:16 warehouse00 systemd[1]: start request repeated too quickly for docker.service

Jun 15 06:30:16 warehouse00 systemd[1]: Failed to start Docker Application Container Engine.

Jun 15 06:30:16 warehouse00 systemd[1]: Unit docker.service entered failed state.

Jun 15 06:30:16 warehouse00 systemd[1]: docker.service failed.

Jun 15 06:30:33 warehouse00 systemd[1]: start request repeated too quickly for docker.service

Jun 15 06:30:33 warehouse00 systemd[1]: Failed to start Docker Application Container Engine.

Jun 15 06:30:33 warehouse00 systemd[1]: docker.service failed.

Jun 23 07:45:16 warehouse00 systemd[1]: Unit docker.service cannot be reloaded because it is inactive.
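The status output above mostly shows systemd throttling the restarts ("start request repeated too quickly"), not the reason dockerd exited. A minimal sketch for digging further, assuming the unit logs are kept in journald; the function name docker_failure_report is hypothetical:

```shell
# Sketch: show why docker.service failed and clear systemd's failed state.
# Only the function is defined here; call it as root on the affected host.
docker_failure_report() {
    # Last 50 journal lines for the unit; dockerd's own error should be among them if it was logged.
    journalctl -u docker.service -n 50 --no-pager
    # Reset the unit's failed state (and, on recent systemd versions, its start-rate counter).
    systemctl reset-failed docker.service
}
```

Running docker_failure_report before the next start attempt gives a clean slate, so a later failure is not confused with the old start-limit state.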

Start dockerd manually

[root@warehouse00 ~]# dockerd

INFO[2020-06-23T07:46:26.609656620Z] Starting up

INFO[2020-06-23T07:46:26.615956282Z] libcontainerd: started new containerd process pid=1802

INFO[2020-06-23T07:46:26.616133833Z] parsed scheme: "unix" module=grpc

INFO[2020-06-23T07:46:26.616167280Z] scheme "unix" not registered, fallback to default scheme module=grpc

INFO[2020-06-23T07:46:26.616225318Z] ccResolverWrapper: sending update to cc: {[{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}] <nil>} module=grpc

INFO[2020-06-23T07:46:26.616255985Z] ClientConn switching balancer to "pick_first" module=grpc

INFO[2020-06-23T07:46:26.665833586Z] starting containerd revision=b34a5c8af56e510852c35414db4c1f4fa6172339 version=v1.2.10

INFO[2020-06-23T07:46:26.667139004Z] loading plugin "io.containerd.content.v1.content"... type=io.containerd.content.v1

INFO[2020-06-23T07:46:26.667283454Z] loading plugin "io.containerd.snapshotter.v1.btrfs"... type=io.containerd.snapshotter.v1

WARN[2020-06-23T07:46:26.667700679Z] failed to load plugin io.containerd.snapshotter.v1.btrfs error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.btrfs must be a btrfs filesystem to be used with the btrfs snapshotter"

INFO[2020-06-23T07:46:26.667751093Z] loading plugin "io.containerd.snapshotter.v1.aufs"... type=io.containerd.snapshotter.v1

WARN[2020-06-23T07:46:26.672276961Z] failed to load plugin io.containerd.snapshotter.v1.aufs error="modprobe aufs failed: "modprobe: FATAL: Module aufs not found.\n": exit status 1"

INFO[2020-06-23T07:46:26.672329642Z] loading plugin "io.containerd.snapshotter.v1.native"... type=io.containerd.snapshotter.v1

INFO[2020-06-23T07:46:26.672396890Z] loading plugin "io.containerd.snapshotter.v1.overlayfs"... type=io.containerd.snapshotter.v1

INFO[2020-06-23T07:46:26.672604691Z] loading plugin "io.containerd.snapshotter.v1.zfs"... type=io.containerd.snapshotter.v1

INFO[2020-06-23T07:46:26.673060327Z] skip loading plugin "io.containerd.snapshotter.v1.zfs"... type=io.containerd.snapshotter.v1

INFO[2020-06-23T07:46:26.673097387Z] loading plugin "io.containerd.metadata.v1.bolt"... type=io.containerd.metadata.v1

WARN[2020-06-23T07:46:26.673137831Z] could not use snapshotter btrfs in metadata plugin error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.btrfs must be a btrfs filesystem to be used with the btrfs snapshotter"

WARN[2020-06-23T07:46:26.673161222Z] could not use snapshotter aufs in metadata plugin error="modprobe aufs failed: "modprobe: FATAL: Module aufs not found.\n": exit status 1"

WARN[2020-06-23T07:46:26.673185176Z] could not use snapshotter zfs in metadata plugin error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.zfs must be a zfs filesystem to be used with the zfs snapshotter: skip plugin"

INFO[2020-06-23T07:46:26.673528926Z] loading plugin "io.containerd.differ.v1.walking"... type=io.containerd.differ.v1

INFO[2020-06-23T07:46:26.673583123Z] loading plugin "io.containerd.gc.v1.scheduler"... type=io.containerd.gc.v1

INFO[2020-06-23T07:46:26.673692715Z] loading plugin "io.containerd.service.v1.containers-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673736540Z] loading plugin "io.containerd.service.v1.content-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673769457Z] loading plugin "io.containerd.service.v1.diff-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673804631Z] loading plugin "io.containerd.service.v1.images-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673839842Z] loading plugin "io.containerd.service.v1.leases-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673903483Z] loading plugin "io.containerd.service.v1.namespaces-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673943174Z] loading plugin "io.containerd.service.v1.snapshots-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.673979791Z] loading plugin "io.containerd.runtime.v1.linux"... type=io.containerd.runtime.v1

INFO[2020-06-23T07:46:26.674145534Z] loading plugin "io.containerd.runtime.v2.task"... type=io.containerd.runtime.v2

INFO[2020-06-23T07:46:26.674279834Z] loading plugin "io.containerd.monitor.v1.cgroups"... type=io.containerd.monitor.v1

INFO[2020-06-23T07:46:26.675734289Z] loading plugin "io.containerd.service.v1.tasks-service"... type=io.containerd.service.v1

INFO[2020-06-23T07:46:26.675851914Z] loading plugin "io.containerd.internal.v1.restart"... type=io.containerd.internal.v1

INFO[2020-06-23T07:46:26.676034068Z] loading plugin "io.containerd.grpc.v1.containers"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676081089Z] loading plugin "io.containerd.grpc.v1.content"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676117009Z] loading plugin "io.containerd.grpc.v1.diff"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676149377Z] loading plugin "io.containerd.grpc.v1.events"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676180874Z] loading plugin "io.containerd.grpc.v1.healthcheck"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676214131Z] loading plugin "io.containerd.grpc.v1.images"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676245682Z] loading plugin "io.containerd.grpc.v1.leases"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676284266Z] loading plugin "io.containerd.grpc.v1.namespaces"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676316443Z] loading plugin "io.containerd.internal.v1.opt"... type=io.containerd.internal.v1

INFO[2020-06-23T07:46:26.676440092Z] loading plugin "io.containerd.grpc.v1.snapshots"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676482597Z] loading plugin "io.containerd.grpc.v1.tasks"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676514017Z] loading plugin "io.containerd.grpc.v1.version"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.676545815Z] loading plugin "io.containerd.grpc.v1.introspection"... type=io.containerd.grpc.v1

INFO[2020-06-23T07:46:26.677125349Z] serving... address="/var/run/docker/containerd/containerd-debug.sock"

INFO[2020-06-23T07:46:26.677301919Z] serving... address="/var/run/docker/containerd/containerd.sock"

INFO[2020-06-23T07:46:26.677337263Z] containerd successfully booted in 0.013476s

INFO[2020-06-23T07:46:26.693415067Z] parsed scheme: "unix" module=grpc

INFO[2020-06-23T07:46:26.693481428Z] scheme "unix" not registered, fallback to default scheme module=grpc

INFO[2020-06-23T07:46:26.693528406Z] ccResolverWrapper: sending update to cc: {[{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}] <nil>} module=grpc

INFO[2020-06-23T07:46:26.693554473Z] ClientConn switching balancer to "pick_first" module=grpc

INFO[2020-06-23T07:46:26.695436116Z] parsed scheme: "unix" module=grpc

INFO[2020-06-23T07:46:26.695494014Z] scheme "unix" not registered, fallback to default scheme module=grpc

INFO[2020-06-23T07:46:26.695532298Z] ccResolverWrapper: sending update to cc: {[{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}] <nil>} module=grpc

INFO[2020-06-23T07:46:26.695558869Z] ClientConn switching balancer to "pick_first" module=grpc

INFO[2020-06-23T07:46:26.747479158Z] Loading containers: start.

INFO[2020-06-23T07:46:26.887145110Z] stopping event stream following graceful shutdown error="<nil>" module=libcontainerd namespace=moby

INFO[2020-06-23T07:46:26.887630706Z] stopping healthcheck following graceful shutdown module=libcontainerd

INFO[2020-06-23T07:46:26.887672287Z] stopping event stream following graceful shutdown error="context canceled" module=libcontainerd namespace=plugins.moby

failed to start daemon: Error initializing network controller: Error creating default "bridge" network: could not find an available, non-overlapping IPv6 address pool among the defaults to assign to the network

The error message means that daemon.json has a problem with its IPv6 configuration.
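On the Docker version used in this post, this error appears when IPv6 is enabled but no IPv6 subnet is configured for the default bridge. A rough sketch of a check for that combination is shown below. The helper name check_ipv6_config is hypothetical, and the check is a plain grep heuristic, not a real JSON parser.

```shell
# Hypothetical helper: flag a daemon.json that enables IPv6 without "fixed-cidr-v6".
# Heuristic only: it greps for the keys and does not parse the JSON.
check_ipv6_config() {
    file="${1:-/etc/docker/daemon.json}"
    if grep -Eq '"ipv6"[[:space:]]*:[[:space:]]*true' "$file" &&
       ! grep -q '"fixed-cidr-v6"' "$file"; then
        echo "ipv6 is enabled without fixed-cidr-v6 in $file"
        return 1
    fi
    echo "no ipv6/fixed-cidr-v6 mismatch found in $file"
    return 0
}
```

Called without arguments, check_ipv6_config inspects /etc/docker/daemon.json; a non-zero exit status means the suspicious combination was found.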

Check daemon.json

[root@warehouse00 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["registry.local", "127.0.0.1:5001", "10.10.13.42:5000"],

"registry-mirrors": ["http://mirror.local"],

"bip": "172.18.18.1/24",

"data-root": "/var/lib/docker",

"ipv6": true,

"storage-driver": "overlay2",

"live-restore": true,

"log-opts": {

"max-size": "500m"

}

}

Modify daemon.json

[root@warehouse00 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["registry.local", "127.0.0.1:5001", "10.10.13.42:5000"],

"registry-mirrors": ["http://mirror.local"],

"bip": "172.18.18.1/24",

"data-root": "/var/lib/docker",

"ipv6": true,

"storage-driver": "overlay2",

"live-restore": true,

"log-opts": {

"max-size": "500m"

}

}

Delete the "ipv6": true entry shown above from the file.

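Start the daemon manually again to validate the change: with "ipv6": true removed, the problem no longer occurs.

Reloading Docker with systemctl, however, fails with "Job for docker.service invalid.", because the unit is still inactive and an inactive unit cannot be reloaded.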

[root@warehouse00 ~]# systemctl reload docker

Job for docker.service invalid.

Start the daemon and Docker with systemctl

// Start the Docker daemon

$ sudo systemctl start docker

// Start the Docker service

$ sudo service docker start

Problem solved.
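To confirm the fix from the command line, a small sketch like the following could be used. The function name verify_docker_running is hypothetical; it relies only on systemctl is-active and docker ps.

```shell
# Sketch: confirm that docker.service is active, then list running containers.
verify_docker_running() {
    if systemctl is-active --quiet docker; then
        # The daemon is up, so the client can reach /var/run/docker.sock again.
        docker ps
    else
        echo "docker.service is not active" >&2
        return 1
    fi
}
```

If verify_docker_running prints the container table, the original docker ps|grep elastic check can be repeated.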

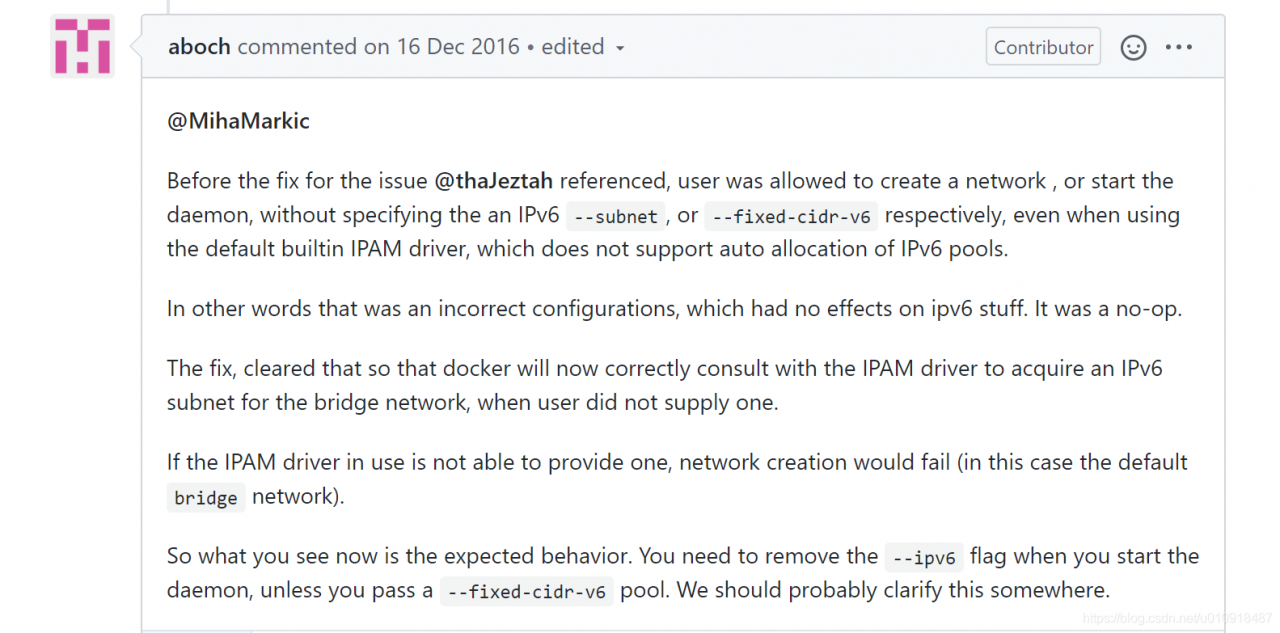

Why does "ipv6": true prevent Docker from starting normally?

Related issues: https://github.com/moby/moby/issues/36954

https://github.com/moby/moby/issues/29443

https://github.com/moby/moby/issues/29386

There are two ways to fix it: either disable IPv6 (set "ipv6": false, or delete the "ipv6": true entry), or keep IPv6 enabled and give the default bridge an IPv6 subnet with fixed-cidr-v6.
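For the fixed-cidr-v6 option, a minimal sketch of the two relevant daemon.json keys is shown below. The subnet 2001:db8:1::/64 is a placeholder from the IPv6 documentation range and the helper name write_ipv6_daemon_json is hypothetical. Substitute a prefix that is valid on your network, and merge the keys into the existing file; do not overwrite it.

```shell
# Hypothetical helper: write a minimal IPv6-enabled daemon.json to the given path.
# 2001:db8:1::/64 is a documentation-only placeholder, not a usable subnet.
write_ipv6_daemon_json() {
    target="$1"
    cat > "$target" <<'EOF'
{
  "ipv6": true,
  "fixed-cidr-v6": "2001:db8:1::/64"
}
EOF
}
```

Writing to a scratch path first, for example write_ipv6_daemon_json ./daemon.json.example, allows the result to be reviewed before /etc/docker/daemon.json is edited and Docker is restarted.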